The distributed launch utility seems unstable in use.

Running the program once with the following command

python -m torch.distributed.launch --nproc_per_node=3 --nnodes=1 --node_rank=0 --master_addr="127.0.0.1" --master_port=62123 main.py

works fine:

1.0, 0.05, 2.1814, 0.1697, 2.0053, 0.2154

1.0, 0.05, 2.1804, 0.1674, 1.9767, 0.2406

1.0, 0.05, 2.1823, 0.1703, 1.9799, 0.2352

2.0, 0.05, 2.1526, 0.1779, 2.1166, 0.1908

2.0, 0.05, 2.1562, 0.1812, 2.0868, 0.2076

2.0, 0.05, 2.1593, 0.1741, 2.0935, 0.192

3.0, 0.05, 1.9386, 0.2413, 1.8037, 0.3017

3.0, 0.05, 1.9319, 0.2473, 1.8041, 0.2903

3.0, 0.05, 1.9286, 0.2443, 1.815, 0.2939

4.0, 0.05, 1.7522, 0.3153, 1.828, 0.3131

4.0, 0.05, 1.7504, 0.3207, 1.7613, 0.3245

After the program finishes, running the same command again (i.e., calling launch with the same arguments) results in an error:

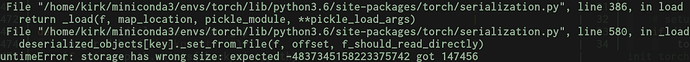

File "/home/kirk/miniconda3/envs/torch/lib/python3.6/site-packages/torch/serialization.py", line 386, in load

return _load(f, map_location, pickle_module, **pickle_load_args)

File "/home/kirk/miniconda3/envs/torch/lib/python3.6/site-packages/torch/serialization.py", line 580, in _load

deserialized_objects[key]._set_from_file(f, offset, f_should_read_directly)

RuntimeError: storage has wrong size: expected 4333514340733757174 got 256
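
The traceback ends inside `torch.load`, and the expected size is implausibly large, which would be consistent with reading a partially written or corrupted file. One possible cause, and this is only an assumption since `main.py` is not shown, is that all three processes save a checkpoint to the same path on the first run, so the second run loads a damaged file. A minimal sketch of a guard against that, where `is_main_process` and `save_atomically` are hypothetical helper names and `RANK` is the environment variable that the launch utility exports for each worker:

```python
import os
import tempfile


def is_main_process():
    # RANK is exported per worker by the launcher; default to 0 so the
    # helper also works in a plain single-process run.
    return int(os.environ.get("RANK", "0")) == 0


def save_atomically(write_fn, path):
    """Call write_fn(file_obj) on a temp file, then rename it into place.

    Only rank 0 writes; other ranks return False without touching the file.
    """
    if not is_main_process():
        return False
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            write_fn(f)
            f.flush()
            os.fsync(f.fileno())
        # The rename is atomic when source and target share a filesystem,
        # so readers see either the old file or the complete new one.
        os.replace(tmp_path, path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise
    return True
```

In a training script this could be called as `save_atomically(lambda f: torch.save(model.state_dict(), f), "checkpoint.pt")`, followed by `torch.distributed.barrier()` before any rank loads the file. The variable `model` and the file name are placeholders, not taken from the original program.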