kirk86

September 13, 2019, 3:56pm

1

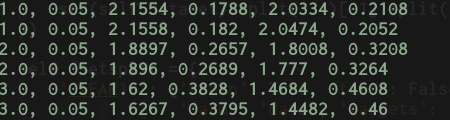

How can I prevent my log messages from being printed multiple times when I use distributed training?

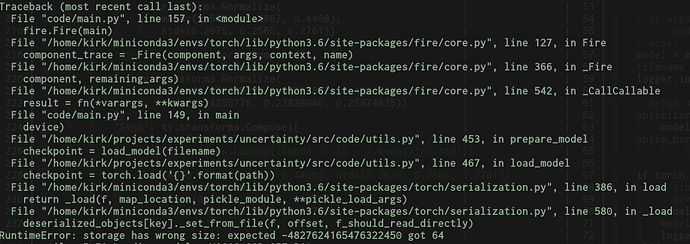

Also, I keep getting an error every time I set OMP_NUM_THREADS > 1.

Any thoughts on what might have gone wrong here?
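On the duplicated log messages: each process started for distributed training runs the same script, so every process emits the same log lines. One common workaround is to log at full verbosity only on rank 0. The snippet below is a minimal sketch of that idea, assuming the `RANK` environment variable is set for each process (as the launch utility does); the helper name `get_logger` is hypothetical and not part of PyTorch.

```python
import logging
import os


def get_logger(name="train"):
    """Return a logger that emits INFO only on rank 0 (hypothetical helper)."""
    # Assumption: RANK is set per process; fall back to 0 for single-process runs.
    rank = int(os.environ.get("RANK", "0"))

    logger = logging.getLogger(name)
    if not logger.handlers:
        # Attach the handler once so repeated calls do not duplicate output.
        handler = logging.StreamHandler()
        handler.setFormatter(
            logging.Formatter(f"[rank {rank}] %(levelname)s: %(message)s")
        )
        logger.addHandler(handler)

    # Rank 0 logs everything from INFO up; other ranks only warnings and errors.
    logger.setLevel(logging.INFO if rank == 0 else logging.WARNING)
    # Avoid a second copy of each message through the root logger.
    logger.propagate = False
    return logger
```

With this, `get_logger().info("epoch done")` would print once (from rank 0), while warnings and errors from any rank still show up.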

kirk86

September 13, 2019, 10:02pm

2

I managed to solve the error I was getting when using OMP_NUM_THREADS > 1 by passing init_method="env://" in the call to init_process_group:

torch.distributed.init_process_group(backend='nccl', init_method='env://')
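With `init_method='env://'`, the rendezvous information is read from environment variables (`MASTER_ADDR`, `MASTER_PORT`, `RANK`, `WORLD_SIZE`), which the launch utility sets for each process. A minimal sketch of a guard around that call, assuming those four variables are the ones needed; the helper names `missing_env_vars` and `init_distributed` are hypothetical:

```python
import os

# Variables the env:// init method expects to find in the environment.
REQUIRED_VARS = ("MASTER_ADDR", "MASTER_PORT", "RANK", "WORLD_SIZE")


def missing_env_vars(env=None):
    """Return the names of required variables that are not set."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if name not in env]


def init_distributed(backend="nccl"):
    """Initialize the process group, failing early with a readable message."""
    missing = missing_env_vars()
    if missing:
        raise RuntimeError(
            "env:// initialization needs these variables: " + ", ".join(missing)
        )
    # Imported here so the check above can run without torch installed.
    import torch.distributed as dist

    dist.init_process_group(backend=backend, init_method="env://")
```

Running the script directly with `python main.py` (without the launch utility) would then fail with a message listing the unset variables rather than an error from inside the rendezvous.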

The other thing I was missing is that, when calling the launch utility, you have to pass a random port via --master_port; otherwise I would get the above error:

python -m torch.distributed.launch --nproc_per_node=<number of GPUs> --master_port=<some random high port number> main.py
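Instead of guessing a high port number, you can ask the operating system for a currently unused one and pass that to `--master_port`. A minimal sketch using only the standard library; the function name `find_free_port` is hypothetical, and there is a small race window, since another program could grab the port between this check and the actual launch:

```python
import socket


def find_free_port():
    """Ask the OS for an unused TCP port on localhost and return its number."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        # Binding to port 0 lets the OS pick any free port.
        sock.bind(("127.0.0.1", 0))
        # getsockname() returns (host, port) for an AF_INET socket.
        return sock.getsockname()[1]


if __name__ == "__main__":
    # Print the port so it can be passed on the command line.
    print(find_free_port())
```

The printed value can then be used as the `--master_port` argument for all processes of a single job.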