Hi all,

I have been using DataParallel so far to train on a single node with multiple GPUs. I have seen on the forum here that DistributedDataParallel is preferred even for a single node with multiple GPUs, so I switched to distributed training.

My network is fairly large, with numerous 3D convolutions, so I can only fit a batch size of 1 (one stereo image pair) on a single GPU.

After switching to DistributedDataParallel, I noticed that the time taken by the backward pass increased from 0.7 secs to 1.3 secs.

I have set up distributed training as follows.

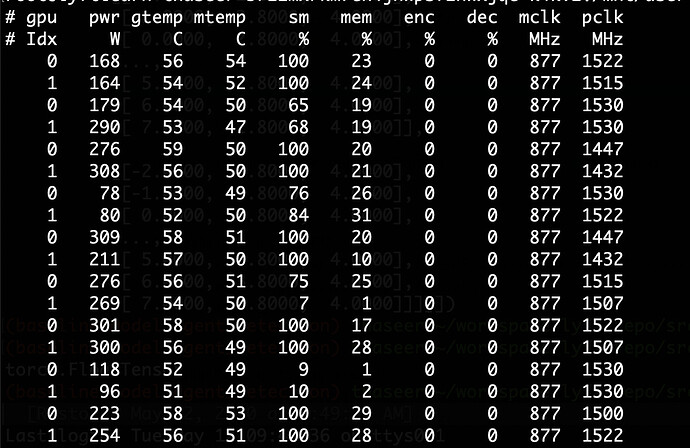

GPU utilization is also low. Could you kindly suggest what can be done to increase GPU utilization and reduce the backward pass time?

P.S. Data loading does not seem to be the bottleneck, as it currently takes 0.08 secs.
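For reference, here is a minimal sketch of how per-phase timings like the ones above can be collected. CUDA kernels are launched asynchronously, so a plain wall-clock timer around the backward pass can attribute time to the wrong phase unless the GPU is synchronized before and after the measured block. The helper name `timed` and its `sync` parameter are hypothetical and not part of the training code; on a GPU one would pass `sync=torch.cuda.synchronize`, while the runnable stand-in below uses no GPU at all.

```python
import time
from contextlib import contextmanager
from typing import Callable, Dict, Iterator, List, Optional


@contextmanager
def timed(
    label: str,
    log: Dict[str, List[float]],
    sync: Optional[Callable[[], None]] = None,
) -> Iterator[None]:
    """Append the wall-clock seconds spent in the enclosed block to log[label].

    `sync` is an optional zero-argument callable (assumption: something like
    torch.cuda.synchronize) that blocks until queued device work is done.
    """
    if sync is not None:
        sync()  # drain earlier queued work so it is not billed to this block
    start = time.perf_counter()
    try:
        yield
    finally:
        if sync is not None:
            sync()  # wait for work launched inside the block to finish
        log.setdefault(label, []).append(time.perf_counter() - start)


timings: Dict[str, List[float]] = {}

# CPU-only stand-in for `loss.backward()`; on a GPU this would read
#   with timed("backward", timings, sync=torch.cuda.synchronize): loss.backward()
with timed("backward", timings):
    sum(i * i for i in range(10_000))

print({name: f"{sum(v) / len(v):.4f}s" for name, v in timings.items()})
```

Timing the forward pass, backward pass, and optimizer step separately in this way makes it easier to see whether the extra time really sits in the backward pass, which is where DistributedDataParallel performs its gradient all-reduce.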

    import logging
    import os
    from pathlib import Path

    import torch
    import torch.distributed as dist
    import torch.nn as nn
    from torch import multiprocessing
    from torch.utils.data import Dataset

    # CfgNode, MyModel and _get_world_size are defined elsewhere in the project.

    # Launcher (excerpt): one process is spawned per GPU.
    if config.distributed_training.enable:
        logging.info(f"spawning multiprocesses with {config.distributed_training.num_gpus} gpus")
        # spawn() passes the process index (0..nprocs-1) as the first argument.
        multiprocessing.spawn(  # type: ignore
            _train_model,
            nprocs=config.distributed_training.num_gpus,
            args=(pretrained_model, config, train_dataset, output_dir),
        )


    def _train_model(
        gpu_index: int, pretrained_model: str, config: CfgNode, train_dataset: Dataset, output_dir: Path
    ) -> None:
        train_sampler = None
        world_size = _get_world_size(config)
        local_rank = gpu_index
        if config.distributed_training.enable:
            local_rank = _setup_distributed_process(gpu_index, world_size, config)
            # Each process only sees its own shard of the dataset.
            train_sampler = torch.utils.data.DistributedSampler(
                train_dataset, num_replicas=world_size, rank=local_rank
            )
        model = MyModel(config)
        torch.cuda.set_device(local_rank)
        model = _transfer_model_to_device(model, local_rank, gpu_index, config)
        .........


    def _setup_distributed_process(gpu_index: int, world_size: int, config: CfgNode) -> int:
        logging.info("Setting Distributed DataParallel ....")
        num_gpus = config.distributed_training.num_gpus
        # This is the global rank; it equals the CUDA device index only on a single node.
        local_rank = config.distributed_training.ranking_within_nodes * num_gpus + gpu_index
        torch.cuda.set_device(local_rank)
        _init_process(rank=local_rank, world_size=world_size, backend="nccl")
        logging.info("Done...")
        return local_rank


    def _init_process(rank: int, world_size: int, backend: str = "gloo") -> None:
        """Initialize the distributed environment."""
        os.environ["MASTER_ADDR"] = "localhost"
        os.environ["MASTER_PORT"] = "29500"
        dist.init_process_group(  # type: ignore
            backend=backend, init_method="env://", world_size=world_size, rank=rank
        )


    def _transfer_model_to_device(
        model: nn.Module, local_rank: int, gpu_index: int, config: CfgNode
    ) -> nn.Module:
        if config.distributed_training.enable:
            model.cuda(local_rank)
            # output_device takes a single device, not a list.
            model = torch.nn.parallel.DistributedDataParallel(
                model, device_ids=[local_rank], output_device=local_rank
            )
        elif torch.cuda.device_count() > 1:
            model = torch.nn.DataParallel(model).cuda()
        else:
            torch.cuda.set_device(gpu_index)
            model = model.cuda(gpu_index)
        # Return the wrapper: rebinding `model` here is not visible to the caller.
        return model
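
A note on the rank arithmetic in `_setup_distributed_process`: the value computed there (node rank times GPUs per node, plus the GPU index) is a global rank, and it only coincides with the CUDA device index when everything runs on one node. The sketch below separates the two values. The names `ProcessPlacement` and `place_process` are hypothetical, introduced only for illustration, and the sketch assumes every node has the same number of GPUs.

```python
from typing import NamedTuple


class ProcessPlacement(NamedTuple):
    global_rank: int   # rank for init_process_group and DistributedSampler
    local_device: int  # CUDA device index on this node, for torch.cuda.set_device


def place_process(node_rank: int, gpus_per_node: int, gpu_index: int) -> ProcessPlacement:
    """Map (node, GPU slot) to a global rank and a node-local device index.

    Assumes every node has exactly `gpus_per_node` GPUs.
    """
    if node_rank < 0:
        raise ValueError(f"node_rank must be non-negative, got {node_rank}")
    if not 0 <= gpu_index < gpus_per_node:
        raise ValueError(f"gpu_index {gpu_index} is outside 0..{gpus_per_node - 1}")
    return ProcessPlacement(
        global_rank=node_rank * gpus_per_node + gpu_index,
        local_device=gpu_index,
    )


# Single node with 4 GPUs: both values are identical.
print(place_process(node_rank=0, gpus_per_node=4, gpu_index=2))
# Second node of a 2x4 setup: the global rank is 6, but the device index is still 2.
print(place_process(node_rank=1, gpus_per_node=4, gpu_index=2))
```

On a single node the distinction makes no difference, which matches the setup described here, but passing the global rank to `torch.cuda.set_device` would fail on any node other than the first.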

The GPU utilization is as follows: