Hi, I am following the distributed data parallel tutorial for training on multiple GPUs. I am running the code on an HPC server, requesting 2 GPUs on a single node.

I have managed to successfully run the code from the "Getting Started with Distributed Data Parallel" tutorial (PyTorch Tutorials 2.6.0+cu124 documentation) using the mp.spawn syntax.

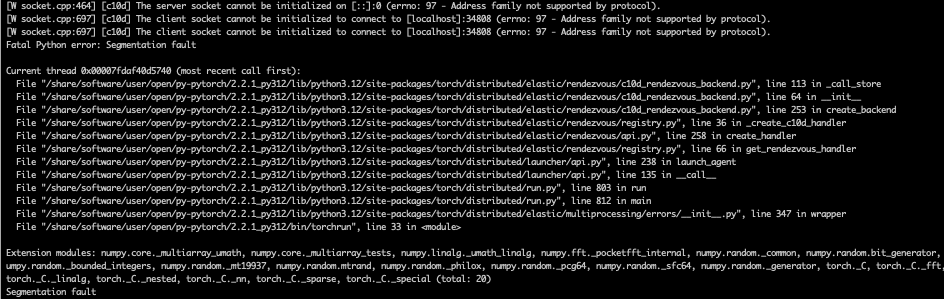

However, when I launch the same script with torchrun instead, I get the error below:

Here is the command I use to run it:

torchrun --nnodes=1 --nproc_per_node=2 --standalone distributed_tutorial_script.py
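For context, a script launched with torchrun normally does not call mp.spawn itself; each worker process reads its rank from the environment variables torchrun sets (RANK, LOCAL_RANK, WORLD_SIZE). Below is a minimal sketch of what that entry point might look like. It is not the tutorial's exact code: the helper name `read_torchrun_env`, the `nn.Linear` placeholder model, and the choice of the `nccl` backend are assumptions for illustration.

```python
import os


def read_torchrun_env(env=os.environ):
    """Collect the per-worker values that torchrun exports (hypothetical helper)."""
    return {
        "rank": int(env["RANK"]),              # global rank of this process
        "local_rank": int(env["LOCAL_RANK"]),  # rank within this node, used as GPU index
        "world_size": int(env["WORLD_SIZE"]),  # total number of processes
    }


def main():
    # Imported here so the helper above can be used without PyTorch installed.
    import torch
    import torch.distributed as dist
    from torch.nn.parallel import DistributedDataParallel as DDP

    info = read_torchrun_env()

    # torchrun also sets MASTER_ADDR and MASTER_PORT, so the default
    # env:// initialization needs no explicit rank or world_size.
    dist.init_process_group(backend="nccl")  # assumption: NCCL, since GPUs are used
    torch.cuda.set_device(info["local_rank"])

    # Placeholder model standing in for the tutorial's model.
    model = torch.nn.Linear(10, 10).to(info["local_rank"])
    ddp_model = DDP(model, device_ids=[info["local_rank"]])

    out = ddp_model(torch.randn(4, 10, device=info["local_rank"]))
    print(f"rank {info['rank']}/{info['world_size']} output shape: {tuple(out.shape)}")

    dist.destroy_process_group()


# Only run when started by torchrun, which is what defines LOCAL_RANK.
if __name__ == "__main__" and "LOCAL_RANK" in os.environ:
    main()
```

If the script still calls mp.spawn or hard-codes MASTER_ADDR and MASTER_PORT while being started by torchrun, that mismatch would be one thing worth checking.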

Any help, hints or pointers are very much appreciated.

Thanks!