Hi

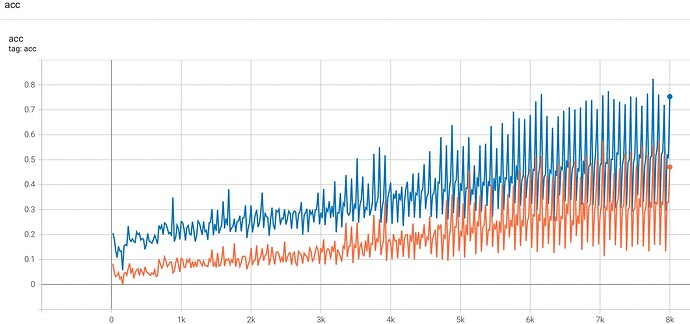

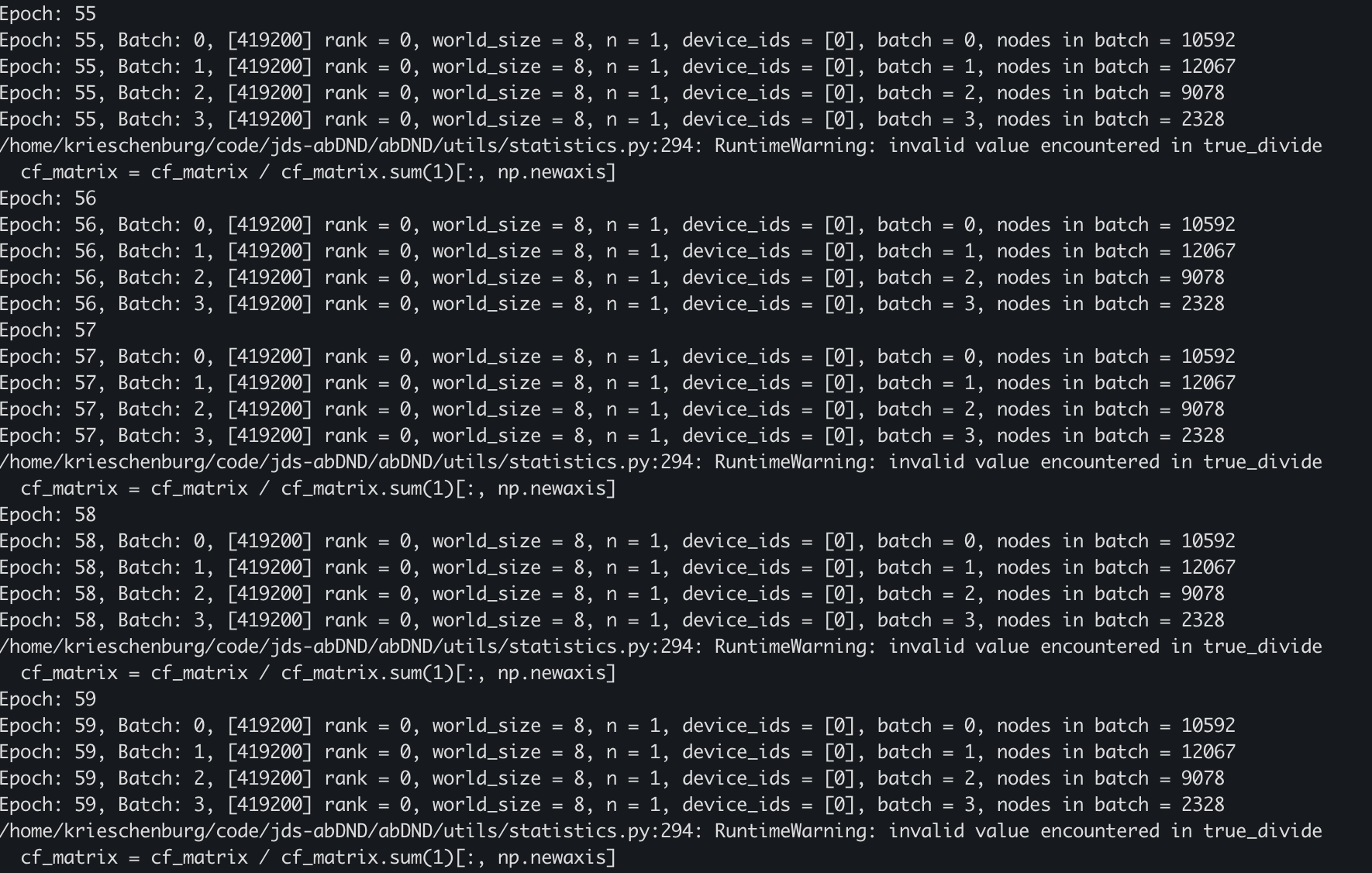

I’m trying to use the DistributedSampler class with shuffle=True. However, my batches do not seem to be shuffled across epochs or across devices. See the first attached image, “TrainingPrintOut.png”: I’m printing the number of nodes per batch assigned to the GPU with rank 0. Across two different epochs, each batch assigned to rank 0 has the same number of nodes at each corresponding iteration, which (correct me if I’m wrong) indicates that the data aren’t being reshuffled. The same effect shows up in the training performance metrics (see the second attached image, “TrainingAccuracy.png”), which show consistent periodic spikes and valleys as a function of batch.

Here is how I set up the datasets, DistributedSamplers, and DataLoaders:

    DATASET_MAP = {'D1': datasets.D1_Dataset,
                   'D2': datasets.D2_Dataset}

    # load datasets
    datasets = {subset: DATASET_MAP[args.dataset](root=root_dir,
                                                  data_subset=subset,
                                                  transform=xfm,
                                                  in_memory=args.in_memory,
                                                  feature_type=args.feature_type,
                                                  n_samples=sample_sizes[subset],
                                                  verbose=0)
                for subset in ['train', 'val']}

    # set up data samplers
    samplers = {subset: DistributedSampler(datasets[subset],
                                           rank=local_rank,
                                           shuffle=True)
                for subset in ['train', 'val']}

    # set up dataloaders
    loaders = {subset: DataLoader(datasets[subset],
                                  batch_size=args.batch_size,
                                  num_workers=0,
                                  sampler=samplers[subset])
               for subset in ['train', 'val']}

Can anyone shed light on how to actually shuffle the dataset when using DistributedSampler?

Thanks.

Kristian
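
One thing worth checking, since the training loop is not shown above: the PyTorch documentation for DistributedSampler says that `set_epoch()` has to be called at the start of every epoch, otherwise the same ordering is reused each time. The following is a minimal single-process sketch of that behaviour, not the original training code. The toy `TensorDataset`, the explicit `num_replicas=2`, `seed=0`, and the helper `indices_for_epoch` are all assumptions made for illustration.

```python
import torch
from torch.utils.data import TensorDataset
from torch.utils.data.distributed import DistributedSampler

# Toy stand-in for the real dataset (assumption): 100 samples holding 0..99.
dataset = TensorDataset(torch.arange(100))

# Passing num_replicas and rank explicitly means no process group has to be
# initialized, so this check runs in a single process.
sampler = DistributedSampler(dataset, num_replicas=2, rank=0,
                             shuffle=True, seed=0)


def indices_for_epoch(epoch, call_set_epoch):
    """Return the dataset indices that rank 0 would draw in this epoch."""
    if call_set_epoch:
        sampler.set_epoch(epoch)
    return list(sampler)


# Without set_epoch the sampler's internal epoch stays at 0,
# so every epoch yields the same order.
without = [indices_for_epoch(e, call_set_epoch=False) for e in range(3)]

# With set_epoch the permutation is reseeded from seed + epoch.
with_ = [indices_for_epoch(e, call_set_epoch=True) for e in range(3)]

print("same order every epoch without set_epoch:",
      without[0] == without[1] == without[2])
print("distinct orders with set_epoch:",
      len({tuple(ix) for ix in with_}))
```

If this is the cause, the equivalent change in a real loop would be a call such as `samplers['train'].set_epoch(epoch)` before iterating over `loaders['train']` in each epoch.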