I have to deploy several PyTorch models (around 4) in production. For demonstration purposes, I have created a minimal example showing how I am deploying the models using Flask and Docker Swarm.

The problem is that when I load test the API endpoints, the memory utilization of the container/service keeps increasing, and it crashes after some time. I tried increasing the container memory limit in the `docker-compose.yml` file, but that only delays the crash.

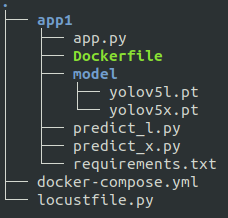
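One way to check whether the growth comes from Python objects that outlive each request is to compare traced memory before and after a batch of simulated requests. The sketch below does this with the standard-library `tracemalloc` module. It is not the code from this question: `leaky_handler`, `clean_handler`, and `growth_after` are hypothetical names, and the handlers only stand in for a Flask view. Note that `tracemalloc` only tracks allocations made through Python's own allocators, so memory held by PyTorch's native (C++) allocator will most likely not show up here. For that, container-level metrics such as `docker stats` remain the signal.

```python
import tracemalloc

# Hypothetical module-level store that is never cleared (a common source of growth).
_history = []


def leaky_handler(payload):
    # Hypothetical handler: keeps a reference to every result,
    # so retained memory grows with the number of requests.
    result = [x * 2 for x in payload]
    _history.append(result)
    return sum(result)


def clean_handler(payload):
    # Hypothetical handler: the result is dropped after returning,
    # so nothing accumulates between calls.
    result = [x * 2 for x in payload]
    return sum(result)


def growth_after(handler, n_requests=1000, payload_size=100):
    """Return bytes of Python-traced memory still allocated after n_requests calls."""
    payload = list(range(payload_size))
    tracemalloc.start()
    baseline, _peak = tracemalloc.get_traced_memory()
    for _ in range(n_requests):
        handler(payload)
    current, _peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return current - baseline


if __name__ == "__main__":
    print("leaky handler retained bytes:", growth_after(leaky_handler))
    print("clean handler retained bytes:", growth_after(clean_handler))
```

If the retained size keeps climbing with `n_requests` for a real handler, something in Python is holding on to per-request objects; if it stays flat while the container's memory still grows, the growth is more likely outside the Python heap.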

Here is the complete code: