Hi,

I’m studying how to use FSDP effectively from the following video:

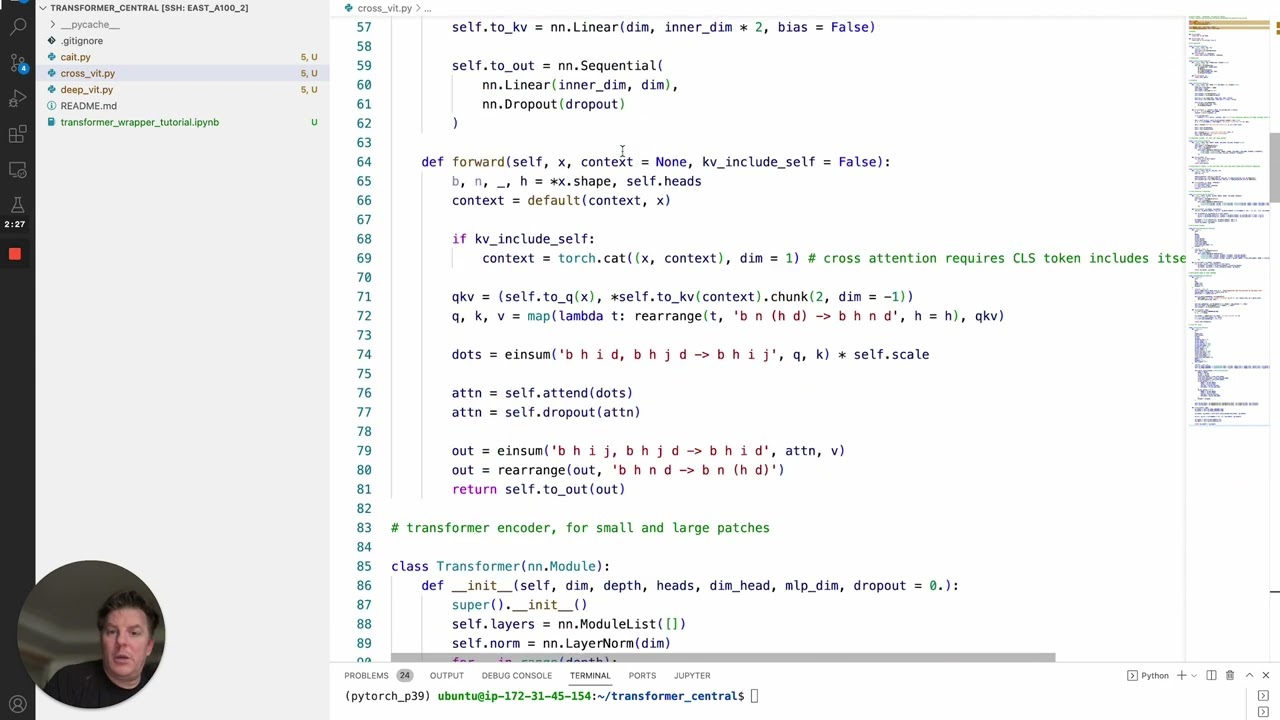

In summary, the video teaches how to choose which layers to shard with `transformer_auto_wrap_policy`. The presenter says we should shard the layer classes that wrap the multi-head attention and feed-forward layers.
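As far as I understand it, the setup from the video looks roughly like the sketch below. `TransformerBlock` is a hypothetical stand-in I made up for whatever layer class the real model uses, and the sizes are arbitrary. The snippet only builds the policy and queries it directly, so it runs without a process group.

```python
import functools

import torch.nn as nn
from torch.distributed.fsdp.wrap import transformer_auto_wrap_policy


class TransformerBlock(nn.Module):
    """Hypothetical layer class wrapping multi-head attention and a feed-forward net."""

    def __init__(self, dim: int = 64, heads: int = 4):
        super().__init__()
        self.attn = nn.MultiheadAttention(dim, heads, batch_first=True)
        self.ff = nn.Sequential(
            nn.Linear(dim, 4 * dim), nn.GELU(), nn.Linear(4 * dim, dim)
        )

    def forward(self, x):
        x = x + self.attn(x, x, x, need_weights=False)[0]
        return x + self.ff(x)


# Bind the layer class; FSDP calls the resulting policy on each submodule.
auto_wrap_policy = functools.partial(
    transformer_auto_wrap_policy,
    transformer_layer_cls={TransformerBlock},
)

# With recurse=False the policy answers:
# "should this module become its own FSDP unit?"
block_is_wrapped = auto_wrap_policy(TransformerBlock(), False, 0)
linear_is_wrapped = auto_wrap_policy(nn.Linear(8, 8), False, 0)

# Inside a process group (e.g. launched with torchrun), the policy would be
# passed as: FullyShardedDataParallel(model, auto_wrap_policy=auto_wrap_policy)
```

So only instances of the listed layer class become separate FSDP units; a plain `nn.Linear` on its own does not.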

I have one question that the video doesn’t make clear: when we fine-tune a pretrained model with most of its parameters frozen, can we still shard the frozen layers across ranks, even though they are not trainable and have no gradients or optimizer states?

An example would be an LLM where all layers are frozen and only the LM head is trainable. If we still follow the approach from the video above, are there still benefits?
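To make the question concrete, here is a back-of-the-envelope sketch of the memory I have in mind. The numbers (7B frozen parameters, bf16, 8 ranks) are made up for illustration, and the helper `per_rank_param_bytes` is my own, not part of any library. It counts only the parameter storage each rank holds at rest. It ignores the full parameters of a unit that get gathered temporarily during forward, so real peak usage would be higher.

```python
def per_rank_param_bytes(
    num_params: int, bytes_per_param: int, world_size: int, sharded: bool
) -> float:
    """Parameter storage held by one rank, ignoring temporary all-gathers."""
    total = num_params * bytes_per_param
    return total / world_size if sharded else float(total)


# Hypothetical setup: 7e9 frozen parameters stored in bf16 (2 bytes) on 8 ranks.
frozen_params = 7_000_000_000
GIB = 2**30

replicated_gib = per_rank_param_bytes(frozen_params, 2, 8, sharded=False) / GIB
sharded_gib = per_rank_param_bytes(frozen_params, 2, 8, sharded=True) / GIB

print(f"replicated: {replicated_gib:.2f} GiB per rank")
print(f"sharded:    {sharded_gib:.2f} GiB per rank")
```

If this estimate is right, frozen weights would still take up a full copy on every rank unless they are sharded, even though they carry no gradients or optimizer states. That is the part I would like confirmed.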

Thank you.