I was not sure how to title this.

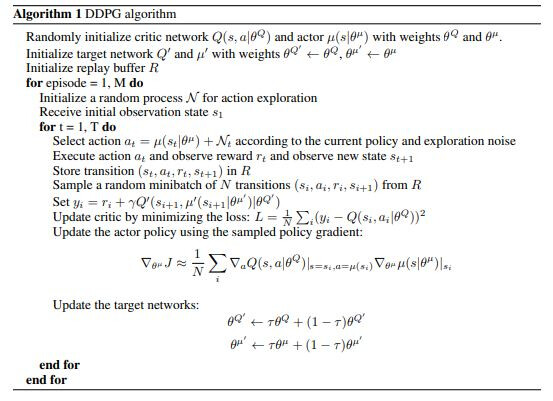

Let’s start with an example assume we have DDPG algorithm as shown below

Quick explanation:

After the Init phase we have 2 loops

The inner loop which executes for T time steps (of the simulation)

is responsible for learning policies for each time step

while the outer loop

makes the agent repeat the learning process across multiple simulations (taking the learned policies from the previous states and applying them to new states)

My first Question/case is, assume I set the value of T (the total time steps for the simulation) to 1 (effectively removing the loop part of the inner loop), would it still count as DDPG or should it be considered something else.

My Second Question/case is, assume we treat the entire simulation as a single time step (again effective removing the loop element of the inner loop) would it still count as DDPG.

In Both cases the algorithm still remains online off-policy RL.

Thanks!