Hello, everyone.

I am studying RNNs and hope to use one for image classification.

I am puzzled about the data loading process. Is an image read from top to bottom or from left to right? I am not sure how to set sequence_length and input_size.

Thanks for your help!

By default, if you are using ImageFolder, it uses PIL to load the images from the folder, and you can override the loader. Why do you care about that? If you explain what your task is, we can help better; "RNN" on its own is too generic!

Thanks so much.

Let me explain.

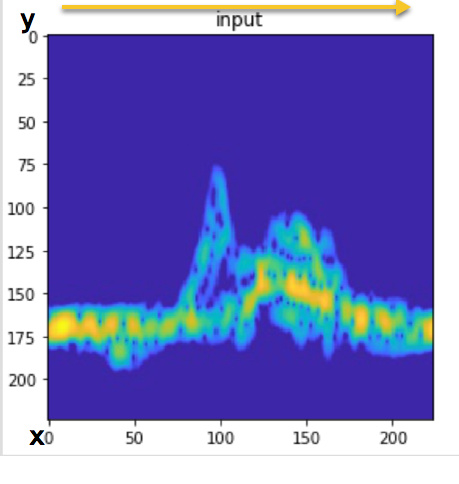

The images I want to classify are not natural images. They are spectrograms: the x-axis corresponds to time and the y-axis to frequency. I think a CNN is not appropriate for this kind of image, because spectrograms are not natural images like pictures of cats or dogs.

So I want to use an RNN, specifically an LSTM, to read the content of each image in time order (the arrow direction).

I see your point, but convnets also work on non-natural data. Once you have the spectrogram, it is a static image, and if you have enough data (a representative amount for your task), you can use a convnet on it. RNNs are for classifying sequences: for instance, you feed them every sample of your PCM signal and get as output a single label, or a label for every sample. Depending on the task, there are different kinds of architectures. You may want to check the WaveNet paper; there should be a nice PyTorch implementation.
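To make the "single label vs. a label for every sample" distinction concrete, here is a rough sketch using `nn.LSTM`. All sizes are made up for illustration, and the linear `head` is just one possible way to turn hidden states into class scores.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Illustrative sizes only (assumptions, not taken from any real dataset).
batch, seq_len, input_size, hidden_size, num_classes = 2, 5, 3, 8, 4

lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
head = nn.Linear(hidden_size, num_classes)

x = torch.randn(batch, seq_len, input_size)  # (batch, time, features)
out, (h_n, c_n) = lstm(x)                    # out: (batch, time, hidden)

# One label for every time step: apply the head to every hidden state.
per_step_logits = head(out)                  # (batch, time, classes)

# One label for the whole sequence: use only the last time step.
sequence_logits = head(out[:, -1, :])        # (batch, classes)
```

For a single-layer, unidirectional LSTM, `out[:, -1, :]` is the same tensor as the final hidden state `h_n[-1]`.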

Thank you so much. Convnets can classify this kind of spectrogram, but I want to try an RNN, because I think it can extract more information by processing the columns one by one. I think an RNN is more suitable.

I want the RNN to read one column at each time step, but I don’t know how to do this after using ImageFolder to load my dataset. Could you help me?

I would write a script to extract a feature vector from every column and feed those vectors to e.g. an LSTM. If you have one label per spectrogram, take the LSTM output at the end of the sequence and compare it to your label with a loss function, so you can backpropagate through time. That’s the basic approach.
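A minimal sketch of that approach, under a few assumptions: the spectrograms arrive as single-channel tensors of shape `(batch, 1, height, width)`, the raw pixel values of each column serve as the feature vector (the simplest choice; a learned extractor could replace it), and all sizes plus the class name `ColumnLSTMClassifier` are hypothetical. In these terms, `input_size` is the image height (frequency bins) and the sequence length is the image width (time frames).

```python
import torch
import torch.nn as nn


class ColumnLSTMClassifier(nn.Module):
    """Hypothetical model: reads a spectrogram column by column, left to right."""

    def __init__(self, n_freq_bins, hidden_size, num_classes):
        super().__init__()
        # input_size = number of rows (frequency bins) in one column.
        self.lstm = nn.LSTM(input_size=n_freq_bins,
                            hidden_size=hidden_size,
                            batch_first=True)
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, images):
        # images: (batch, 1, height, width)
        # Drop the channel axis, then swap height and width so that each
        # time step is one image column: (batch, width, height).
        seq = images.squeeze(1).permute(0, 2, 1)
        out, _ = self.lstm(seq)            # (batch, width, hidden)
        return self.fc(out[:, -1, :])      # output at the last column only


torch.manual_seed(0)
images = torch.rand(4, 1, 64, 100)         # fake batch: 64 freq bins, 100 frames
labels = torch.randint(0, 3, (4,))         # one label per spectrogram

model = ColumnLSTMClassifier(n_freq_bins=64, hidden_size=32, num_classes=3)
logits = model(images)                     # (4, 3)
loss = nn.CrossEntropyLoss()(logits, labels)
loss.backward()                            # backprop through time via autograd
```

In a real training loop, `images` and `labels` would come from a `DataLoader` over your dataset, followed by an optimizer step.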

Wow. That would be great.

Thank you very much for your help.