Hello! I’m new to PyTorch with CUDA and I’m trying to set it up on WSL. My CUDA version is 12.5, but the version matrix only goes up to 12.4. Is the matrix outdated, or should I downgrade my CUDA for PyTorch to work?

Thanks a lot

Your locally installed CUDA toolkit won’t be used unless you build PyTorch from source or build a custom CUDA extension, since the PyTorch binaries ship with their own CUDA dependencies.
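To see which CUDA runtime a PyTorch install was built against (as opposed to the local toolkit that nvcc reports), a small check along these lines may help. This is only a sketch: the helper name `describe_torch_build` is made up for illustration, while `torch.__version__`, `torch.version.cuda`, and `torch.cuda.is_available()` are existing PyTorch attributes.

```python
def describe_torch_build():
    """Summarize the installed PyTorch build (hypothetical helper, not a PyTorch API)."""
    try:
        import torch
    except ImportError:
        # PyTorch is not installed in this environment.
        return {"installed": False}
    return {
        "installed": True,
        "torch_version": torch.__version__,
        # CUDA runtime bundled with the binary; None for CPU-only builds.
        "bundled_cuda": torch.version.cuda,
        # True only if the NVIDIA driver can actually reach a GPU.
        "cuda_available": torch.cuda.is_available(),
    }


print(describe_torch_build())
```

If `bundled_cuda` shows a version but `cuda_available` is False, the driver side is the more likely place to look.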

I’m also having issues getting CUDA and PyTorch to work.

I have CUDA 12.5 and PyTorch 2.2 installed in my Anaconda environment; however, when I check whether my GPU is available, it always returns False.

I’m running this relatively simple script to check:

    import torch

    if torch.cuda.is_available():
        print("CUDA is available. GPU will be used.")
        device = torch.device("cuda")
    else:
        print("CUDA is not available. CPU will be used.")
        device = torch.device("cpu")

    print(f"Device being used: {device}")

    # Create a small tensor on the selected device as a smoke test.
    tensor = torch.rand(3, 3).to(device)
    print(tensor)

Very frustrating, as I am currently trying to train a custom YOLO model on my GPU after upgrading my laptop to one with an i7-13800H and an RTX 4060 8GB GPU.

Hello, I am trying to install a PyTorch version compatible with CUDA 12.5, but I have not been successful. The website currently offers CUDA 12.1, and I cannot find a version compatible with 12.5. When I install the 12.1 version, I get the following warning and CUDA is reported as unavailable:

    >>> import torch
    >>> torch.cuda.is_available()
    /home/user/conda/lib/python3.10/site-packages/torch/cuda/__init__.py:118: UserWarning: CUDA initialization: Unexpected error from cudaGetDeviceCount(). Did you run some cuda functions before calling NumCudaDevices() that might have already set an error? Error 500: named symbol not found (Triggered internally at …/c10/cuda/CUDAFunctions.cpp:108.)
      return torch._C._cuda_getDeviceCount() > 0
    False

You can install any PyTorch binary with CUDA support (11.8, 12.1, or 12.4) as long as your NVIDIA driver is properly installed and can communicate with your GPU, which does not seem to be the case.
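One way to check the driver independently of PyTorch is to ask `nvidia-smi`, the tool that ships with the NVIDIA driver. The sketch below assumes `nvidia-smi` is on the PATH when the driver is installed correctly; `query_driver` is a hypothetical helper name.

```python
import shutil
import subprocess


def query_driver():
    """Return 'name, driver_version' lines reported by nvidia-smi, or None.

    Hypothetical helper: None means nvidia-smi is missing or failed, which
    points to a driver problem rather than a PyTorch problem.
    """
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return None
    try:
        result = subprocess.run(
            [exe, "--query-gpu=name,driver_version", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    # One line per detected GPU.
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


print(query_driver())
```

If this prints None or an empty list, fixing the driver setup (or simply rebooting after a driver install) should come before reinstalling PyTorch.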

We are sometimes seeing similar issues and just yesterday it seems a restart of the laptop was needed.

I changed my NVIDIA driver and my problem was solved. Thank you!

Just wanted to confirm that the restart worked for me too. I kept checking in my notebook whether torch could see my GPU, and it kept returning False. I had done everything (installed torch, CUDA 12.5, etc.) and it didn’t work until I read your message about the restart; then it returned True.

I have PyTorch 2.2 and CUDA 12.5, and no GPU is shown. I tried restarting the laptop, but it made no difference. Please help.

UPDATE: I switched from the conda environment to a Python venv and it worked.

Hello guys,

I’m facing a similar situation. I purchased an RTX 4080 SUPER, which was released earlier this year. It’s CUDA 12.5 out of the box.

Tried getting PyTorch to recognize the GPU: fail.

Tried downgrading the driver in order to use CUDA 12.4 or earlier: fail. No such driver exists for this GPU.

I had to replace the GPU with an RTX 3080 instead. I wanted to use the more recent one, but I’m hitting a wall.

Any ideas?

Update: We installed the CUDA toolkit, and even the toolkit is unable to provide a suitable driver for the RTX 4080 SUPER; it’s not recognizing the GPU.

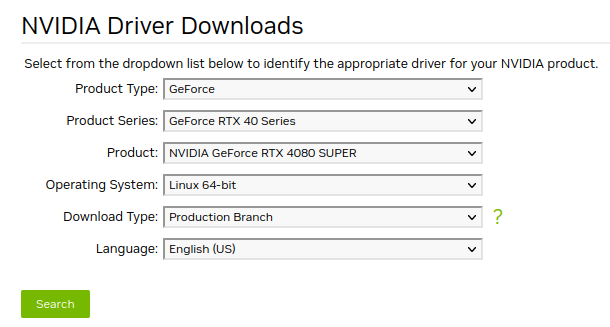

That’s not true: you can install any recent driver, such as 550.90.07, by selecting the right GPU here:

It seems you are facing more setup issues unrelated to PyTorch so make sure your system is able to recognize and use the GPU before installing PyTorch.

Perfect. Will do as you say. If it’s supposed to work, we should be able to figure it out.

Hello, I have an NVIDIA RTX A500 Laptop GPU and installed CUDA 12.5 (nvcc --version). In my conda environment I have PyTorch 2.3.1+cpu, but torch.cuda.is_available() still returns False. How would I go about fixing this?

Which is expected since you’ve installed the CPU-only binary. Install a PyTorch binary with CUDA runtime dependencies instead.
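The `+cpu` suffix in the version string is what gives this away. As a rough illustration, the suffix can be read off programmatically. `build_variant` below is a hypothetical helper, and it assumes the `+tag` convention seen in pip wheels such as `2.3.1+cpu`; builds installed another way may carry no suffix at all.

```python
def build_variant(version):
    """Return the local build tag of a PyTorch version string, or None.

    Illustrative helper (not a PyTorch API). A version without a '+tag'
    suffix returns None, since the string alone does not identify the build.
    """
    _, sep, tag = version.partition("+")
    return tag if sep else None


print(build_variant("2.3.1+cpu"))    # cpu
print(build_variant("2.3.1+cu121"))  # cu121
print(build_variant("2.3.1"))        # None
```

In practice you would pass `torch.__version__` to it; a result of `cpu` means a CUDA-enabled binary needs to be installed instead.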

Hello, I have CUDA 12.5. Which PyTorch version can I install?

Take a look at my previous post: Does Pytorch work with CUDA 12.5? - #6 by ptrblck

I don’t think it’s possible to successfully use the CUDA12.5 version of PyTorch at this time.

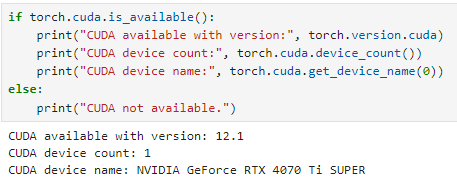

Recently I got an RTX 4070 Ti S GPU and installed the 555.85 driver. I have run into a lot of trouble trying to use the latest CUDA version (12.5) with PyTorch, and CUDA 12.4 has the same problem.

The problem is:

The PyTorch docs offer a command to install the PyTorch preview (nightly) build with CUDA 12.4, but it does not work for me. My host CUDA version is 12.5.

The solution was to downgrade from CUDA 12.5 to CUDA 12.1, which the stable PyTorch release (2.3.1) supports:

    conda install nvidia/label/cuda-12.1.1::cuda-toolkit
    conda install pytorch torchvision torchaudio pytorch-cuda=12.1 -c pytorch -c nvidia

Finally, I am able to use the CUDA build of PyTorch on this relatively new GPU.

If someone manages to get PyTorch working with CUDA 12.5, please let me know. Thanks a lot!

I was running into the same problems. I was able to solve this with:

    pip uninstall torch torchvision torchaudio
    pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu124

Afterwards, PyTorch recognized my NVIDIA 4090 and I could use it.
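For anyone scripting this, the index URL can be derived from the CUDA version of the binary you want. The sketch below is only an illustration: `wheel_index_url` is a made-up helper, the list of versions is the one mentioned earlier in this thread (11.8, 12.1, 12.4), and it assumes the other versions follow the same `cuXYZ` URL pattern as the `cu124` command above.

```python
# CUDA versions named in this thread as having prebuilt binaries at the time.
SUPPORTED_CUDA = ("11.8", "12.1", "12.4")


def wheel_index_url(cuda_version):
    """Build the pip index URL for a CUDA-enabled PyTorch wheel (hypothetical helper)."""
    if cuda_version not in SUPPORTED_CUDA:
        raise ValueError(
            f"No prebuilt binary listed for CUDA {cuda_version}; "
            f"choose one of {', '.join(SUPPORTED_CUDA)}"
        )
    # Assumption: the tag is 'cu' plus the version without the dot, e.g. cu124.
    return "https://download.pytorch.org/whl/cu" + cuda_version.replace(".", "")


print(wheel_index_url("12.4"))
```

Note that asking for 12.5 raises an error here on purpose: the binary's CUDA version does not have to match the locally installed toolkit, so picking 12.4 with a 12.5 toolkit is fine.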

Hi @samaegrii,

Could you elaborate on how you changed drivers? Does the driver communicate properly with the GPU now?

I run Pop!_OS 22.04 LTS with CUDA 12.5 and am not finding any compatible resources. I followed a few blogs, but they didn’t help.