When I try to script a method that calls torch.nn.Dropout, I get this error message:

RuntimeError:
unknown builtin op:
    """
    Chunking.

    Parameters
    ----------
    z_in : ``torch.LongTensor``, required.
        The output of the character-level lstms.
    """
    z_in = self.drop_(z_in)
           ~~~~~~~~~ <--- HERE
    out = self.chunk_layer(z_in).squeeze(1)
    return out

self.drop = torch.nn.Dropout(p=droprate)

@torch.jit.script
def chunking(self, z_in):
    """
    Chunking.

    Parameters
    ----------
    z_in : ``torch.LongTensor``, required.
        The output of the character-level lstms.
    """
    z_in = self.drop(z_in)
    out = self.chunk_layer(z_in).squeeze(1)
    return out


Yes, dropout should be supported:

import torch
import torch.nn as nn


class MyModel(torch.jit.ScriptModule):
    def __init__(self):
        super(MyModel, self).__init__()
        self.fc1 = nn.Linear(10, 10)
        self.drop = nn.Dropout()

    # Methods of a ScriptModule are compiled with script_method
    @torch.jit.script_method
    def forward(self, x):
        x = self.fc1(x)
        x = self.drop(x)
        return x


model = MyModel()
x = torch.randn(1, 10)
output = model(x)

traced_model = torch.jit.trace(model, x)
traced_model(x)

Could you post a code snippet to reproduce this issue?


Thank you for your reply. I solved it: the decorator should be @torch.jit.script_method rather than @torch.jit.script. Thank you again!
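For anyone who hits the same error, here is a minimal sketch of the fix described above. It assumes a PyTorch release in which torch.jit.ScriptModule and torch.jit.script_method are still available. The class name Chunker and the layer sizes are made up for illustration, and the method is named forward here rather than chunking to keep the example small.

```python
import torch
import torch.nn as nn


class Chunker(torch.jit.ScriptModule):
    # Hypothetical stand-in for the module in the question; sizes are made up.
    def __init__(self, in_dim=8, droprate=0.5):
        super(Chunker, self).__init__()
        self.drop = nn.Dropout(p=droprate)
        self.chunk_layer = nn.Linear(in_dim, 1)

    # A method that uses self is decorated with script_method.
    # torch.jit.script is meant for free functions without self.
    @torch.jit.script_method
    def forward(self, z_in):
        z_in = self.drop(z_in)
        out = self.chunk_layer(z_in).squeeze(1)
        return out


model = Chunker()
model.eval()  # in eval mode dropout passes its input through unchanged
out = model(torch.randn(4, 8))
print(out.shape)
```

The only change relative to the failing snippet is the decorator; the body of the method is the same.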

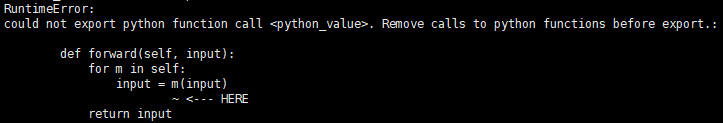

I have another question: when I use the sequential container, I get a confusing error message that points to a forward function. But that forward function doesn’t appear in my code. My PyTorch version is 1.0.0.

I think this answer might help.

But the point is that I didn’t write this forward function. It seems the function belongs to the sequential container.

How are you scripting the module?

As you can see in the linked example, the nn.Sequential module is wrapped inside a torch.jit.ScriptModule.
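As a rough illustration of wrapping an nn.Sequential inside a scripted module, here is a sketch that uses the newer torch.jit.script(module) entry point. This is an assumption about the setup: that entry point accepts nn.Module instances only in releases newer than 1.0.0, so the sketch may not apply to the version mentioned in this thread. The class name ChunkHead and the layer sizes are hypothetical.

```python
import torch
import torch.nn as nn


class ChunkHead(nn.Module):
    """Hypothetical wrapper; the name and sizes are illustrative only."""

    def __init__(self, in_dim: int = 10, hidden: int = 6, droprate: float = 0.5):
        super().__init__()
        # The container is a plain attribute of the wrapping module.
        self.chunk_layer = nn.Sequential(
            nn.Linear(in_dim, hidden),
            nn.Dropout(p=droprate),
            nn.Linear(hidden, 1),
        )

    def forward(self, z_in: torch.Tensor) -> torch.Tensor:
        # The container's own forward runs here, which is why a forward
        # function can show up in an error even if you never wrote one.
        return self.chunk_layer(z_in).squeeze(1)


eager = ChunkHead().eval()          # eval mode makes dropout deterministic
scripted = torch.jit.script(eager)  # compiles the wrapper and its submodules
x = torch.randn(4, 10)
same = torch.allclose(eager(x), scripted(x))
print(same)
```

Comparing the eager and scripted outputs in eval mode is a quick sanity check that the scripted container behaves like the original.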

I am using torch.jit.ScriptModule too. Mine looks like this:

class NER(torch.jit.ScriptModule):
    __constants__ = ['rnn_outdim', 'one_direction_dim', 'add_proj']

    def __init__(self, rnn,
                 w_num: int,
                 w_dim: int,
                 c_num: int,
                 c_dim: int,
                 y_dim: int,
                 y_num: int,
                 droprate: float):
        super(NER, self).__init__()
        ...
        if self.add_proj:
            ...
            self.chunk_layer = nn.Sequential(self.to_chunk, self.drop, self.to_chunk_proj, self.drop, self.chunk_weight)
            self.type_layer = nn.Sequential(self.to_type, self.drop, self.to_type_proj, self.drop, self.type_weight)
        else:
            ...
            self.chunk_layer = nn.Sequential(self.to_chunk, self.drop, self.chunk_weight)
            self.type_layer = nn.Sequential(self.to_type, self.drop, self.type_weight)