I was wondering: when `torch.load` returns the state_dict, does it load the stored weights directly into memory, or does it simply read the saved state_dict and return a copy of it?
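One way to test this is to load the same checkpoint twice and modify the first result in place. The sketch below is a check under a few assumptions: it uses a tiny `nn.Linear` as a stand-in for the VGG model, an `io.BytesIO` buffer instead of a file at `path`, and the `weights_only` argument available in recent PyTorch releases. If the second load still matches the original weights, the edits to the first result never reached the saved data.

```python
import io

import torch
import torch.nn as nn

# Hypothetical stand-in for the VGG model in the question.
model = nn.Linear(3, 2)

# Save a checkpoint to an in-memory buffer (stand-in for a file at `path`).
buffer = io.BytesIO()
torch.save({'state_dict': model.state_dict()}, buffer)

# First load: deserialize the saved bytes and modify the result in place.
buffer.seek(0)
chkpt = torch.load(buffer, weights_only=True)
chkpt['state_dict']['weight'] += 1.24

# Second load from the same saved bytes.
buffer.seek(0)
fresh = torch.load(buffer, weights_only=True)

# The second load holds the original values, unlike the edited first load.
print(torch.equal(fresh['state_dict']['weight'], model.weight.detach()))  # True
print(torch.equal(fresh['state_dict']['weight'], chkpt['state_dict']['weight']))  # False
```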

Basically, I want to load the weights of a saved model and modify them without altering the original weights.

I understand that I could create a replica of the weights by copying them from the state_dict, modifying the copy, and then loading it into the model.
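A minimal sketch of that copy-then-modify approach, assuming the checkpoint stores a plain state_dict under `'state_dict'` as in my example below; fresh `nn.Linear` modules are hypothetical stand-ins for both the saved model and `vgg()`. Cloning each tensor gives the replica its own storage, so edits to it cannot leak back into `chkpt['state_dict']`.

```python
import torch
import torch.nn as nn

# Hypothetical stand-ins: a checkpoint dict (as torch.load(path) might return)
# and a separate model instance to load the modified weights into.
chkpt = {'state_dict': nn.Linear(3, 2).state_dict()}
model = nn.Linear(3, 2)

# Clone every tensor so the replica does not share storage with the checkpoint.
replica_weights = {name: t.clone() for name, t in chkpt['state_dict'].items()}

# Modify only floating-point entries; integer buffers (e.g. BatchNorm's
# num_batches_tracked) cannot take an in-place float addition.
for t in replica_weights.values():
    if t.is_floating_point():
        t += 1.24

model.load_state_dict(replica_weights)

# The model received the shifted weights; the checkpoint kept the originals.
print(torch.allclose(model.weight, chkpt['state_dict']['weight'] + 1.24))  # True
print(torch.equal(model.weight, chkpt['state_dict']['weight']))  # False
```

`copy.deepcopy(chkpt['state_dict'])` should give the same independence in a single call.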

But is this the recommended way?

Is there any chance that `torch.load` behaves like `.detach()`, i.e. returns a reference to the weights, so that any changes I make to the returned state_dict are automatically reflected in the stored one?
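For reference, this is the `.detach()` behaviour I have in mind, contrasted with `.clone()`; the tensor `w` is a hypothetical example. `data_ptr()` returns the address of a tensor's first element, so equal pointers indicate shared memory.

```python
import torch

# Hypothetical parameter-like tensor.
w = torch.ones(3, requires_grad=True)

detached = w.detach()        # new tensor object, same underlying storage
cloned = w.detach().clone()  # new tensor object with its own storage

print(detached.data_ptr() == w.data_ptr())  # True
print(cloned.data_ptr() == w.data_ptr())    # False

# An in-place change through the detached view is visible through w,
# while the clone keeps its old values.
detached += 1.24
print(w)
print(cloned)
```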

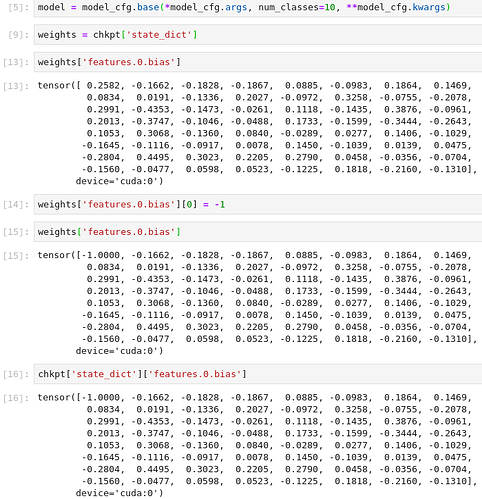

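For instance:

```python
import torch

# `path` and `vgg()` are the checkpoint path and model from my own code.
chkpt = torch.load(path)
model = vgg()

replica_weights = chkpt['state_dict']

# A state_dict is a dict of tensors, so `replica_weights += 1.24` raises a
# TypeError; modify each floating-point tensor in place instead.
for tensor in replica_weights.values():
    if tensor.is_floating_point():
        tensor += 1.24

model.load_state_dict(replica_weights)
```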

In the above example, will the changes to `replica_weights` show up in `chkpt['state_dict']`? In other words, do they share the same memory? Is `replica_weights` a copy of `chkpt['state_dict']`, or a reference pointing to the same memory?
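A minimal check for this last question, using a small hypothetical dict in place of the loaded checkpoint so that no file is involved. My understanding is that plain assignment in Python only binds a second name to the same object, and that `+=` on a tensor modifies it in place; the sketch tests both.

```python
import torch

# Hypothetical stand-in for the dict returned by torch.load(path).
chkpt = {'state_dict': {'weight': torch.zeros(2, 2)}}

# Plain assignment binds a second name to the same dict; nothing is copied.
replica_weights = chkpt['state_dict']
print(replica_weights is chkpt['state_dict'])  # True

# An in-place update through one name is visible through the other.
replica_weights['weight'] += 1.24
print(torch.allclose(chkpt['state_dict']['weight'], torch.full((2, 2), 1.24)))  # True
```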