Hi everyone! I have found something in PyTorch’s documentation that challenges my view on the computation that multi-layer RNNs are supposed to do. I would greatly appreciate it if you could correct me if I don’t understand it properly.

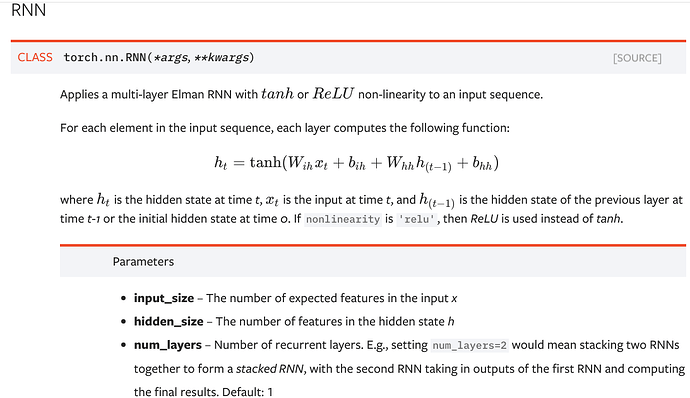

The RNN documentation says that each layer receives the input x_t and h_(t-1), and it describes h_(t-1) as “the hidden state of the previous layer at time t-1”.

I understand that, for the first layer, x_t is the original input, and for the rest, x_t is the current hidden state of the previous layer.

However, regarding h_(t-1), isn’t it a bit odd for a layer to receive the previous hidden state of the previous layer? Shouldn’t it receive its own hidden state from time t-1? Also, this definition does not seem to be compatible with single-layer RNNs, where there is no previous layer at all.

From SimonW’s reply (#2) in the thread “What is num_layers in RNN module?”, I understand that each layer uses its own previous hidden state, not the previous hidden state of the previous layer.
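To make my reading concrete, here is a minimal pure-Python sketch of the recurrence as I understand it. It is not PyTorch's implementation: the function name `run_stacked_rnn`, the scalar weights, and the `(w_ih, w_hh, b)` tuple layout are my own assumptions for illustration. Each layer takes the current output of the layer below as its input and its own hidden state from time t-1.

```python
import math

def run_stacked_rnn(inputs, weights, h0=None):
    """Toy multi-layer tanh RNN with scalar states (illustrative only).

    inputs:  list of scalar inputs x_1, ..., x_T
    weights: one (w_ih, w_hh, b) tuple per layer (hypothetical layout)
    h0:      optional list of initial hidden states, one per layer
    Returns history, where history[t][l] is the hidden state of layer l at step t.
    """
    num_layers = len(weights)
    h_prev = list(h0) if h0 is not None else [0.0] * num_layers
    history = []
    for x_t in inputs:
        h_curr = []
        layer_input = x_t  # the first layer sees the original input
        for l, (w_ih, w_hh, b) in enumerate(weights):
            # Layer l combines its input at time t with ITS OWN state from t-1.
            h = math.tanh(w_ih * layer_input + w_hh * h_prev[l] + b)
            h_curr.append(h)
            layer_input = h  # the next layer sees this layer's current state
        history.append(h_curr)
        h_prev = h_curr
    return history
```

With a single `(w_ih, w_hh, b)` tuple this reduces to an ordinary single-layer RNN, which is the case the documentation's wording seems unable to cover if h_(t-1) really came from a previous layer.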

Many thanks in advance.