Hi,

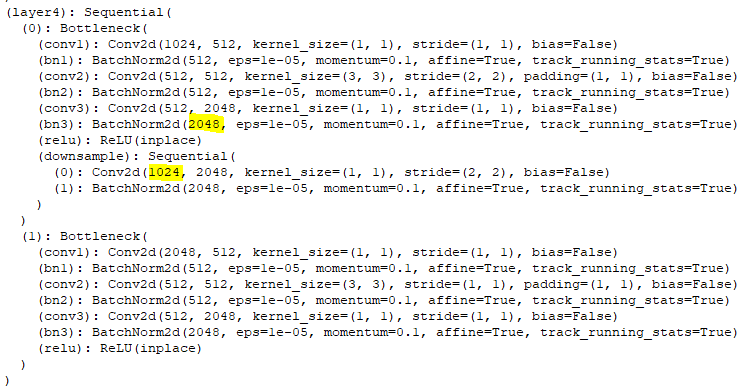

The picture is a snippet of the resnet18 structure. I got confused about the dimensions. I thought the input size of a layer should be the same as the output size of the previous layer. Shouldn't those highlighted numbers have the same value?

You should take a look at the logic of the forward function. The printed structure of the layers does not represent the flow of tensors.
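To make that point concrete, here is a minimal sketch. `TinyResidual` and its layer sizes are made up for illustration and are not part of any ResNet implementation. The printout lists `main` and then `shortcut`, yet `shortcut` never receives the output of `main`:

```python
import torch
import torch.nn as nn


class TinyResidual(nn.Module):
    """Hypothetical toy module: two branches that both read the same input."""

    def __init__(self):
        super().__init__()
        # Registration order decides what print(model) shows.
        self.main = nn.Conv2d(4, 8, kernel_size=3, padding=1, bias=False)
        self.shortcut = nn.Conv2d(4, 8, kernel_size=1, bias=False)

    def forward(self, x):
        # Both branches consume x; shortcut does NOT consume main's output.
        return self.main(x) + self.shortcut(x)


model = TinyResidual()
print(model)  # lists main first, then shortcut

x = torch.randn(1, 4, 16, 16)
out = model(x)
print(out.shape)  # torch.Size([1, 8, 16, 16])
```

Reading only the printout, `main` ends with 8 channels while `shortcut` starts with 4, which looks like a mismatch. The `forward` method shows why it is not one.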


This is the forward path:

```python
def forward(self, x):
    x = self.conv1(x)
    x = self.bn1(x)
    x = self.relu(x)
    x = self.maxpool(x)
    x = self.layer1(x)
    x = self.layer2(x)
    x = self.layer3(x)
    x = self.layer4(x)
    x = self.avgpool(x)
    x = x.view(x.size(0), -1)
    x = self.fc(x)
    return x
```

It seems the snippet (layer4) will run as it is.

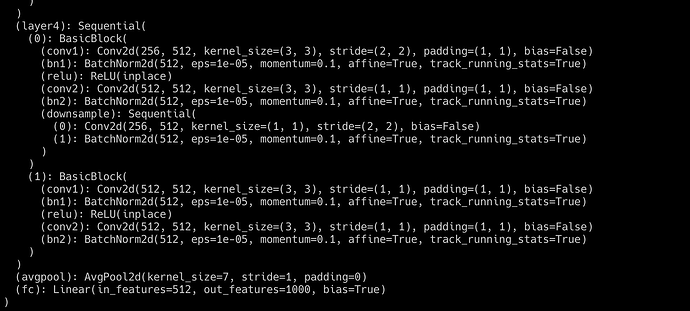

This is my resnet18 output. Did you use the resnet18 from torchvision or some other implementation?

I took it from here:

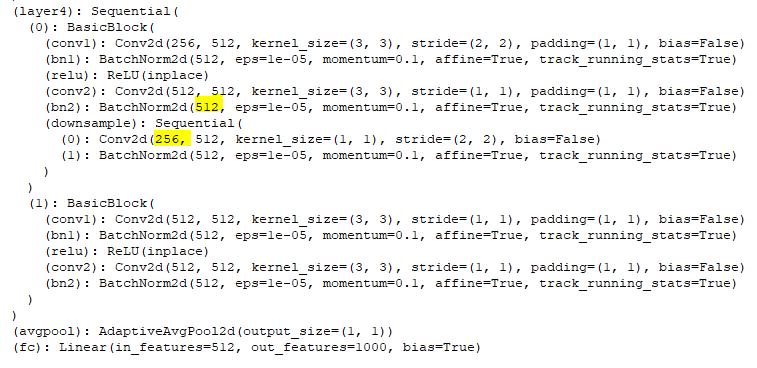

Apparently, I mistakenly initialized the ResNet with Bottleneck instead of BasicBlock. However, the problem is still there:

As @chenglu said, the forward logic might differ from the order of the stored modules.

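If you look at this line of code, you'll see that self.downsample is applied to x, the input of the block, and not to the output of self.bn2. That is why its input channel count matches the block input rather than the layer printed just before it.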
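A simplified sketch of such a residual block may help. `BasicBlockSketch` is a made-up name and the code is only loosely modeled on torchvision's BasicBlock. The 256 and 512 channel counts and the 14x14 input are assumptions chosen to resemble the first block of layer4, not values read from the screenshots:

```python
import torch
import torch.nn as nn


class BasicBlockSketch(nn.Module):
    """Simplified residual block; an illustration, not the torchvision source."""

    def __init__(self, in_channels, out_channels, stride):
        super().__init__()
        self.conv1 = nn.Conv2d(in_channels, out_channels, 3,
                               stride=stride, padding=1, bias=False)
        self.bn1 = nn.BatchNorm2d(out_channels)
        self.relu = nn.ReLU()
        self.conv2 = nn.Conv2d(out_channels, out_channels, 3,
                               padding=1, bias=False)
        self.bn2 = nn.BatchNorm2d(out_channels)
        # The shortcut projects the block INPUT, so it takes in_channels,
        # even though it is printed right after bn2 (out_channels).
        self.downsample = nn.Sequential(
            nn.Conv2d(in_channels, out_channels, 1, stride=stride, bias=False),
            nn.BatchNorm2d(out_channels),
        )

    def forward(self, x):
        identity = self.downsample(x)             # shortcut branch reads x
        out = self.relu(self.bn1(self.conv1(x)))  # main branch also reads x
        out = self.bn2(self.conv2(out))
        return self.relu(out + identity)          # branches meet here


block = BasicBlockSketch(in_channels=256, out_channels=512, stride=2).eval()
x = torch.randn(1, 256, 14, 14)
with torch.no_grad():
    out = block(x)
print(out.shape)  # torch.Size([1, 512, 7, 7])
```

In the printed structure, bn2 (512 features) is followed by a downsample convolution that expects 256 input channels. The two are never chained, so the numbers do not need to match.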
