Hi all,

I’m trying to re-implement the network this paper uses to classify SVHN data:

‘Dropout: A Simple Way to Prevent Neural Networks from Overfitting’ (http://jmlr.org/papers/volume15/srivastava14a.old/srivastava14a.pdf)

I have two questions about this paper.

-

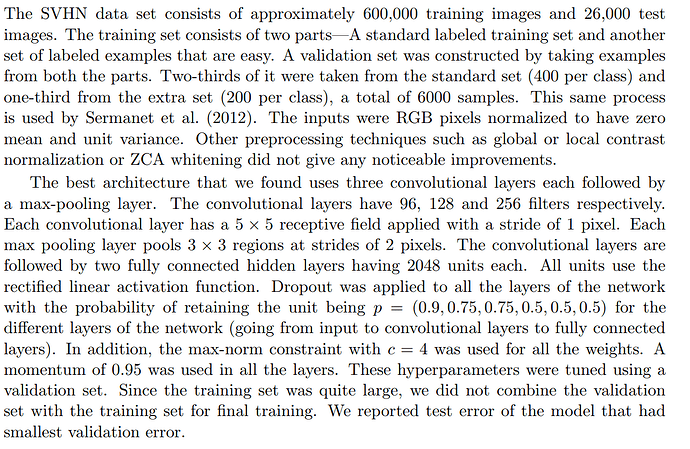

This paper said they use 3 conv layer and 2 fc layer. but the number of dropout p is 6. (0.9, 0.75, 0.75, 0.5, 0.5, 0.5).

Can anyone explain about this ? -
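
My current reading, which is only an assumption I'd like someone to confirm: the paper defines p as the probability of *retaining* a unit, and dropout is also applied to the input, so the six values would map to input, conv1, conv2, conv3, fc1, fc2. Under that assumption, a minimal PyTorch sketch could look like this. The filter counts, 5x5 kernels, 3x3/stride-2 pooling, ReLU and 2048 hidden units are my own guesses, not checked against the paper, and the helper `dropout_from_retain` is hypothetical. Since `nn.Dropout(p)` takes the probability of *zeroing* a unit, each paper value is converted with `1 - p`.

```python
import torch
import torch.nn as nn

# The paper's six values, read as probabilities of RETAINING a unit.
# Assumed order: input, conv1, conv2, conv3, fc1, fc2.
RETAIN = (0.9, 0.75, 0.75, 0.5, 0.5, 0.5)


def dropout_from_retain(p_retain: float) -> nn.Dropout:
    # nn.Dropout's p is the probability of ZEROING a unit, hence 1 - p_retain.
    return nn.Dropout(p=1.0 - p_retain)


class SVHNNet(nn.Module):
    # Hypothetical sizes: 96/128/256 filters of 5x5, 3x3 max-pool with stride 2,
    # ReLU, two hidden FC layers of 2048 units. Check these against the paper.
    def __init__(self, num_classes: int = 10):
        super().__init__()
        self.features = nn.Sequential(
            dropout_from_retain(RETAIN[0]),  # dropout 1: on the input image itself
            nn.Conv2d(3, 96, 5, padding=2), nn.ReLU(), nn.MaxPool2d(3, 2),
            dropout_from_retain(RETAIN[1]),  # dropout 2: after conv block 1
            nn.Conv2d(96, 128, 5, padding=2), nn.ReLU(), nn.MaxPool2d(3, 2),
            dropout_from_retain(RETAIN[2]),  # dropout 3: after conv block 2
            nn.Conv2d(128, 256, 5, padding=2), nn.ReLU(), nn.MaxPool2d(3, 2),
            dropout_from_retain(RETAIN[3]),  # dropout 4: after conv block 3
        )
        # A 32x32 SVHN image shrinks 32 -> 15 -> 7 -> 3 through the three pools.
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(256 * 3 * 3, 2048), nn.ReLU(),
            dropout_from_retain(RETAIN[4]),  # dropout 5: after FC 1
            nn.Linear(2048, 2048), nn.ReLU(),
            dropout_from_retain(RETAIN[5]),  # dropout 6: after FC 2
            nn.Linear(2048, num_classes),  # output layer, no dropout
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))
```

Calling `model.eval()` disables all six dropout layers at test time. PyTorch's `nn.Dropout` scales the kept activations by 1/(1-p) during training, so unlike the paper's description of scaling weights by p at test time, no extra rescaling is needed at inference.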

Second, what is the max-norm constraint, and how can I use it in PyTorch?
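
As far as I understand the paper, max-norm regularization constrains the incoming weight vector w of each hidden unit to satisfy ‖w‖₂ ≤ c; whenever an update pushes w outside that ball, it is projected back onto the ball's surface by rescaling. Here is a minimal sketch of how I assume this could be done in PyTorch, using `Tensor.renorm_` after every `optimizer.step()`. The helper name `apply_max_norm` and the default `max_norm=3.0` are my own placeholders, not values taken from the paper.

```python
import torch
import torch.nn as nn


def apply_max_norm(model: nn.Module, max_norm: float = 3.0) -> None:
    """Project each unit's incoming weight vector onto the L2 ball of radius max_norm.

    nn.Linear weights have shape (out_features, in_features): row i holds the
    incoming weights of output unit i. nn.Conv2d weights have shape
    (out_channels, in_channels, kH, kW): slice i is the whole i-th filter.
    In both cases dim=0 indexes the units being constrained.
    """
    with torch.no_grad():  # the projection must not be tracked by autograd
        for module in model.modules():
            if isinstance(module, (nn.Linear, nn.Conv2d)):
                # renorm_ rescales only the slices whose 2-norm exceeds max_norm
                module.weight.renorm_(2, 0, max_norm)


# Usage: project right after every optimizer step.
model = nn.Sequential(nn.Linear(20, 50), nn.ReLU(), nn.Linear(50, 10))
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
x, y = torch.randn(8, 20), torch.randint(0, 10, (8,))

optimizer.zero_grad()
loss = nn.functional.cross_entropy(model(x), y)
loss.backward()
optimizer.step()
apply_max_norm(model, max_norm=3.0)
```

Running the projection after each step, rather than adding a penalty term to the loss, keeps every weight vector inside the ball at every iteration, which is how I read the paper's description of the constraint.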