My code is in a public GitHub repo, Johnmakuta/NeuralTrauma. It is a project to simulate how the performance of a Spiking Neural Network (SNN) degrades under a hardware attack.

I have two RTX 3090 Tis connected by an NVLink bridge. When I run the model evaluation block in my eval_net_Rleaky.ipynb notebook, I see some odd behavior: the code seems to run on one GPU while using the memory of the other.

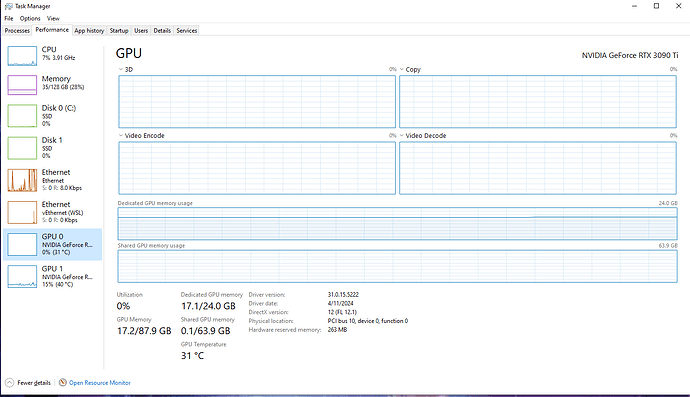

In Task Manager, one GPU sits consistently at 14-16% utilization but shows almost no VRAM usage. The other GPU shows 0% utilization, but its VRAM usage keeps increasing as the code runs.
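One way to cross-check these Task Manager readings is to poll `nvidia-smi`. Task Manager's default GPU graphs may track the 3D engine rather than CUDA/compute work, and Task Manager's GPU numbering may not match CUDA's. The sketch below is a minimal, hypothetical helper (`parse_gpu_csv` and `query_gpus` are illustrative names, not part of the repo). It assumes `nvidia-smi` is on the PATH and uses its standard `--query-gpu` fields to report per-GPU utilization and memory alongside the PCI bus ID, so each row can be matched to a physical card.

```python
import shutil
import subprocess

# Standard nvidia-smi --query-gpu field names, in the order they are requested.
QUERY_FIELDS = ["index", "pci.bus_id", "name", "utilization.gpu",
                "memory.used", "memory.total"]
NUMERIC_FIELDS = ("index", "utilization.gpu", "memory.used", "memory.total")


def _to_int(value):
    """Convert a numeric field; nvidia-smi may print '[N/A]' for unsupported ones."""
    try:
        return int(value)
    except ValueError:
        return None


def parse_gpu_csv(text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        if not line.strip():
            continue
        row = dict(zip(QUERY_FIELDS, (v.strip() for v in line.split(","))))
        # `nounits` strips '%' and 'MiB', so these fields are plain integers.
        for key in NUMERIC_FIELDS:
            row[key] = _to_int(row[key])
        gpus.append(row)
    return gpus


def query_gpus():
    """Run nvidia-smi once; return [] if it is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    cmd = ["nvidia-smi", "--query-gpu=" + ",".join(QUERY_FIELDS),
           "--format=csv,noheader,nounits"]
    try:
        out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    except (subprocess.CalledProcessError, OSError):
        return []
    return parse_gpu_csv(out)


if __name__ == "__main__":
    for gpu in query_gpus():
        print(f"GPU {gpu['index']} ({gpu['pci.bus_id']}, {gpu['name']}): "
              f"{gpu['utilization.gpu']}% util, "
              f"{gpu['memory.used']}/{gpu['memory.total']} MiB")
```

Running this while the evaluation block executes should show whether utilization and memory growth land on the same card. `nvidia-smi` numbers GPUs in PCI bus order, while CUDA by default orders devices fastest-first. Setting the environment variable `CUDA_DEVICE_ORDER=PCI_BUS_ID` before starting the notebook makes CUDA's device indices match `nvidia-smi`'s.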

Is this happening because of NVLink? I haven't really looked into it since building the PC, but I remember reading that with NVLink, a single GPU can use the other GPU's VRAM as its own. I thought that behavior had to be set up explicitly, though.