I am trying to find the most efficient way to define a custom connectivity scheme between layers.

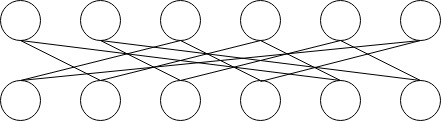

For example, in the figure above there are n input neurons, and each one connects to every third neuron ±3 from itself and fans out k steps.
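To make the pattern concrete, here is a minimal sketch that enumerates the connections under one possible reading of the description: input `i` connects to outputs `i + step * stride` for `step` in `-k..k`, with out-of-range targets dropped. The function name `strided_connections` and its parameters are hypothetical, chosen only for illustration.

```python
def strided_connections(n_in, n_out, stride=3, k=2):
    """Return (output_index, input_index) pairs for a strided local fan-out.

    Assumed reading of the pattern: input i reaches outputs i + step * stride
    for step = -k, ..., k. Targets outside [0, n_out) are skipped.
    """
    pairs = []
    for i in range(n_in):
        for step in range(-k, k + 1):
            j = i + step * stride
            if 0 <= j < n_out:
                pairs.append((j, i))
    return pairs


# Example: 10 inputs, 10 outputs, one step of stride 3 on each side.
pairs = strided_connections(n_in=10, n_out=10, stride=3, k=1)
print(len(pairs), "of", 10 * 10, "possible connections are used")
```

The list of index pairs grows with the number of actual connections rather than with `n_in * n_out`, which is what makes the sparsity concern visible.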

Previous examples of how to do this involved defining a mask and zeroing the masked weights on every forward pass. My hesitation with this method is that the connectivity above could end up being very sparse, and generating the connections on each forward pass could take up a lot of memory for large networks.
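For reference, the masking approach can be sketched roughly as follows, assuming the mask is built once and stored as a buffer instead of being regenerated on each forward pass. `MaskedLinear` is a hypothetical name, not an existing PyTorch class, and the mask here is an arbitrary toy pattern. The mask is still a dense `out_features x in_features` tensor, so this does not address the memory concern for very sparse layers.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class MaskedLinear(nn.Module):
    """Linear layer whose weight is multiplied by a fixed 0/1 mask (illustrative)."""

    def __init__(self, in_features, out_features, mask):
        super().__init__()
        self.linear = nn.Linear(in_features, out_features)
        # A buffer follows .to(device) and is saved in state_dict, but is not trained.
        self.register_buffer("mask", mask.to(self.linear.weight.dtype))

    def forward(self, x):
        # Masked entries contribute nothing to the output and receive zero gradient.
        return F.linear(x, self.linear.weight * self.mask, self.linear.bias)


torch.manual_seed(0)
mask = torch.zeros(4, 6)  # shape: (out_features, in_features)
mask[0, 0] = mask[1, 3] = mask[2, 2] = mask[3, 5] = 1.0  # toy connectivity
layer = MaskedLinear(6, 4, mask)

out = layer(torch.randn(2, 6))
out.sum().backward()
```

Because the mask is applied inside `forward`, the gradient with respect to every masked weight is exactly zero, so no backward hook is needed in this sketch.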

Is there a way to programmatically and efficiently define custom connections between FC layers?

A similar question was asked earlier by @jukiewiczm: "Gradient masking in register_backward_hook for custom connectivity - efficient implementation".