Hi, PyTorch community!

This is my first post, so please bear with me. I have been working with Network Simulator 3 (NS-3), a C++ code base built with CMake. I am running `import torch` through an embedded Python interpreter as follows:

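```cpp
#include <Python.h>

Py_Initialize();                                  // start the embedded interpreter
PyGILState_STATE gilState = PyGILState_Ensure(); // Get the GIL
PyRun_SimpleString("import torch");
PyRun_SimpleString("print(torch.__version__)");   // a bare expression prints nothing, so use print()
PyGILState_Release(gilState);                     // Release the GIL
```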

The code above lives in a file called `tcp-madras.cc`, from which CMake builds the shared library `libinternet.so`.

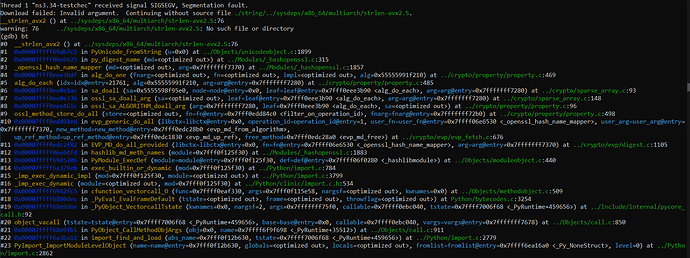

When I run the executable whose `main()` links against `libinternet.so`, the program crashes with a segmentation fault. I debugged it with gdb and found the following:

Note that `import torch` works fine when I run it from a Python interpreter in the terminal.
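Since `import torch` works in a standalone interpreter but crashes inside the NS-3 process, one thing worth comparing is the set of shared libraries each process has loaded; a host application might, for example, already have a different `libstdc++` or a second `libpython` mapped in before `torch` is imported. Below is a minimal sketch for listing them from inside the C++ program, assuming Linux/glibc (which WSL 2 provides) and its `dl_iterate_phdr()` function. The helper names `loaded_shared_objects` and `filter_objects` are hypothetical.

```cpp
#include <cstddef>
#include <link.h>      // dl_iterate_phdr (glibc/Linux only)
#include <string>
#include <vector>

// Callback for dl_iterate_phdr: record the path of every loaded object.
static int collect_object(struct dl_phdr_info* info, size_t, void* data) {
    auto* names = static_cast<std::vector<std::string>*>(data);
    // The main executable is usually reported with an empty name; skip it.
    if (info->dlpi_name != nullptr && info->dlpi_name[0] != '\0') {
        names->emplace_back(info->dlpi_name);
    }
    return 0;  // returning 0 keeps the iteration going
}

// Hypothetical helper: paths of all shared objects currently mapped into this process.
std::vector<std::string> loaded_shared_objects() {
    std::vector<std::string> names;
    dl_iterate_phdr(collect_object, &names);
    return names;
}

// Hypothetical helper: keep only paths containing `needle`,
// e.g. "libpython", "libstdc++" or "libtorch".
std::vector<std::string> filter_objects(const std::vector<std::string>& all,
                                        const std::string& needle) {
    std::vector<std::string> out;
    for (const auto& path : all) {
        if (path.find(needle) != std::string::npos) out.push_back(path);
    }
    return out;
}
```

Calling `filter_objects(loaded_shared_objects(), "libpython")` (and likewise for `"libstdc++"`) just before `Py_Initialize()` and again right before `import torch`, then printing the results, shows which copies the NS-3 process actually uses. For the standalone interpreter, the same information can be read from `/proc/<pid>/maps` of a running `python3` process. This only helps narrow down the cause; it does not change how the libraries are loaded.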

I would be happy if someone could point me in the right direction to resolve this issue.

Environment:

- PyTorch version: 2.5.0+cu124
- Python version: 3.12.3
- OS: Windows 10
- WSL version: 2.3.24.0
- CUDA/cuDNN version: 12.6.77
- GPU: RTX 4060