I’m running gradcheck on a model, and seem to have traced a failing gradient check to the embeddings I was plugging in.

Here is a minimal example of my code… any thoughts welcome.

import torch.nn as nn

from torch.nn.utils.rnn import pack_padded_sequence, pad_packed_sequence

from torch.autograd.gradcheck import gradcheck

from torch.autograd import Variable

import torch

def testGradCheckEmbeddingBasic(self):

seqs = ['ghatmasala', 'nicela', 'c-pakodas']

e = nn.Embedding(10, 3, sparse=False).double()

indices = Variable(torch.LongTensor([[1], [4]]))

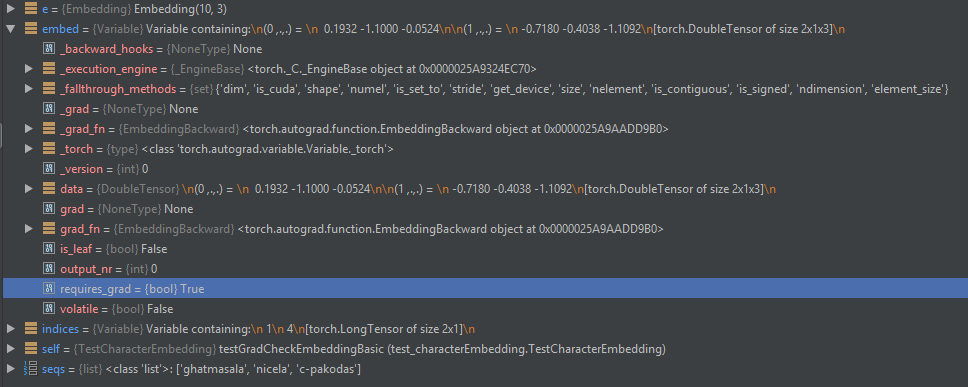

embed = e(indices)

print(embed)

input = (embed, )

model = nn.Linear(3, 3).double()

test = gradcheck(model, input, eps=1e-6, atol=1e-4)

The printed output and the error I get are:

Variable containing:
(0 ,.,.) =
 -1.7624  0.1646 -0.5719

(1 ,.,.) =
 -0.5188 -0.2282  1.3176
[torch.DoubleTensor of size 2x1x3]

Ran 1 test in 0.232s

FAILED (errors=1)

Error
Traceback (most recent call last):
  File "C:\Users\ZEBEAST\Anaconda3\envs\pytorch\lib\unittest\case.py", line 59, in testPartExecutor
    yield
  File "C:\Users\ZEBEAST\Anaconda3\envs\pytorch\lib\unittest\case.py", line 605, in run
    testMethod()
  File "C:\Users\ZEBEAST\PycharmProjects\sauron\tests\test_characterEmbedding.py", line 51, in testGradCheckEmbeddingBasic
    test = gradcheck(model, input, eps=1e-6, atol=1e-4)
  File "C:\Users\ZEBEAST\Anaconda3\envs\pytorch\lib\site-packages\torch\autograd\gradcheck.py", line 181, in gradcheck
    return fail_test('for output no. %d,\n numerical:%s\nanalytical:%s\n' % (j, numerical, analytical))
  File "C:\Users\ZEBEAST\Anaconda3\envs\pytorch\lib\site-packages\torch\autograd\gradcheck.py", line 166, in fail_test
    raise RuntimeError(msg)
RuntimeError: for output no. 0,
 numerical:(
 0.3081 -0.1945 -0.0135  0.0000  0.0000  0.0000
-0.0716  0.3882  0.2380  0.0000  0.0000  0.0000
 0.3901 -0.1542  0.5559  0.0000  0.0000  0.0000
 0.0000  0.0000  0.0000  0.3081 -0.1945 -0.0135
 0.0000  0.0000  0.0000 -0.0716  0.3882  0.2380
 0.0000  0.0000  0.0000  0.3901 -0.1542  0.5559
[torch.FloatTensor of size 6x6]
,)
analytical:(
 0  0  0  0  0  0
 0  0  0  0  0  0
 0  0  0  0  0  0
 0  0  0  0  0  0
 0  0  0  0  0  0
 0  0  0  0  0  0
[torch.FloatTensor of size 6x6]
,)
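
One possible explanation, offered as an assumption rather than a confirmed diagnosis for this PyTorch version: the tensor passed to gradcheck is the output of the embedding layer, so it is not a leaf of the autograd graph. If gradcheck reads the analytical gradient from the input's `.grad` field, which is only populated for leaf tensors, that would produce the all-zero analytical Jacobian shown above. The sketch below, written against the current tensor API (no `Variable`), tries two variants: checking the linear layer on a detached leaf copy of the embeddings, and checking the lookup itself with respect to a copy of the embedding weights. The names `linear_on_embeddings`, `ok_linear`, and `ok_embedding` are hypothetical and only for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.autograd import gradcheck

torch.manual_seed(0)

# Same shapes as in the example above, in double precision for gradcheck.
e = nn.Embedding(10, 3).double()
model = nn.Linear(3, 3).double()
indices = torch.tensor([[1], [4]])  # int64 tensor of shape 2x1

# Variant 1: check the linear layer on a leaf tensor.
# detach() cuts the link to the embedding layer, and requires_grad_()
# turns the copy into a leaf that accumulates its own .grad.
embed_leaf = e(indices).detach().requires_grad_(True)
ok_linear = gradcheck(model, (embed_leaf,), eps=1e-6, atol=1e-4)

# Variant 2: check embedding lookup + linear w.r.t. the embedding weights.
# The weights are copied into a fresh leaf so the check does not touch
# the parameters of `e` itself.
weight_leaf = e.weight.detach().clone().requires_grad_(True)

def linear_on_embeddings(weight):
    # Hypothetical helper: functional lookup followed by the linear layer.
    return model(F.embedding(indices, weight))

ok_embedding = gradcheck(linear_on_embeddings, (weight_leaf,), eps=1e-6, atol=1e-4)

print(ok_linear, ok_embedding)
```

Variant 1 only tests the linear layer, since the detached copy no longer depends on the embedding weights. Variant 2 is the one that exercises the embedding's backward pass.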