Hello,

I’m a teacher who has been studying computer vision for a few months. I was very excited when I managed to train my first object detection model with Detectron2’s Faster R-CNN. And it works like a charm! Super cool! Thanks, PyTorch.

But the problem is that, to get the best accuracy, I used the largest model in the model zoo.

Now I want to deploy it as something people can use to make their jobs easier. But the model is so large that it takes ~10 seconds to run inference on a single image on my CPU, an Intel i7-8750H.

That makes it really difficult to deploy this model, even on a regular cloud server. I would need either GPU servers or latest-generation CPU servers, which are really expensive, and I’m not sure I could cover the server costs month after month.

I need to make it smaller and faster for deployment.
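To check whether a change actually makes inference faster, it helps to time the model the same way before and after. Below is a minimal sketch of such a timing helper; `measure_cpu_latency` is a hypothetical name, and the small `nn.Sequential` network is only a stand-in for the real detector. For a Detectron2 `GeneralizedRCNN`, the input would be a list of dicts with an `"image"` tensor rather than a plain tensor.

```python
import time

import torch
from torch import nn


def measure_cpu_latency(model: nn.Module, inputs, warmup: int = 2, runs: int = 5) -> float:
    """Return the mean forward-pass time in seconds (hypothetical helper)."""
    model.eval()  # switch to inference behaviour (note: mutates the model)
    with torch.inference_mode():
        # Warm-up passes so one-time setup costs are not counted.
        for _ in range(warmup):
            model(inputs)
        start = time.perf_counter()
        for _ in range(runs):
            model(inputs)
        elapsed = time.perf_counter() - start
    return elapsed / runs


if __name__ == "__main__":
    # Toy stand-in network; the real Faster R-CNN model is not used here.
    toy = nn.Sequential(
        nn.Conv2d(3, 16, 3, padding=1),
        nn.ReLU(),
        nn.Conv2d(16, 16, 3, padding=1),
    )
    image = torch.randn(1, 3, 224, 224)
    print(f"mean latency: {measure_cpu_latency(toy, image):.4f} s")
```

Running the same helper on the original and on a modified model gives a like-for-like comparison on the same machine.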

So, yesterday I found out that there’s something called model pruning!! I was very excited (I’m not a computer scientist or a data scientist, so don’t blame me (((: )

I read the official PyTorch pruning documentation, but it’s really hard for me to understand.

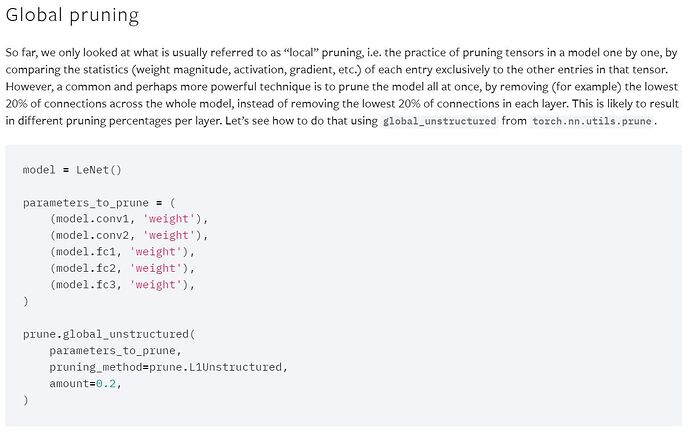

Global pruning seems to be one of the easiest methods to use.
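For reference, global pruning in `torch.nn.utils.prune` comes down to one call to `prune.global_unstructured`, which takes a list of `(module, parameter_name)` pairs and zeroes the smallest-magnitude weights ranked across all of them together. A minimal sketch on a small toy network (not the Faster R-CNN model):

```python
import torch
import torch.nn.utils.prune as prune
from torch import nn

# Small toy network standing in for a real model.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3),
    nn.ReLU(),
    nn.Conv2d(8, 8, kernel_size=3),
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(8 * 4 * 4, 10),  # sized for 8x8 input images
)

# Each entry is (module, name of the parameter inside that module).
parameters_to_prune = (
    (model[0], "weight"),
    (model[2], "weight"),
    (model[5], "weight"),
)

# Zero out the 20% of these weights with the smallest absolute value,
# ranked across all listed layers together (that is what "global" means).
prune.global_unstructured(
    parameters_to_prune,
    pruning_method=prune.L1Unstructured,
    amount=0.2,
)

total = sum(m.weight.nelement() for m, _ in parameters_to_prune)
zeros = sum(int(torch.sum(m.weight == 0)) for m, _ in parameters_to_prune)
print(f"global sparsity: {zeros / total:.2%}")
```

Because the ranking is global, individual layers can end up with very different sparsity even though the overall fraction is 20%.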

But the problem is that I have no idea which parameters I should pass to it.
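One common choice (an assumption, not the only option) is to prune the `weight` of every `nn.Conv2d` and `nn.Linear` layer. The sketch below collects them generically with `model.named_modules()`, so it could be pointed at a whole Detectron2 `GeneralizedRCNN` or, via `prefix`, at one of its top-level submodules such as `backbone`, `proposal_generator` or `roi_heads`. Detectron2’s own `Conv2d` wrapper subclasses `torch.nn.Conv2d`, so the `isinstance` check should catch it. In Detectron2 the model would typically come from `build_model(cfg)` followed by `DetectionCheckpointer(model).load("model_final.pth")`; that part is not shown here. The helpers `collect_prunable` and `prune_globally` and the `ToyDetector` class are hypothetical.

```python
import torch
import torch.nn.utils.prune as prune
from torch import nn


def _under(name: str, prefix: str) -> bool:
    """True if module `name` is `prefix` itself or nested inside it."""
    return not prefix or name == prefix or name.startswith(prefix + ".")


def collect_prunable(model: nn.Module, prefix: str = ""):
    """Return (module, "weight") pairs for every Conv2d/Linear under `prefix`."""
    return [
        (module, "weight")
        for name, module in model.named_modules()
        if _under(name, prefix) and isinstance(module, (nn.Conv2d, nn.Linear))
    ]


def prune_globally(model: nn.Module, amount: float, prefix: str = "") -> float:
    """Globally L1-prune the selected weights, make it permanent, return sparsity."""
    pairs = collect_prunable(model, prefix)
    prune.global_unstructured(pairs, pruning_method=prune.L1Unstructured, amount=amount)
    total = zeros = 0
    for module, name in pairs:
        # Fold weight_orig * weight_mask back into a plain `weight` parameter,
        # so the state_dict has the usual keys again.
        prune.remove(module, name)
        weight = getattr(module, name)
        total += weight.nelement()
        zeros += int(torch.sum(weight == 0))
    return zeros / total


class ToyDetector(nn.Module):
    """Toy model with a `backbone` and a `head`, loosely mimicking a detector."""

    def __init__(self):
        super().__init__()
        self.backbone = nn.Sequential(nn.Conv2d(3, 8, 3), nn.ReLU(), nn.Conv2d(8, 8, 3))
        self.head = nn.Linear(8, 4)


if __name__ == "__main__":
    toy = ToyDetector()
    sparsity = prune_globally(toy, amount=0.3, prefix="backbone")
    print(f"backbone sparsity: {sparsity:.2%}")
```

Note that unstructured pruning only sets weights to zero; the tensors keep their shape, so with standard dense kernels on a CPU it usually does not reduce inference time by itself.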

As I said, I used the Faster R-CNN X-101 model. I have it saved as “model_final.pth”. It uses Base-RCNN-FPN.yaml, and its meta architecture is “GeneralizedRCNN”.

It’s probably an easy thing to configure, but since this isn’t my field, it’s very hard for someone like me.

I’d be more than happy if you could walk me through this step by step.

Just in case, I’m sharing the cfg.yaml I used to train the model (I saved it with the “dump” method of Detectron2’s config class). Here’s the Drive link.

Thank you very much in advance.