I’m working on a classification problem with 500 classes. My network has three fully connected layers followed by an LSTM layer, and I use nn.CrossEntropyLoss() as the loss function. To tackle class imbalance, I compute class weights with sklearn’s class_weight utility and use them when initialising the loss:

import numpy as np
from sklearn.utils import class_weight

class_weights = class_weight.compute_class_weight(class_weight='balanced', classes=np.unique(y_org), y=y_org)
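For context, here is a minimal sketch of how weights computed this way are typically handed to the loss. The toy labels, the 3-class setup, and the names `weights`, `criterion`, `logits`, and `targets` are assumptions for illustration only, not the actual training code:

```python
import numpy as np
import torch
import torch.nn as nn
from sklearn.utils import class_weight

# Toy imbalanced labels standing in for y_org (assumption: 3 classes, not 500).
y_org = np.array([0, 0, 0, 0, 0, 0, 1, 1, 1, 2])

# 'balanced' gives each class n_samples / (n_classes * class_count),
# so rarer classes receive larger weights.
class_weights = class_weight.compute_class_weight(
    class_weight='balanced', classes=np.unique(y_org), y=y_org
)

# CrossEntropyLoss expects a 1-D float tensor with one weight per class.
# This only lines up if every class index appears at least once in y_org.
weights = torch.tensor(class_weights, dtype=torch.float32)
criterion = nn.CrossEntropyLoss(weight=weights)

# Dummy logits for a batch of 4 samples, only to show the call.
logits = torch.randn(4, len(weights))
targets = torch.tensor([0, 1, 2, 0])
loss = criterion(logits, targets)
```

With the default `reduction='mean'`, the weighted loss is averaged over the sum of the target weights in the batch rather than over the batch size, so its values are not on the same scale as the unweighted loss.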

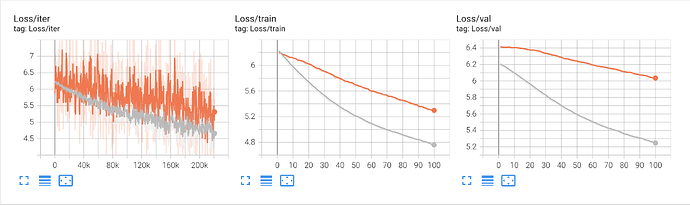

The problem I’m facing is that, without class weighting, the iteration loss decreases smoothly and settles at a certain minimum. After adding class weights, the iteration loss oscillates noticeably, and the overall validation loss doesn’t drop to as low a value as it did before.

In the plot above, the orange curve is the run trained without class weights.

Is there any explanation for this behaviour?