Hi,

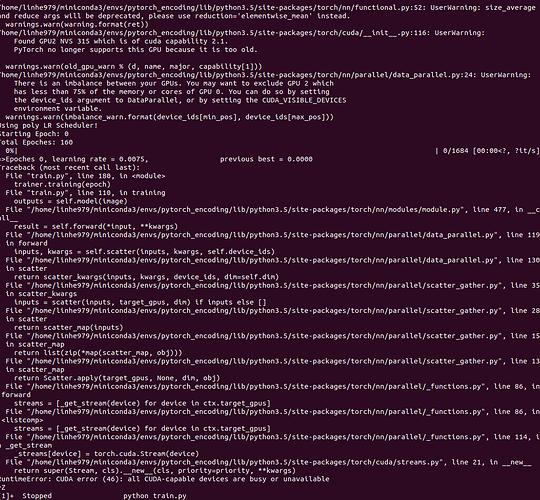

I need synchronized BatchNorm, so I downloaded the project from https://github.com/zhanghang1989/PyTorch-Encoding and ran its train.py to check that everything works. The error “all CUDA-capable devices are busy or unavailable” appears as soon as the training procedure starts, and I'm not sure what causes it. I'm using a remote server and cannot reset the GPUs myself at the moment, although they are nearly idle. Here is some information about my environment:

Ubuntu: 16.04

CUDA: 8.0

PyTorch: 0.4.1

gcc: 5.5

gpu compute mode: default
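
In case it helps narrow things down, here is a minimal sketch of a check that can be run outside train.py. It only assumes that `torch` is importable; the helper name `cuda_report` is mine and is not part of PyTorch-Encoding. It tries a tiny allocation on each visible GPU, which should show whether the error comes from plain PyTorch or only from the project's code.

```python
import os


def cuda_report():
    """Collect basic CUDA facts into a dict (hypothetical helper, not a library API)."""
    report = {"CUDA_VISIBLE_DEVICES": os.environ.get("CUDA_VISIBLE_DEVICES")}
    try:
        import torch
    except ImportError:
        # torch is not installed in this interpreter; nothing more to check.
        report["torch"] = None
        return report

    report["torch"] = torch.__version__
    report["built_with_cuda"] = torch.version.cuda  # CUDA version torch was built against
    report["is_available"] = torch.cuda.is_available()
    report["device_count"] = torch.cuda.device_count()

    # Try a one-element allocation on each visible GPU and record the outcome.
    for i in range(report["device_count"]):
        try:
            torch.zeros(1).cuda(i)
            report["gpu%d" % i] = "ok"
        except RuntimeError as exc:
            report["gpu%d" % i] = str(exc)
    return report


if __name__ == "__main__":
    for key, value in cuda_report().items():
        print(key, "=", value)
```

If the allocation already fails here, the problem is probably in the driver or GPU state rather than in the synchronized BatchNorm extension.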