    class FC(nn.Module):
        def __init__(self, opt):
            super(FC, self).__init__()
            self.encoder = nn.Embedding(opt.VOCAB_SIZE, opt.EMBEDDING_DIM)
            self.gru = nn.Sequential(
                nn.GRU(input_size=100,hidden_size=opt.LINER_HID_SIZE),
                nn.ReLU(False),
            )
            self.fc_1 = nn.Sequential(
                nn.Linear(10000, opt.LINER_HID_SIZE),
                #nn.BatchNorm1d(opt.LINER_HID_SIZE),
                nn.ReLU(False),
                nn.Linear(opt.LINER_HID_SIZE, opt.NUM_CLASS_1*10),
                nn.ReLU(False),
                nn.Linear(opt.NUM_CLASS_1*10, opt.NUM_CLASS_1),
                nn.Dropout(0.5),
            )
            self.fc_2 = nn.Sequential(
                nn.Linear(10000, opt.LINER_HID_SIZE),
                #nn.BatchNorm1d(opt.LINER_HID_SIZE),
                nn.ReLU(False),
                nn.Linear(opt.LINER_HID_SIZE, opt.NUM_CLASS_2*10),
                nn.ReLU(False),
                nn.Linear(opt.NUM_CLASS_2*10, opt.NUM_CLASS_2),
                nn.Dropout(0.5),
            )
            self.fc_3 = nn.Sequential(
                nn.Linear(10000, opt.LINER_HID_SIZE),
                #nn.BatchNorm1d(opt.LINER_HID_SIZE),
                nn.ReLU(False),
                nn.Linear(opt.LINER_HID_SIZE, opt.NUM_CLASS_3*10),
                nn.ReLU(False),
                nn.Linear(opt.NUM_CLASS_3*10, opt.NUM_CLASS_3),
                nn.Dropout(0.5),
            )

        def forward(self, x):
            x = x.long()
            outputs = self.encoder(x)
            outputs = outputs.long()
            outputs = self.gru(outputs)
            outputs = outputs.view(outputs.size()[0], -1)
            output_1 = self.fc_1(outputs)
            output_2 = self.fc_2(outputs)
            output_3 = self.fc_3(outputs)
            return (output_1, output_2, output_3)

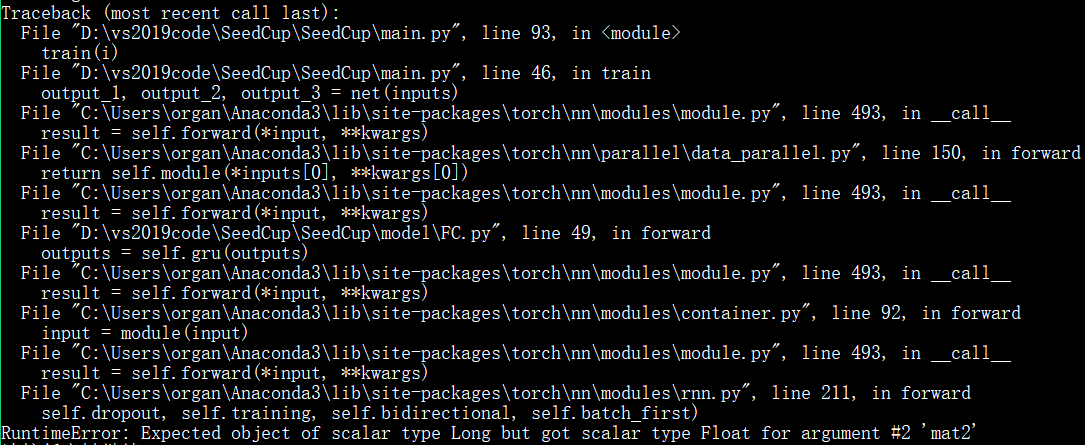

I thought I had simply converted `outputs` to a LongTensor, but I still get the error `expected object of scalar type Long but got scalar type Float for argument #2 'mat2'`. I am confused and cannot work out how to solve this. Please help!
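The mismatch can probably be reproduced in isolation with the minimal sketch below. Only the integer token indices fed to `nn.Embedding` need to be Long. The embedding output and the GRU weights are float, so casting the embeddings with `.long()` makes the GRU's internal matrix multiply mix Long and Float. The sizes and the `(seq_len, batch)` layout are assumptions for illustration, not values from the original `opt`. Note also that `nn.GRU` returns a tuple `(output, h_n)`, so it may need to be called directly rather than placed inside `nn.Sequential` before a `ReLU`.

```python
import torch
import torch.nn as nn

# Hypothetical sizes for illustration only; the real values come from `opt`.
VOCAB_SIZE, EMBEDDING_DIM, HIDDEN = 50, 100, 16
SEQ_LEN, BATCH = 7, 4

encoder = nn.Embedding(VOCAB_SIZE, EMBEDDING_DIM)
gru = nn.GRU(input_size=EMBEDDING_DIM, hidden_size=HIDDEN)

# Token indices must be integer (Long) tensors for nn.Embedding.
tokens = torch.randint(0, VOCAB_SIZE, (SEQ_LEN, BATCH))

# The embedding lookup returns float vectors.
embedded = encoder(tokens)
print(embedded.dtype)

# Casting the embeddings to Long clashes with the float GRU weights.
try:
    gru(embedded.long())
    cast_failed = False
except (RuntimeError, ValueError) as exc:
    cast_failed = True
    print("dtype error:", exc)

# Keeping the embeddings as float works; unpack the (output, h_n) tuple.
gru_out, h_n = gru(embedded)

# Flatten per batch element: (seq_len, batch, hidden) -> (batch, seq_len * hidden).
flat = gru_out.transpose(0, 1).reshape(BATCH, -1)
print(flat.shape)
```

If this is indeed the cause, removing the `outputs = outputs.long()` line in `forward` and unpacking the GRU result should avoid the error. The input size of the first `nn.Linear` would then have to match the flattened GRU output (sequence length times hidden size).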