I am trying to train a model for image segmentation. I have 3 different classes to segment, which are denoted by [0, 1, 2] in the ground-truth image. These are the outputs of the different steps:

Model output: torch.Size([5, 3, 120, 160])  # batch, channel, height, width

Argmax output: torch.Size([5, 120, 160])  # argmax over the channel axis

Ground truth: torch.Size([5, 120, 160])  # batch, height, width
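The shapes and dtypes above can be reproduced with random tensors, which makes it easy to see that the argmax output is an integer (Long) tensor with no gradient attached. A minimal sketch; `logits` and the random values are placeholders for the real model output and labels, not the actual data:

```python
import torch

# Placeholder for the model output: (batch, channel, height, width), float32
logits = torch.randn(5, 3, 120, 160, requires_grad=True)

# Argmax over the channel axis keeps one class index per pixel
pred = logits.argmax(dim=1)

# Placeholder ground truth holding class indices 0, 1, 2
label = torch.randint(0, 3, (5, 120, 160))

print(logits.shape, logits.dtype)  # torch.Size([5, 3, 120, 160]) torch.float32
print(pred.shape, pred.dtype)      # torch.Size([5, 120, 160]) torch.int64
print(label.shape, label.dtype)    # torch.Size([5, 120, 160]) torch.int64
print(pred.requires_grad)          # False: argmax is not differentiable
```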

I am using the following snippet to compute the loss with `CrossEntropyLoss`:

    loss_seg = criterion_seg(x, label)  # x = output from argmax, label = ground truth
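For comparison, the PyTorch documentation for `nn.CrossEntropyLoss` describes its input as unnormalized float scores of shape (N, C, H, W) in the per-pixel case, with a Long target of shape (N, H, W) holding class indices. A minimal sketch of that contract, assuming random placeholder tensors in place of the real model output and ground truth:

```python
import torch
import torch.nn as nn

criterion_seg = nn.CrossEntropyLoss()

# Placeholder raw model output (logits): float32, shape (N, C, H, W)
logits = torch.randn(5, 3, 120, 160, requires_grad=True)
# Placeholder ground truth: class indices in {0, 1, 2}, int64, shape (N, H, W)
label = torch.randint(0, 3, (5, 120, 160))

# Pass the logits directly; CrossEntropyLoss applies log_softmax internally
loss_seg = criterion_seg(logits, label)
loss_seg.backward()

# Argmax is only used afterwards, e.g. for predictions or pixel accuracy
pred = logits.argmax(dim=1)
pixel_acc = (pred == label).float().mean()
print(loss_seg.item(), pixel_acc.item())
```

In this sketch the argmax is applied only after the loss; the loss itself needs the per-class scores, which argmax discards.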

Doing so raises the following error:

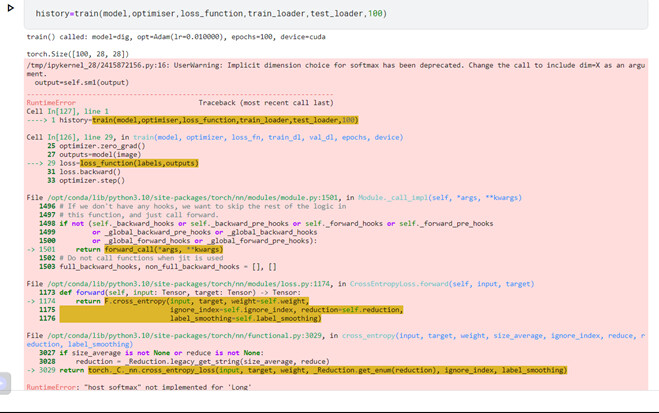

    ---------------------------------------------------------------------------
    RuntimeError Traceback (most recent call last)
    <ipython-input-40-e1fd37d7abc6> in <module>
          8
          9 print("epoch",epoch)
    ---> 10 train_loss, model = train_model(model, "seg", seg_train_loader, criterion_blob, criterion_seg, optimizer, avDev)
         11 loss_details[epoch] = train_loss
         12 print("train loss",train_loss)

    <ipython-input-29-f9e95c6cbb49> in train_model(model, mode, train_loader, criterion_blob, criterion_seg, optimizer, device)
         36 # print(label.size())
         37 optimizer.zero_grad()
    ---> 38 loss_seg = criterion_seg(torch.tensor(x, dtype=torch.long, device=device), label)
         39
         40

    ~/anaconda3/lib/python3.7/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
        539 result = self._slow_forward(*input, **kwargs)
        540 else:
    --> 541 result = self.forward(*input, **kwargs)
        542 for hook in self._forward_hooks.values():
        543 hook_result = hook(self, input, result)

    ~/anaconda3/lib/python3.7/site-packages/torch/nn/modules/loss.py in forward(self, input, target)
        914 def forward(self, input, target):
        915 return F.cross_entropy(input, target, weight=self.weight,
    --> 916 ignore_index=self.ignore_index, reduction=self.reduction)
        917
        918

    ~/anaconda3/lib/python3.7/site-packages/torch/nn/functional.py in cross_entropy(input, target, weight, size_average, ignore_index, reduce, reduction)
       2007 if size_average is not None or reduce is not None:
       2008 reduction = _Reduction.legacy_get_string(size_average, reduce)
    -> 2009 return nll_loss(log_softmax(input, 1), target, weight, None, ignore_index, None, reduction)
       2010
       2011

    ~/anaconda3/lib/python3.7/site-packages/torch/nn/functional.py in log_softmax(input, dim, _stacklevel, dtype)
       1315 dim = _get_softmax_dim('log_softmax', input.dim(), _stacklevel)
       1316 if dtype is None:
    -> 1317 ret = input.log_softmax(dim)
       1318 else:
       1319 ret = input.log_softmax(dim, dtype=dtype)

    RuntimeError: "host_softmax" not implemented for 'Long'
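
The innermost frame of the traceback is `input.log_softmax(dim)`, which is only implemented for floating-point tensors. The failure can be reproduced in isolation with a small sketch (random placeholder data; the exact message differs across PyTorch versions, and "host_softmax" comes from the older release in this traceback):

```python
import torch

# Same dtype and shape as the argmax output in the question: int64 class indices
x = torch.randint(0, 3, (5, 120, 160))

# cross_entropy starts with log_softmax(input, 1); softmax kernels need a float dtype
try:
    x.log_softmax(1)
    raised = False
except RuntimeError as err:  # also catches NotImplementedError, a RuntimeError subclass
    raised = True
    print(type(err).__name__, err)

# The same call on floating-point scores works
scores = torch.randn(5, 3, 120, 160)
log_probs = scores.log_softmax(1)
print(log_probs.shape)  # torch.Size([5, 3, 120, 160])
```

Casting `x` to float would avoid this particular error but would not make the loss meaningful: the argmax output carries no gradient and no longer has the channel dimension the loss expects.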

How can I fix this error?

TIA