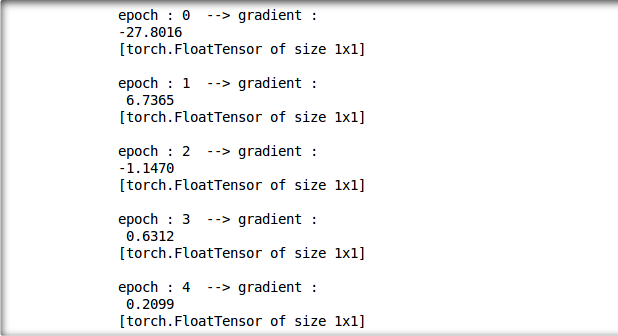

I'm new to PyTorch, so I'm implementing a simple linear regression. In the main epoch loop, I take the gradient of a parameter and append it to a Python list. But the list ends up holding a single value repeated for its whole length, and that value is the gradient from the last epoch. When I print the gradient instead of appending it, I get the correct value for every epoch.
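One likely explanation, offered as an assumption rather than a confirmed diagnosis: `.grad.data` (like `.grad.detach()`) returns a tensor that shares storage with the parameter's gradient. If that gradient is reset and refilled in place each epoch (which is what `optimizer.zero_grad(set_to_none=False)` does, and was the default before PyTorch 2.0), every appended entry points at the same memory and shows whatever was written last. The sketch below reproduces the symptom with a plain stand-in tensor (`grad` here is hypothetical, not the model's real gradient) and shows that appending a `.clone()` keeps each value:

```python
import torch

# Hypothetical stand-in for model.linear.weight.grad: one tensor reused every epoch
grad = torch.zeros(2)

aliased = []  # appends the tensor itself, so all entries share one storage
copied = []   # appends an independent copy taken at that moment

for epoch in range(3):
    grad.zero_()             # in-place reset, like zero_grad(set_to_none=False)
    grad.add_(float(epoch))  # in-place update, like backward() accumulating into .grad
    print(epoch, grad)       # printing shows the value at this epoch
    aliased.append(grad)
    copied.append(grad.clone())

print(aliased)  # every entry shows the last epoch's value
print(copied)   # each entry keeps its own epoch's value
```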

```python
grad_weight = []

for epoch in range(250):
    # 1. Clear gradients w.r.t. parameters
    optimizer.zero_grad()

    outputs = model(inputs)
    loss = criterion(outputs, labels)
    loss.backward()

    grad = model.linear.weight.grad.data
    print("epoch:", epoch, "-> gradient:", grad)
    grad_weight.append(grad)

    optimizer.step()
```

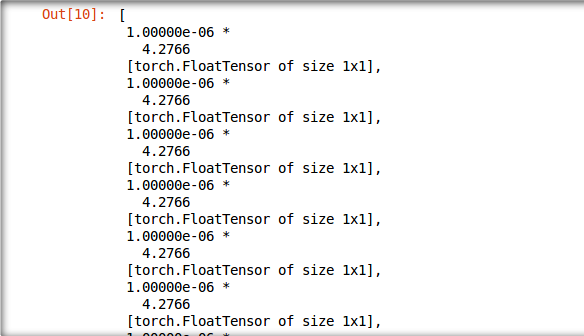

But when I inspect `grad_weight`, the contents of the list look like this:
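A possible fix, sketched under stated assumptions: store an independent snapshot with `.detach().clone()` instead of `.grad.data`. The model class, the synthetic data (`y = 2x + 1`), the loss, and the learning rate below are all hypothetical stand-ins chosen only so the loop runs on its own; `set_to_none=False` is passed explicitly to reproduce the in-place zeroing that makes the uncloned version misbehave.

```python
import torch
import torch.nn as nn

# Hypothetical model: a single nn.Linear stored as `self.linear`,
# matching the `model.linear.weight` access in the question.
class LinearRegression(nn.Module):
    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(1, 1)

    def forward(self, x):
        return self.linear(x)

torch.manual_seed(0)
# Hypothetical synthetic data: y = 2x + 1
inputs = torch.linspace(0, 1, 20).unsqueeze(1)
labels = 2 * inputs + 1

model = LinearRegression()
criterion = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

grad_weight = []

for epoch in range(250):
    # 1. Clear gradients w.r.t. parameters.
    # set_to_none=False zeroes the existing .grad tensor in place,
    # so a view of .grad would be overwritten on later epochs.
    optimizer.zero_grad(set_to_none=False)

    outputs = model(inputs)
    loss = criterion(outputs, labels)
    loss.backward()

    # Copy the gradient into new memory instead of keeping a view of .grad
    grad = model.linear.weight.grad.detach().clone()
    grad_weight.append(grad)

    optimizer.step()

print(grad_weight[0], grad_weight[-1])
```

`.detach()` is generally preferred over `.data`, but on its own it still shares storage with `.grad`; the `.clone()` is what gives each list entry its own memory.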