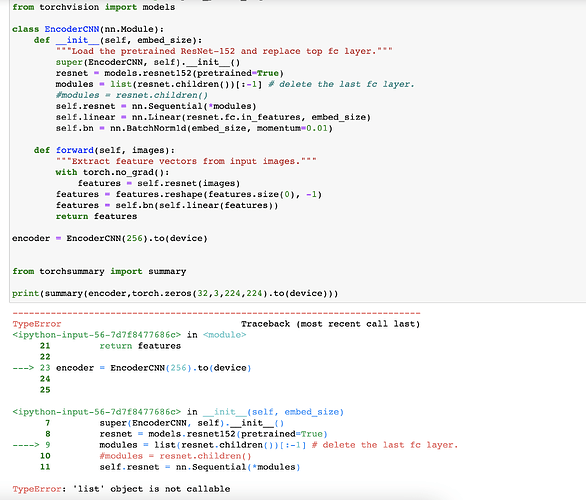

That’s strange; I cannot reproduce this with:

```python
import torchvision

a = torchvision.models.resnet152()
b = list(a.children())[:-1]
```

Can you check if that works on your system?

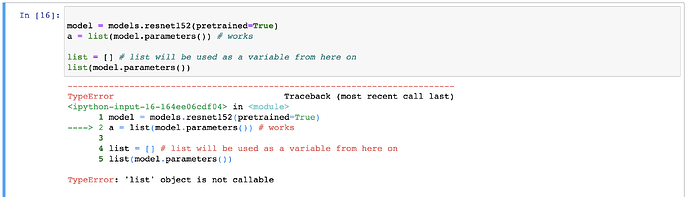

Could you check if you have (accidentally) overridden the built-in `list` function, as seen here?

```python
import torch.nn as nn

model = nn.Linear(2, 2)  # placeholder model for illustration

a = list(model.parameters())  # works

list = []  # list will be used as a variable from here on

list(model.parameters())
# > TypeError: 'list' object is not callable
```
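If it is unclear where the built-in was shadowed, the source can be scanned for statements that rebind the name `list`. Below is a minimal sketch using the standard-library `ast` module; `find_list_rebinds` is a hypothetical helper written for this example, not part of PyTorch or any other library, and it only reports assignment-style bindings.

```python
import ast


def find_list_rebinds(source):
    """Return sorted line numbers where `source` binds the name `list`.

    Hypothetical helper: it catches name bindings such as `list = []`,
    `for list in ...` or `list, other = ...`; function parameters,
    imports and `def list(...)` are not reported.
    """
    lines = set()
    for node in ast.walk(ast.parse(source)):
        # ast.Store marks a name that is being assigned to, not just read
        if isinstance(node, ast.Name) and node.id == "list" and isinstance(node.ctx, ast.Store):
            lines.add(node.lineno)
    return sorted(lines)


example = """
a = list(model.parameters())
list = []
list.append(a)
"""
print(find_list_rebinds(example))  # [3]
```

For a script, passing the file contents (e.g. `find_list_rebinds(open(path).read())`) points at the offending lines; reads such as `list.append(...)` are not reported because they do not rebind the name.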

Hi! Thanks for the quick response. @ptrblck, it replicates the same error (see screenshot).

It seems @ptrblck might be right and the `list` function is somehow overridden. I’m not sure how to fix that, or where in my code that could happen.

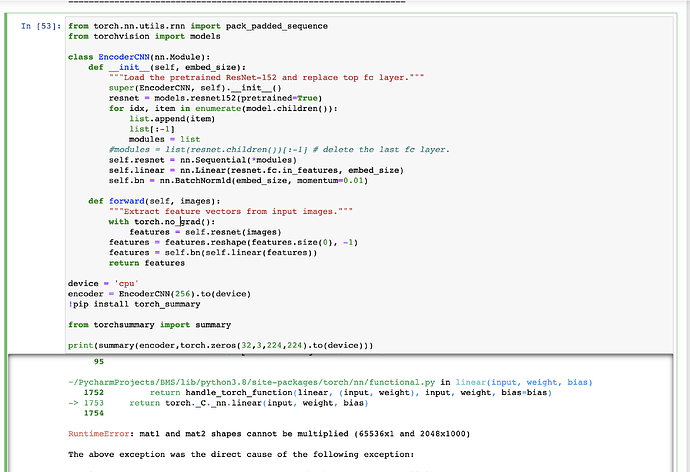

The above work-around seems to be doing what I wanted, but now it throws a new error: RuntimeError: mat1 and mat2 shapes cannot be multiplied (65536x1 and 2048x1000).

The entire trace:

```
Requirement already satisfied: torch_summary in ./lib/python3.8/site-packages (1.4.5)

RuntimeError                              Traceback (most recent call last)
~/PycharmProjects/BMS/lib/python3.8/site-packages/torchsummary/torchsummary.py in summary(model, input_data, batch_dim, branching, col_names, col_width, depth, device, dtypes, verbose, *args, **kwargs)
    139 with torch.no_grad():
--> 140 _ = model.to(device)(*x, *args, **kwargs) # type: ignore[misc]
    141 except Exception as e:

~/PycharmProjects/BMS/lib/python3.8/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
    888 else:
--> 889 result = self.forward(*input, **kwargs)
    890 for hook in itertools.chain(

in forward(self, images)
     20 with torch.no_grad():
---> 21 features = self.resnet(images)
     22 features = features.reshape(features.size(0), -1)

~/PycharmProjects/BMS/lib/python3.8/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
    888 else:
--> 889 result = self.forward(*input, **kwargs)
    890 for hook in itertools.chain(

~/PycharmProjects/BMS/lib/python3.8/site-packages/torch/nn/modules/container.py in forward(self, input)
    118 for module in self:
--> 119 input = module(input)
    120 return input

~/PycharmProjects/BMS/lib/python3.8/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)
    888 else:
--> 889 result = self.forward(*input, **kwargs)
    890 for hook in itertools.chain(

~/PycharmProjects/BMS/lib/python3.8/site-packages/torch/nn/modules/linear.py in forward(self, input)
     93 def forward(self, input: Tensor) -> Tensor:
---> 94 return F.linear(input, self.weight, self.bias)
     95

~/PycharmProjects/BMS/lib/python3.8/site-packages/torch/nn/functional.py in linear(input, weight, bias)
   1752 return handle_torch_function(linear, (input, weight), input, weight, bias=bias)
-> 1753 return torch._C._nn.linear(input, weight, bias)
   1754

RuntimeError: mat1 and mat2 shapes cannot be multiplied (65536x1 and 2048x1000)

The above exception was the direct cause of the following exception:

RuntimeError                              Traceback (most recent call last)
in
     30 from torchsummary import summary
     31
---> 32 print(summary(encoder,torch.zeros(32,3,224,224).to(device)))

~/PycharmProjects/BMS/lib/python3.8/site-packages/torchsummary/torchsummary.py in summary(model, input_data, batch_dim, branching, col_names, col_width, depth, device, dtypes, verbose, *args, **kwargs)
    141 except Exception as e:
    142 executed_layers = [layer for layer in summary_list if layer.executed]
--> 143 raise RuntimeError(
    144 "Failed to run torchsummary. See above stack traces for more details. "
    145 "Executed layers up to: {}".format(executed_layers)
```

RuntimeError: Failed to run torchsummary. See above stack traces for more details. Executed layers up to: [Conv2d: 2-1, BatchNorm2d: 2-2, ReLU: 2-3, MaxPool2d: 2-4, Sequential: 2-5, Bottleneck: 3-1, Conv2d: 4-1, BatchNorm2d: 4-2, ReLU: 4-3, Conv2d: 4-4, BatchNorm2d: 4-5, ReLU: 4-6, Conv2d: 4-7, BatchNorm2d: 4-8, Sequential: 4-9, ReLU: 4-10, Bottleneck: 3-2, Conv2d: 4-11, BatchNorm2d: 4-12, ReLU: 4-13, Conv2d: 4-14, BatchNorm2d: 4-15, ReLU: 4-16, Conv2d: 4-17, BatchNorm2d: 4-18, ReLU: 4-19, Bottleneck: 3-3, Conv2d: 4-20, BatchNorm2d: 4-21, ReLU: 4-22, Conv2d: 4-23, BatchNorm2d: 4-24, ReLU: 4-25, Conv2d: 4-26, BatchNorm2d: 4-27, ReLU: 4-28, Sequential: 2-6, Bottleneck: 3-4, Conv2d: 4-29, BatchNorm2d: 4-30, ReLU: 4-31, Conv2d: 4-32, BatchNorm2d: 4-33, ReLU: 4-34, Conv2d: 4-35, BatchNorm2d: 4-36, Sequential: 4-37, ReLU: 4-38, Bottleneck: 3-5, Conv2d: 4-39, BatchNorm2d: 4-40, ReLU: 4-41, Conv2d: 4-42, BatchNorm2d: 4-43, ReLU: 4-44, Conv2d: 4-45, BatchNorm2d: 4-46, ReLU: 4-47, Bottleneck: 3-6, Conv2d: 4-48, BatchNorm2d: 4-49, ReLU: 4-50, Conv2d: 4-51, BatchNorm2d: 4-52, ReLU: 4-53, Conv2d: 4-54, BatchNorm2d: 4-55, ReLU: 4-56, Bottleneck: 3-7, Conv2d: 4-57, BatchNorm2d: 4-58, ReLU: 4-59, Conv2d: 4-60, BatchNorm2d: 4-61, ReLU: 4-62, Conv2d: 4-63, BatchNorm2d: 4-64, ReLU: 4-65, Bottleneck: 3-8, Conv2d: 4-66, BatchNorm2d: 4-67, ReLU: 4-68, Conv2d: 4-69, BatchNorm2d: 4-70, ReLU: 4-71, Conv2d: 4-72, BatchNorm2d: 4-73, ReLU: 4-74, Bottleneck: 3-9, Conv2d: 4-75, BatchNorm2d: 4-76, ReLU: 4-77, Conv2d: 4-78, BatchNorm2d: 4-79, ReLU: 4-80, Conv2d: 4-81, BatchNorm2d: 4-82, ReLU: 4-83, Bottleneck: 3-10, Conv2d: 4-84, BatchNorm2d: 4-85, ReLU: 4-86, Conv2d: 4-87, BatchNorm2d: 4-88, ReLU: 4-89, Conv2d: 4-90, BatchNorm2d: 4-91, ReLU: 4-92, Bottleneck: 3-11, Conv2d: 4-93, BatchNorm2d: 4-94, ReLU: 4-95, Conv2d: 4-96, BatchNorm2d: 4-97, ReLU: 4-98, Conv2d: 4-99, BatchNorm2d: 4-100, ReLU: 4-101, Sequential: 2-7, Bottleneck: 3-12, Conv2d: 4-102, BatchNorm2d: 4-103, ReLU: 4-104, Conv2d: 4-105, 
BatchNorm2d: 4-106, ReLU: 4-107, Conv2d: 4-108, BatchNorm2d: 4-109, Sequential: 4-110, ReLU: 4-111, Bottleneck: 3-13, Conv2d: 4-112, BatchNorm2d: 4-113, ReLU: 4-114, Conv2d: 4-115, BatchNorm2d: 4-116, ReLU: 4-117, Conv2d: 4-118, BatchNorm2d: 4-119, ReLU: 4-120, Bottleneck: 3-14, Conv2d: 4-121, BatchNorm2d: 4-122, ReLU: 4-123, Conv2d: 4-124, BatchNorm2d: 4-125, ReLU: 4-126, Conv2d: 4-127, BatchNorm2d: 4-128, ReLU: 4-129, Bottleneck: 3-15, Conv2d: 4-130, BatchNorm2d: 4-131, ReLU: 4-132, Conv2d: 4-133, BatchNorm2d: 4-134, ReLU: 4-135, Conv2d: 4-136, BatchNorm2d: 4-137, ReLU: 4-138, Bottleneck: 3-16, Conv2d: 4-139, BatchNorm2d: 4-140, ReLU: 4-141, Conv2d: 4-142, BatchNorm2d: 4-143, ReLU: 4-144, Conv2d: 4-145, BatchNorm2d: 4-146, ReLU: 4-147, Bottleneck: 3-17, Conv2d: 4-148, BatchNorm2d: 4-149, ReLU: 4-150, Conv2d: 4-151, BatchNorm2d: 4-152, ReLU: 4-153, Conv2d: 4-154, BatchNorm2d: 4-155, ReLU: 4-156, Bottleneck: 3-18, Conv2d: 4-157, BatchNorm2d: 4-158, ReLU: 4-159, Conv2d: 4-160, BatchNorm2d: 4-161, ReLU: 4-162, Conv2d: 4-163, BatchNorm2d: 4-164, ReLU: 4-165, Bottleneck: 3-19, Conv2d: 4-166, BatchNorm2d: 4-167, ReLU: 4-168, Conv2d: 4-169, BatchNorm2d: 4-170, ReLU: 4-171, Conv2d: 4-172, BatchNorm2d: 4-173, ReLU: 4-174, Bottleneck: 3-20, Conv2d: 4-175, BatchNorm2d: 4-176, ReLU: 4-177, Conv2d: 4-178, BatchNorm2d: 4-179, ReLU: 4-180, Conv2d: 4-181, BatchNorm2d: 4-182, ReLU: 4-183, Bottleneck: 3-21, Conv2d: 4-184, BatchNorm2d: 4-185, ReLU: 4-186, Conv2d: 4-187, BatchNorm2d: 4-188, ReLU: 4-189, Conv2d: 4-190, BatchNorm2d: 4-191, ReLU: 4-192, Bottleneck: 3-22, Conv2d: 4-193, BatchNorm2d: 4-194, ReLU: 4-195, Conv2d: 4-196, BatchNorm2d: 4-197, ReLU: 4-198, Conv2d: 4-199, BatchNorm2d: 4-200, ReLU: 4-201, Bottleneck: 3-23, Conv2d: 4-202, BatchNorm2d: 4-203, ReLU: 4-204, Conv2d: 4-205, BatchNorm2d: 4-206, ReLU: 4-207, Conv2d: 4-208, BatchNorm2d: 4-209, ReLU: 4-210, Bottleneck: 3-24, Conv2d: 4-211, BatchNorm2d: 4-212, ReLU: 4-213, Conv2d: 4-214, BatchNorm2d: 4-215, ReLU: 4-216, 
Conv2d: 4-217, BatchNorm2d: 4-218, ReLU: 4-219, Bottleneck: 3-25, Conv2d: 4-220, BatchNorm2d: 4-221, ReLU: 4-222, Conv2d: 4-223, BatchNorm2d: 4-224, ReLU: 4-225, Conv2d: 4-226, BatchNorm2d: 4-227, ReLU: 4-228, Bottleneck: 3-26, Conv2d: 4-229, BatchNorm2d: 4-230, ReLU: 4-231, Conv2d: 4-232, BatchNorm2d: 4-233, ReLU: 4-234, Conv2d: 4-235, BatchNorm2d: 4-236, ReLU: 4-237, Bottleneck: 3-27, Conv2d: 4-238, BatchNorm2d: 4-239, ReLU: 4-240, Conv2d: 4-241, BatchNorm2d: 4-242, ReLU: 4-243, Conv2d: 4-244, BatchNorm2d: 4-245, ReLU: 4-246, Bottleneck: 3-28, Conv2d: 4-247, BatchNorm2d: 4-248, ReLU: 4-249, Conv2d: 4-250, BatchNorm2d: 4-251, ReLU: 4-252, Conv2d: 4-253, BatchNorm2d: 4-254, ReLU: 4-255, Bottleneck: 3-29, Conv2d: 4-256, BatchNorm2d: 4-257, ReLU: 4-258, Conv2d: 4-259, BatchNorm2d: 4-260, ReLU: 4-261, Conv2d: 4-262, BatchNorm2d: 4-263, ReLU: 4-264, Bottleneck: 3-30, Conv2d: 4-265, BatchNorm2d: 4-266, ReLU: 4-267, Conv2d: 4-268, BatchNorm2d: 4-269, ReLU: 4-270, Conv2d: 4-271, BatchNorm2d: 4-272, ReLU: 4-273, Bottleneck: 3-31, Conv2d: 4-274, BatchNorm2d: 4-275, ReLU: 4-276, Conv2d: 4-277, BatchNorm2d: 4-278, ReLU: 4-279, Conv2d: 4-280, BatchNorm2d: 4-281, ReLU: 4-282, Bottleneck: 3-32, Conv2d: 4-283, BatchNorm2d: 4-284, ReLU: 4-285, Conv2d: 4-286, BatchNorm2d: 4-287, ReLU: 4-288, Conv2d: 4-289, BatchNorm2d: 4-290, ReLU: 4-291, Bottleneck: 3-33, Conv2d: 4-292, BatchNorm2d: 4-293, ReLU: 4-294, Conv2d: 4-295, BatchNorm2d: 4-296, ReLU: 4-297, Conv2d: 4-298, BatchNorm2d: 4-299, ReLU: 4-300, Bottleneck: 3-34, Conv2d: 4-301, BatchNorm2d: 4-302, ReLU: 4-303, Conv2d: 4-304, BatchNorm2d: 4-305, ReLU: 4-306, Conv2d: 4-307, BatchNorm2d: 4-308, ReLU: 4-309, Bottleneck: 3-35, Conv2d: 4-310, BatchNorm2d: 4-311, ReLU: 4-312, Conv2d: 4-313, BatchNorm2d: 4-314, ReLU: 4-315, Conv2d: 4-316, BatchNorm2d: 4-317, ReLU: 4-318, Bottleneck: 3-36, Conv2d: 4-319, BatchNorm2d: 4-320, ReLU: 4-321, Conv2d: 4-322, BatchNorm2d: 4-323, ReLU: 4-324, Conv2d: 4-325, BatchNorm2d: 4-326, ReLU: 4-327, 
Bottleneck: 3-37, Conv2d: 4-328, BatchNorm2d: 4-329, ReLU: 4-330, Conv2d: 4-331, BatchNorm2d: 4-332, ReLU: 4-333, Conv2d: 4-334, BatchNorm2d: 4-335, ReLU: 4-336, Bottleneck: 3-38, Conv2d: 4-337, BatchNorm2d: 4-338, ReLU: 4-339, Conv2d: 4-340, BatchNorm2d: 4-341, ReLU: 4-342, Conv2d: 4-343, BatchNorm2d: 4-344, ReLU: 4-345, Bottleneck: 3-39, Conv2d: 4-346, BatchNorm2d: 4-347, ReLU: 4-348, Conv2d: 4-349, BatchNorm2d: 4-350, ReLU: 4-351, Conv2d: 4-352, BatchNorm2d: 4-353, ReLU: 4-354, Bottleneck: 3-40, Conv2d: 4-355, BatchNorm2d: 4-356, ReLU: 4-357, Conv2d: 4-358, BatchNorm2d: 4-359, ReLU: 4-360, Conv2d: 4-361, BatchNorm2d: 4-362, ReLU: 4-363, Bottleneck: 3-41, Conv2d: 4-364, BatchNorm2d: 4-365, ReLU: 4-366, Conv2d: 4-367, BatchNorm2d: 4-368, ReLU: 4-369, Conv2d: 4-370, BatchNorm2d: 4-371, ReLU: 4-372, Bottleneck: 3-42, Conv2d: 4-373, BatchNorm2d: 4-374, ReLU: 4-375, Conv2d: 4-376, BatchNorm2d: 4-377, ReLU: 4-378, Conv2d: 4-379, BatchNorm2d: 4-380, ReLU: 4-381, Bottleneck: 3-43, Conv2d: 4-382, BatchNorm2d: 4-383, ReLU: 4-384, Conv2d: 4-385, BatchNorm2d: 4-386, ReLU: 4-387, Conv2d: 4-388, BatchNorm2d: 4-389, ReLU: 4-390, Bottleneck: 3-44, Conv2d: 4-391, BatchNorm2d: 4-392, ReLU: 4-393, Conv2d: 4-394, BatchNorm2d: 4-395, ReLU: 4-396, Conv2d: 4-397, BatchNorm2d: 4-398, ReLU: 4-399, Bottleneck: 3-45, Conv2d: 4-400, BatchNorm2d: 4-401, ReLU: 4-402, Conv2d: 4-403, BatchNorm2d: 4-404, ReLU: 4-405, Conv2d: 4-406, BatchNorm2d: 4-407, ReLU: 4-408, Bottleneck: 3-46, Conv2d: 4-409, BatchNorm2d: 4-410, ReLU: 4-411, Conv2d: 4-412, BatchNorm2d: 4-413, ReLU: 4-414, Conv2d: 4-415, BatchNorm2d: 4-416, ReLU: 4-417, Bottleneck: 3-47, Conv2d: 4-418, BatchNorm2d: 4-419, ReLU: 4-420, Conv2d: 4-421, BatchNorm2d: 4-422, ReLU: 4-423, Conv2d: 4-424, BatchNorm2d: 4-425, ReLU: 4-426, Sequential: 2-8, Bottleneck: 3-48, Conv2d: 4-427, BatchNorm2d: 4-428, ReLU: 4-429, Conv2d: 4-430, BatchNorm2d: 4-431, ReLU: 4-432, Conv2d: 4-433, BatchNorm2d: 4-434, Sequential: 4-435, ReLU: 4-436, Bottleneck: 3-49, 
Conv2d: 4-437, BatchNorm2d: 4-438, ReLU: 4-439, Conv2d: 4-440, BatchNorm2d: 4-441, ReLU: 4-442, Conv2d: 4-443, BatchNorm2d: 4-444, ReLU: 4-445, Bottleneck: 3-50, Conv2d: 4-446, BatchNorm2d: 4-447, ReLU: 4-448, Conv2d: 4-449, BatchNorm2d: 4-450, ReLU: 4-451, Conv2d: 4-452, BatchNorm2d: 4-453, ReLU: 4-454, AdaptiveAvgPool2d: 2-9]

The current error is raised by a linear layer due to a shape mismatch.
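The numbers in the message can be traced back with a little shape arithmetic. A minimal sketch, assuming (from the trace) a batch of 32 images and that the last executed layer, `AdaptiveAvgPool2d`, returns ResNet-152's 2048 channels at 1x1 resolution: `nn.Linear` works on the last dimension (size 1 here) and folds all leading dimensions together, so it sees 32 * 2048 * 1 = 65536 rows of a single feature.

```python
import torch
import torch.nn as nn

# Assumed shapes (from the trace): batch size 32, ResNet-152 pooled features
# with 2048 channels at 1x1 spatial resolution.
pooled = torch.zeros(32, 2048, 1, 1)  # what AdaptiveAvgPool2d returns
fc = nn.Linear(2048, 1000)            # ResNet-152's classifier: 2048 -> 1000

# nn.Linear treats the last dim as features and folds the leading dims together.
print(pooled.reshape(-1, pooled.size(-1)).shape)  # torch.Size([65536, 1])

try:
    fc(pooled)  # expected to fail with a mat1/mat2 shape-mismatch RuntimeError
except RuntimeError as e:
    print(e)

# Flattening everything after the batch dim gives the [32, 2048] input fc expects.
print(fc(torch.flatten(pooled, 1)).shape)  # torch.Size([32, 1000])
```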

Based on your screenshot, it seems you are still using an object called `list`, which should also raise the previous error (you are calling `list.append`, so just rename this object to avoid shadowing the built-in `list`).
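A minimal illustration of both the problem and the fix, with strings standing in for layers. Deleting the global name is a quick recovery in an interactive session such as Jupyter; renaming the variable is the lasting fix.

```python
# Reproduces the bug: the name `list` is rebound to a list object,
# so calling it no longer reaches the built-in type.
list = []
list.append("conv1")  # works, because `list` is now an ordinary list
try:
    list(range(3))
except TypeError as e:
    print(e)  # 'list' object is not callable

# Quick recovery: deleting the global name makes `list` resolve to the built-in again.
del list
print(list(range(3)))  # [0, 1, 2]

# Lasting fix: give the container a descriptive name instead.
modules = []
modules.append("conv1")
print(modules)  # ['conv1']
```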

Also, I’m unsure about the “slicing” of the model, as it seems you are appending all layers to `modules`.

Did you check if `modules` contains the wanted layers?

Note that wrapping all child modules in an `nn.Sequential` container will only add these modules and will skip all functional API calls made in the original `forward` method. For ResNets, the flattening operation (`torch.flatten(x, 1)` between the pooling layer and `fc`) will not be included, so I would recommend using `nn.Sequential` only for very simple models and verifying that the new module behaves as expected.

PS: you can post code snippets by wrapping them in three backticks ```, which makes debugging easier.

@ptrblck, thank you so much! I found the line of code where I overrode the `list` function and rewrote it. Now it works fine with the original code. Thank you again.