Hey guys,

I'm having a really bad time with this error when using PyTorch with CUDA on Windows:

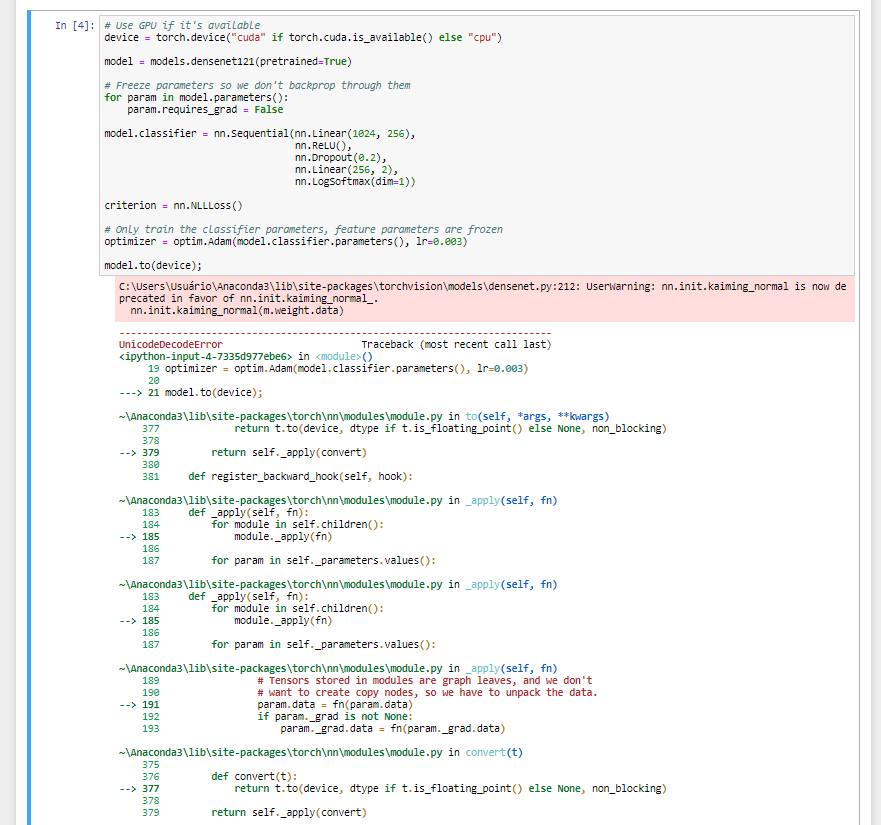

~\Anaconda3\lib\site-packages\torch\cuda\__init__.py in _lazy_init()

160 _check_driver()

161 torch._C._cuda_init()

--> 162 _cudart = _load_cudart()

163 _cudart.cudaGetErrorName.restype = ctypes.c_char_p

164 _cudart.cudaGetErrorString.restype = ctypes.c_char_p

~\Anaconda3\lib\site-packages\torch\cuda\__init__.py in _load_cudart()

57 # First check the main program for CUDA symbols

58 if platform.system() == 'Windows':

---> 59 lib = find_cuda_windows_lib()

60 else:

61 lib = ctypes.cdll.LoadLibrary(None)

~\Anaconda3\lib\site-packages\torch\cuda\__init__.py in find_cuda_windows_lib()

30 proc = Popen(['where', 'cudart64*.dll'], stdout=PIPE, stderr=PIPE)

31 out, err = proc.communicate()

---> 32 out = out.decode().strip()

33 if len(out) > 0:

34 if out.find('\r\n') != -1:

UnicodeDecodeError: 'utf-8' codec can't decode byte 0xa0 in position 12: invalid start byte
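As far as I can tell, the failure is in line 32 of the trace: `out.decode()` assumes the output of `where` is UTF-8, and the byte `0xa0` at position 12 is not a valid UTF-8 start byte. Below is a minimal sketch that reproduces the same error outside PyTorch and shows one possible fallback. It rests on assumptions: `decode_console_output` is a hypothetical helper (not part of PyTorch), the sample bytes are made up, and I have not confirmed which code page my console actually uses.

```python
import locale


def decode_console_output(raw: bytes) -> str:
    """Hypothetical helper: decode subprocess output without assuming UTF-8."""
    try:
        return raw.decode("utf-8").strip()
    except UnicodeDecodeError:
        # Assumption: the bytes are in the locale's preferred encoding
        # (on Windows this is typically an ANSI/OEM code page).
        fallback = locale.getpreferredencoding(False)
        # errors="replace" keeps the call from raising if the guess is wrong.
        return raw.decode(fallback, errors="replace").strip()


# Made-up sample: a path-like byte string with 0xa0 at index 12,
# mimicking the position reported in the traceback.
sample = b"C:\\Users\\abc\xa0\\cudart64_xx.dll\r\n"

try:
    sample.decode()  # same call as in the traceback; defaults to UTF-8
except UnicodeDecodeError as exc:
    print("strict UTF-8 decode failed:", exc)

print("fallback decode:", decode_console_output(sample))
```

If this is right, the non-ASCII character probably comes from a folder name on the path to `cudart64*.dll`, but that is a guess on my part.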

Thanks in advance,

João Manrique