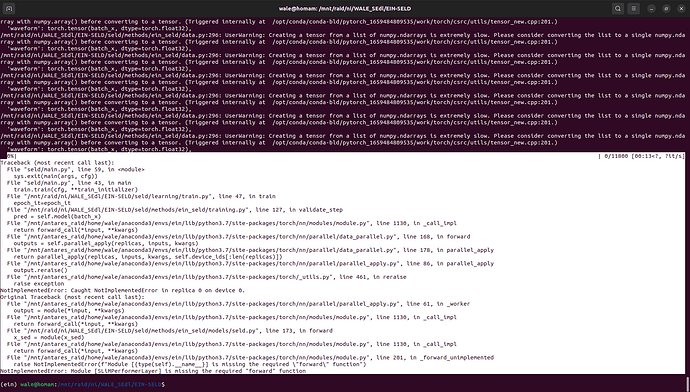

Please I have a similar issue with my script here:

from IPython.core.completerlib import module_list

from numpy.core.fromnumeric import shape

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.nn.modules.container import ModuleList, Sequential

from methods.utils.model_utilities_orth import ( CustomCNN,PositionalEncoding, init_layer) #( DoubleConv,PositionalEncoding, init_layer)

from methods.utils.cbam import CBAM

from performer_stuffs.slim_performer_model import SLiMPerformerLayer

#import performer.models.slim_performer.pytorch.numerator_and_denominator as num_and_den

from performer_stuffs import numerator_and_denominator as num_and_den

class EINV2(nn.Module):

def init(self, cfg, dataset):

super().init()

self.pe_enable = False # Ture | False

if cfg['data']['audio_feature'] == 'logmel&intensity':

self.f_bins = cfg['data']['n_mels']

self.in_channels = 7

n_layers = 2

feature_type = 'logmel&intensity'

self.downsample_ratio = 2 ** 2

self.sed_conv_block1 = nn.Sequential(

CustomCNN(in_channels=4, out_channels=64, kernel_size = 3),

)

# self.cbam_sed1 = CBAM(gate_channels =64)

nn.AvgPool2d(kernel_size=(2, 2))

self.sed_conv_block2 = nn.Sequential(

CustomCNN(in_channels=64, out_channels=128, kernel_size = 3),

nn.AvgPool2d(kernel_size=(2, 2)),

)

# self.cbam_sed2 = CBAM(gate_channels =128)

# nn.AvgPool2d(kernel_size=(2, 2)),

self.sed_conv_block3 = nn.Sequential(

CustomCNN(in_channels=128, out_channels=256, kernel_size = 3),

nn.AvgPool2d(kernel_size=(1, 2)),

)

# self.cbam_sed3 = CBAM(gate_channels =256)

# nn.AvgPool2d(kernel_size=(2, 2))

self.sed_conv_block4 = nn.Sequential(

CustomCNN(in_channels=256, out_channels=512, kernel_size = 3),

nn.AvgPool2d(kernel_size=(1, 2)),

)

# self.cbam_sed4 = CBAM(gate_channels =512)

# nn.AvgPool2d(kernel_size=(2, 2))

self.doa_conv_block1 = nn.Sequential(

CustomCNN(in_channels=self.in_channels, out_channels=64, kernel_size = 3),

nn.AvgPool2d(kernel_size=(2, 2)),

)

# self.cbam_doa1 = CBAM(gate_channels =64)

# nn.AvgPool2d(kernel_size=(2, 2))

self.doa_conv_block2 = nn.Sequential(

CustomCNN(in_channels=64, out_channels=128, kernel_size = 3),

nn.AvgPool2d(kernel_size=(2, 2)),

)

# self.cbam_doa2 = CBAM(gate_channels =128)

nn.AvgPool2d(kernel_size=(2, 2))

self.doa_conv_block3 = nn.Sequential(

CustomCNN(in_channels=128, out_channels=256, kernel_size = 3),

nn.AvgPool2d(kernel_size=(1, 2)),

)

self.doa_conv_block4 = nn.Sequential(

CustomCNN(in_channels=256, out_channels=512, kernel_size = 3),

nn.AvgPool2d(kernel_size=(1, 2)),

)

self.stitch = nn.ParameterList([

nn.Parameter(torch.FloatTensor(64, 2, 2).uniform_(0.1, 0.9)),

nn.Parameter(torch.FloatTensor(128, 2, 2).uniform_(0.1, 0.9)),

nn.Parameter(torch.FloatTensor(256, 2, 2).uniform_(0.1, 0.9)),

])

if self.pe_enable:

self.pe = PositionalEncoding(pos_len=100, d_model=512, pe_type='t', dropout=0.0)

self.sed_trans_track1 = torch.nn.ModuleList([

SLiMPerformerLayer(hidden_dim = 512, ffn_dim= 2048, n_heads=8, feature_type = feature_type,

compute_type =iter, on_gptln =True) for _ in range(n_layers)]

)

self.sed_trans_track2 = torch.nn.ModuleList([

SLiMPerformerLayer(hidden_dim = 512, ffn_dim= 2048, n_heads=8, feature_type = feature_type,

compute_type =iter, on_gptln =True) for _ in range(n_layers)]

)

self.doa_trans_track1 = torch.nn.ModuleList([

SLiMPerformerLayer(hidden_dim = 512, ffn_dim= 2048, n_heads=8, feature_type = feature_type,

compute_type =iter, on_gptln =True) for _ in range(n_layers)]

)

self.doa_trans_track2 = torch.nn.ModuleList([

SLiMPerformerLayer(hidden_dim = 512, ffn_dim= 2048, n_heads=8, feature_type = feature_type,

compute_type =iter, on_gptln =True) for _ in range(n_layers)]

)

self.fc_sed_track1 = nn.Linear(512, 14, bias=True)

self.fc_sed_track2 = nn.Linear(512, 14, bias=True)

self.fc_doa_track1 = nn.Linear(512, 3, bias=True)

self.fc_doa_track2 = nn.Linear(512, 3, bias=True)

self.final_act_sed = nn.Sequential() # nn.Sigmoid()

self.final_act_doa = nn.Tanh()

self.init_weight()

def init_weight(self):

init_layer(self.fc_sed_track1)

init_layer(self.fc_sed_track2)

init_layer(self.fc_doa_track1)

init_layer(self.fc_doa_track2)

def forward(self, x):

"""

x: waveform, (batch_size, num_channels, data_length)

"""

x_sed = x[:, :4]

x_doa = x

# cnn

x_sed = self.sed_conv_block1(x_sed)

x_doa = self.doa_conv_block1(x_doa)

x_sed = self.sed_conv_block4(x_sed)

x_doa = self.doa_conv_block4(x_doa)

x_sed = x_sed.mean(dim=3) # (N, C, T)

x_doa = x_doa.mean(dim=3) # (N, C, T)

#transformer

# if self.pe_enable:

# x_sed = self.pe(x_sed)

# if self.pe_enable:

# x_doa = self.pe(x_doa)

x_sed = self.sed_trans_track1(x_sed)

x_sed = self.sed_trans_track2(x_sed)

x_sed = self.doa_trans_track1(x_doa)

x_sed = self.doa_trans_track1(x_doa)

x_sed = x_sed.permute(2, 0, 1) # (T, N, C)

x_doa = x_doa.permute(2, 0, 1) # (T, N, C)

x_sed_1 = self.sed_trans_track1(x_sed).transpose(0, 1) # (N, T, C)

x_sed_2 = self.sed_trans_track2(x_sed).transpose(0, 1) # (N, T, C)

x_doa_1 = self.doa_trans_track1(x_doa).transpose(0, 1) # (N, T, C)

x_doa_2 = self.doa_trans_track2(x_doa).transpose(0, 1) # (N, T, C)

x_sed_1 = self.final_act_sed(self.fc_sed_track1(x_sed_1))

x_sed_2 = self.final_act_sed(self.fc_sed_track2(x_sed_2))

x_sed = torch.stack((x_sed_1, x_sed_2), 2)

x_doa_1 = self.final_act_doa(self.fc_doa_track1(x_doa_1))

x_doa_2 = self.final_act_doa(self.fc_doa_track2(x_doa_2))

x_doa = torch.stack((x_doa_1, x_doa_2), 2)

output = {

'sed': x_sed,

'doa': x_doa,

}

return output

raise NotImplementedError(f"Module [{type(self).name}] is missing the required "forward" function")

NotImplementedError: Module [ModuleList] is missing the required “forward” function