Hi,

I was trying to profile my code by following the instructions at https://pytorch.org/docs/stable/bottleneck.html

The code normally runs without errors with CUDA on a single GPU.

However, whenever I try to profile it with the command:

python -m torch.utils.bottleneck /path/to/source/script.py [args]

I get the following error:

    Traceback (most recent call last):
      File "/home/kowshik/anaconda3/lib/python3.6/runpy.py", line 193, in _run_module_as_main
        "__main__", mod_spec)
      File "/home/kowshik/anaconda3/lib/python3.6/runpy.py", line 85, in _run_code
        exec(code, run_globals)
      File "/home/kowshik/anaconda3/lib/python3.6/site-packages/torch/utils/bottleneck/__main__.py", line 234, in <module>
        main()
      File "/home/kowshik/anaconda3/lib/python3.6/site-packages/torch/utils/bottleneck/__main__.py", line 213, in main
        autograd_prof_cpu, autograd_prof_cuda = run_autograd_prof(code, globs)
      File "/home/kowshik/anaconda3/lib/python3.6/site-packages/torch/utils/bottleneck/__main__.py", line 107, in run_autograd_prof
        result.append(run_prof(use_cuda=True))
      File "/home/kowshik/anaconda3/lib/python3.6/site-packages/torch/utils/bottleneck/__main__.py", line 100, in run_prof
        with profiler.profile(use_cuda=use_cuda) as prof:
      File "/home/kowshik/anaconda3/lib/python3.6/site-packages/torch/autograd/profiler.py", line 180, in __enter__
        torch.autograd._enable_profiler(profiler_kind)

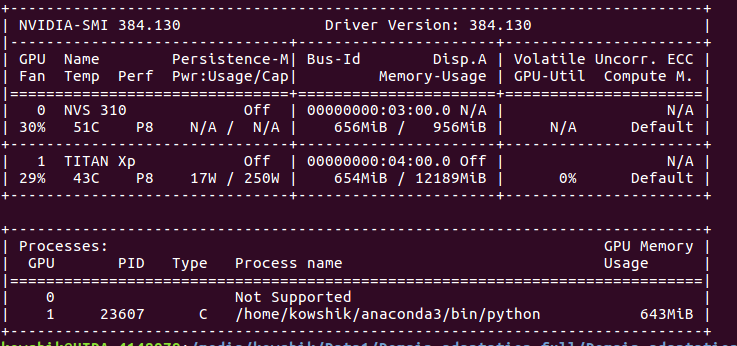

**RuntimeError: /opt/conda/conda-bld/pytorch_1544174967633/work/torch/csrc/autograd/profiler.h:72: all CUDA-capable devices are busy or unavailable**
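
For context, the CUDA runtime documentation describes this error (`cudaErrorDevicesUnavailable`) as often caused by a GPU set to an exclusive or prohibited compute mode, or by long-running kernels occupying the device. I have not confirmed that either is the cause here. The following is only a minimal sketch for checking the compute mode, assuming `nvidia-smi` is on the PATH; the helper names `parse_compute_modes` and `query_compute_modes` are hypothetical and not part of PyTorch.

```python
import shutil
import subprocess


def parse_compute_modes(csv_text):
    """Parse 'index, compute_mode' CSV lines into {gpu_index: compute_mode}."""
    modes = {}
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue
        index, mode = (field.strip() for field in line.split(",", 1))
        modes[int(index)] = mode
    return modes


def query_compute_modes():
    """Return {gpu_index: compute_mode}, or None if nvidia-smi is unavailable or fails."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,compute_mode", "--format=csv,noheader"],
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        universal_newlines=True,  # works on Python 3.6, as used in the traceback
    )
    if result.returncode != 0:
        return None
    return parse_compute_modes(result.stdout)


if __name__ == "__main__":
    modes = query_compute_modes()
    if modes is None:
        print("Could not query nvidia-smi")
    else:
        for index, mode in sorted(modes.items()):
            # Assumption: any mode other than "Default" may restrict how many
            # CUDA contexts can be created on the GPU at the same time.
            note = "" if mode == "Default" else "  <- non-default compute mode"
            print("GPU {}: {}{}".format(index, mode, note))
```

If a GPU reports a non-default mode, that would at least be consistent with the error above, though it does not prove it is the cause.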

How do I avoid this?