Hey, thanks for the reply. And yeah, I did try running it on the CPU, and the following error showed up. I'm still trying to work through it. Thanks for the help and patience.

RuntimeError Traceback (most recent call last)

Cell In[71], line 31
28 prog_bar.set_description(desc=f"Loss: {loss_value:.4f}")
29 return train_loss_list
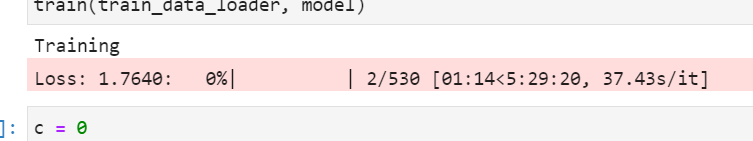
---> 31 train(train_data_loader, model)

Cell In[71], line 18, in train(train_data_loader, model)
16 images = list(image.to(DEVICE) for image in images)
17 targets = [{k: v.to(DEVICE) for k, v in t.items()} for t in targets]
---> 18 loss_dict = model(images, targets)
19 losses = sum(loss for loss in loss_dict.values())
20 loss_value = losses.item()

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)
1190 # If we don't have any hooks, we want to skip the rest of the logic in
1191 # this function, and just call forward.
1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
1193 or _global_forward_hooks or _global_forward_pre_hooks):
-> 1194 return forward_call(*input, **kwargs)
1195 # Do not call functions when jit is used
1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/.conda/envs/py/lib/python3.9/site-packages/torchvision/models/detection/generalized_rcnn.py:101, in GeneralizedRCNN.forward(self, images, targets)
94 degen_bb: List[float] = boxes[bb_idx].tolist()
95 torch._assert(
96 False,
97 "All bounding boxes should have positive height and width."
98 f" Found invalid box {degen_bb} for target at index {target_idx}.",
99 )
--> 101 features = self.backbone(images.tensors)
102 if isinstance(features, torch.Tensor):
103 features = OrderedDict([("0", features)])

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)
1190 # If we don't have any hooks, we want to skip the rest of the logic in
1191 # this function, and just call forward.
1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
1193 or _global_forward_hooks or _global_forward_pre_hooks):
-> 1194 return forward_call(*input, **kwargs)
1195 # Do not call functions when jit is used
1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/.conda/envs/py/lib/python3.9/site-packages/torchvision/models/detection/backbone_utils.py:58, in BackboneWithFPN.forward(self, x)
56 def forward(self, x: Tensor) -> Dict[str, Tensor]:
57 x = self.body(x)
---> 58 x = self.fpn(x)
59 return x

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)
1190 # If we don't have any hooks, we want to skip the rest of the logic in
1191 # this function, and just call forward.
1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
1193 or _global_forward_hooks or _global_forward_pre_hooks):
-> 1194 return forward_call(*input, **kwargs)
1195 # Do not call functions when jit is used
1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/.conda/envs/py/lib/python3.9/site-packages/torchvision/ops/feature_pyramid_network.py:196, in FeaturePyramidNetwork.forward(self, x)
194 inner_top_down = F.interpolate(last_inner, size=feat_shape, mode="nearest")
195 last_inner = inner_lateral + inner_top_down
--> 196 results.insert(0, self.get_result_from_layer_blocks(last_inner, idx))
198 if self.extra_blocks is not None:
199 results, names = self.extra_blocks(results, x, names)

File ~/.conda/envs/py/lib/python3.9/site-packages/torchvision/ops/feature_pyramid_network.py:169, in FeaturePyramidNetwork.get_result_from_layer_blocks(self, x, idx)
167 for i, module in enumerate(self.layer_blocks):
168 if i == idx:
--> 169 out = module(x)
170 return out

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)
1190 # If we don't have any hooks, we want to skip the rest of the logic in
1191 # this function, and just call forward.
1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
1193 or _global_forward_hooks or _global_forward_pre_hooks):
-> 1194 return forward_call(*input, **kwargs)
1195 # Do not call functions when jit is used
1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/container.py:204, in Sequential.forward(self, input)
202 def forward(self, input):
203 for module in self:
--> 204 input = module(input)
205 return input

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/module.py:1194, in Module._call_impl(self, *input, **kwargs)
1190 # If we don't have any hooks, we want to skip the rest of the logic in
1191 # this function, and just call forward.
1192 if not (self._backward_hooks or self._forward_hooks or self._forward_pre_hooks or _global_backward_hooks
1193 or _global_forward_hooks or _global_forward_pre_hooks):
-> 1194 return forward_call(*input, **kwargs)
1195 # Do not call functions when jit is used
1196 full_backward_hooks, non_full_backward_hooks = [], []

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/conv.py:463, in Conv2d.forward(self, input)
462 def forward(self, input: Tensor) -> Tensor:
--> 463 return self._conv_forward(input, self.weight, self.bias)

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/nn/modules/conv.py:459, in Conv2d._conv_forward(self, input, weight, bias)
455 if self.padding_mode != 'zeros':
456 return F.conv2d(F.pad(input, self._reversed_padding_repeated_twice, mode=self.padding_mode),
457 weight, bias, self.stride,
458 _pair(0), self.dilation, self.groups)
--> 459 return F.conv2d(input, weight, bias, self.stride,
460 self.padding, self.dilation, self.groups)

File ~/.conda/envs/py/lib/python3.9/site-packages/torch/utils/data/_utils/signal_handling.py:66, in _set_SIGCHLD_handler.<locals>.handler(signum, frame)
63 def handler(signum, frame):
64 # This following call uses `waitid` with WNOHANG from C side. Therefore,
65 # Python can still get and update the process status successfully.
---> 66 _error_if_any_worker_fails()
67 if previous_handler is not None:
68 assert callable(previous_handler)

RuntimeError: DataLoader worker (pid 10920) is killed by signal: Killed.