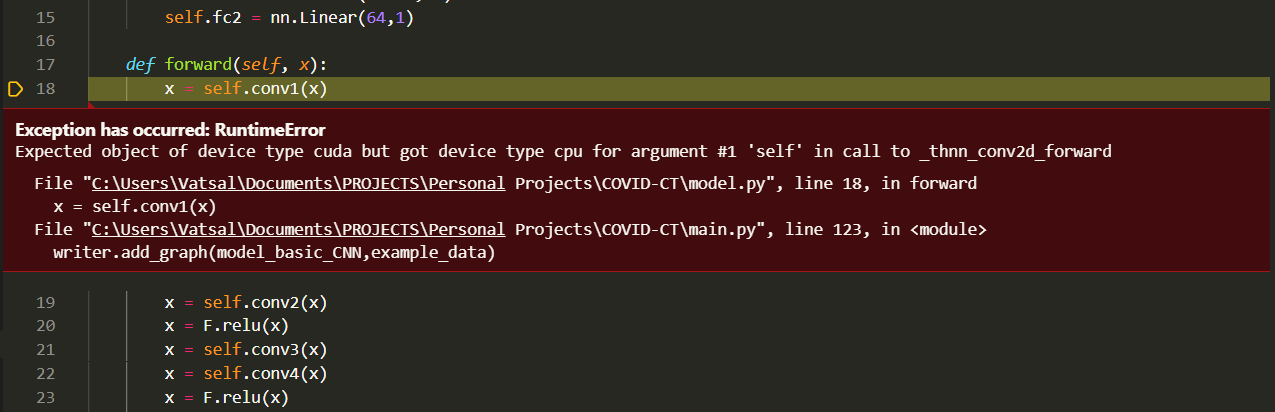

I have moved both the network and the input to the device, yet I still get an error.

The error goes away when I comment out writer.add_graph(model,example_data) in main.py.

    Exception has occurred: RuntimeError
    Expected object of device type cuda but got device type cpu for argument #1 'self' in call to _thnn_conv2d_forward
      File "C:\Users\Vatsal\Documents\PROJECTS\Personal Projects\COVID-CT\model.py", line 18, in forward
        x = self.conv1(x)
      File "C:\Users\Vatsal\Documents\PROJECTS\Personal Projects\COVID-CT\main.py", line 123, in <module>
        writer.add_graph(model_basic_CNN,example_data)
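A hedged note on the traceback above: the message says the weights of conv1 are on CUDA while its input is still on the CPU. One cause consistent with this, assuming the batch passed to add_graph was moved with a bare `.to(device)` call whose result was not kept, is that `Tensor.to()` returns a new tensor rather than modifying the tensor in place, whereas `nn.Module.to()` does move the module's parameters in place. The sketch below uses a random tensor and a single Conv2d as stand-ins (both are illustrative, not the actual data or model):

```python
import torch
from torch import nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-in for one batch from the data loader (shape is an assumption).
example_data = torch.randn(2, 3, 224, 224)

# Tensor.to() is not in-place: it returns a tensor on the target device,
# so the result has to be assigned back (or passed on directly).
example_data = example_data.to(device)

# nn.Module.to() moves the parameters in place and returns the module itself.
model = nn.Conv2d(3, 32, 4, stride=2).to(device)

# Both operands now live on the same device, so the forward pass succeeds.
output = model(example_data)
same_device = example_data.device == next(model.parameters()).device
```

If this is indeed the cause, assigning the result back before the add_graph call should be enough; the training loop is unaffected because it already uses the return value of `.to(device)`.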

Full Code Available through the LINK

The following implementation was used for model.py:

    import torch
    from torch import nn
    from torch.nn import functional as F

    class basic_CNN(nn.Module):
        """
        Basic CNN architecture deployed
        """
        def __init__(self):
            super(basic_CNN, self).__init__()
            self.conv1 = nn.Conv2d(3,32,4,stride=2)
            self.conv2 = nn.Conv2d(32,64,3,stride=2)
            self.conv3 = nn.Conv2d(64,128,3,stride=2)
            self.conv4 = nn.Conv2d(128,256,3,stride=2)
            self.fc1 = nn.Linear(43264,64)
            self.fc2 = nn.Linear(64,1)

        def forward(self, x):
            x = self.conv1(x)
            x = self.conv2(x)
            x = F.relu(x)
            x = self.conv3(x)
            x = self.conv4(x)
            x = F.relu(x)
            x = x.view(-1,self.num_flat_features(x))
            x = F.relu(self.fc1(x))
            x = torch.sigmoid(self.fc2(x))
            return x

        def num_flat_features(self, x):
            size = x.size()[1:]
            num_features = 1
            for s in size:
                num_features *= s
            return num_features
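As a sanity check on the 43264 input size of fc1, here is a small sketch of the arithmetic. It assumes 224x224 inputs (the crop size used in main.py) and the Conv2d defaults of no padding and dilation 1; the helper name `conv_out` is made up for illustration.

```python
def conv_out(size, kernel, stride, padding=0):
    """Output length of one spatial dimension of a Conv2d layer (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1

size = 224  # assumed input side length, from the 224x224 crops in main.py
for kernel, stride in [(4, 2), (3, 2), (3, 2), (3, 2)]:  # conv1 .. conv4
    size = conv_out(size, kernel, stride)  # 111, 55, 27, 13

flat_features = 256 * size * size  # conv4 has 256 output channels
print(size, flat_features)  # 13 43264
```

So the flattened size matches as long as the images really are 224x224; a different input size would require a different fc1.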

The following implementation was used for main.py:

    import torch
    import torchvision
    from DataLoader import *
    from model import *
    #from utils import *
    from torchvision import transforms
    from torch.utils.data import DataLoader
    from torch.utils.tensorboard import SummaryWriter
    import os
    import sys
    import warnings
    import numpy as np
    from sklearn.metrics import roc_auc_score, confusion_matrix

    log_path = r'C:\Users\Vatsal\Documents\PROJECTS\logs'
    writer = SummaryWriter(log_dir=log_path)

    #HYPER-PARAMETERS
    batchsize=16
    bs = 10
    lr = 1e-4

    device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")
    print(device)

    normalize = transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])

    train_transformer = transforms.Compose([
        transforms.Resize(256),
        transforms.RandomResizedCrop((224),scale=(0.5,1.0)),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        normalize
    ])

    val_transformer = transforms.Compose([
        transforms.Resize(224),
        transforms.CenterCrop(224),
        transforms.ToTensor(),
        normalize
    ])

    train_set = CovidCTDataset(root_dir=os.getcwd(), type='train', transform=train_transformer)
    val_set = CovidCTDataset(root_dir=os.getcwd(), type='val', transform=val_transformer)
    test_set = CovidCTDataset(root_dir=os.getcwd(), type='test', transform=val_transformer)

    print("Size of Train Set {}".format(train_set.__len__()))
    print("Size of Val Set {}".format(val_set.__len__()))
    print("Size of Test Set {}".format(test_set.__len__()))

    train_loader = DataLoader(train_set, batch_size=batchsize, drop_last=False, shuffle=True)
    val_loader = DataLoader(val_set, batch_size=batchsize, drop_last=False, shuffle=True)
    test_loader = DataLoader(test_set, batch_size=batchsize, drop_last=False, shuffle=False)

    def train(epoch, optimizer, criterion):
        model.train()
        train_loss = 0
        train_correct = 0
        for batch_index, batch_samples in enumerate(train_loader):
            data, target = batch_samples['img'].to(device), batch_samples['label'].to(device)
            target = target.view(-1,1)
            optimizer.zero_grad()
            #print((data).is_cuda)
            output = model(data)
            loss = criterion(output.float(), target.float())
            train_loss = train_loss + loss.item()
            loss.backward()
            optimizer.step()
        return model, train_loss

    def val(epoch, criterion):
        model.eval()
        # Don't update model
        val_loss=0
        with torch.no_grad():
            tpr_list = []
            fpr_list = []
            predlist=[]
            targetlist=[]
            # Predict
            for batch_index, batch_samples in enumerate(val_loader):
                data, target = batch_samples['img'].to(device), batch_samples['label'].to(device)
                target = target.view(-1,1)
                output = model(data)
                loss = criterion(output.float(), target.float())
                val_loss += loss.item()
                output = [round(int(x)) for x in output]
                targetcpu=target.long().cpu().numpy()
                predlist=np.append(predlist, np.array(output))
                targetlist=np.append(targetlist,targetcpu)
        return targetlist, predlist, val_loss

    # train
    bs = 10
    votenum = 10
    #warnings.filterwarnings('ignore')
    r_list = []
    p_list = []
    acc_list = []

    model = basic_CNN().cuda()
    model.to(device)
    print(next(model.parameters()).is_cuda)
    modelname = 'basic_CNN'

    criterion = torch.nn.BCELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=lr)

    examples = next(iter(train_loader))
    example_data = examples['img']
    example_label = examples['label']
    img_grid = torchvision.utils.make_grid(example_data)
    writer.add_image('Coivid Img',img_grid)
    example_data.to(device)
    writer.add_graph(model,example_data)

    total_epoch = 3000
    for epoch in range(1, total_epoch+1):
        train_loss = train(epoch=epoch, optimizer=optimizer, criterion=criterion)
        targetlist, predlist, val_loss = val(epoch=epoch, criterion=criterion)
        TN, FP, FN, TP = confusion_matrix(targetlist, predlist).ravel()
        p = TP / (TP + FP)
        r = TP / (TP + FN)
        F1 = 2 * r * p / (r + p)
        acc = (TP + TN) / (TP + TN + FP + FN)

        writer.add_scalar('train loss', np.asarray(train_loss[-1]), epoch)
        writer.add_scalar('val loss', np.array(val_loss) , epoch)
        writer.add_scalar('True Positive', TP, epoch)
        writer.add_scalar('False Positive', FP, epoch)
        writer.add_scalar('True Negative', TN, epoch)
        writer.add_scalar('False Negative', FN, epoch)
        writer.add_scalar('Accuracy', acc, epoch)
        writer.add_scalar('F1 score', F1, epoch)
        writer.add_scalar('Precision', p, epoch)
        writer.add_scalar('Recall', r, epoch)

        if epoch % votenum == 0:
            print('TP=',TP,'TN=',TN,'FN=',FN,'FP=',FP)
            print('Total Correct',TP+FP)
            print('precision',p)
            print('recall',r)
            print('F1',F1)
            print('acc',acc)
            print('\n The epoch is {}, average recall: {:.4f}, average precision: {:.4f},average F1: {:.4f}, average accuracy: {:.4f}'.format(
                epoch, r, p, F1, acc))

    writer.close()
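One more thing that may be worth checking in val(), separate from the device error: the line that turns the sigmoid outputs into predictions applies int() before round(). Since int() truncates toward zero, any probability below 1.0 becomes 0 before rounding happens. The sketch below uses made-up probabilities in plain Python (not actual model outputs) to compare that expression with a 0.5 threshold, which is an assumed cut-off:

```python
# Hypothetical sigmoid outputs for four validation samples.
probs = [0.08, 0.49, 0.51, 0.97]

# Same expression as in val(): int() truncates first, so round() sees only 0.
truncated = [round(int(p)) for p in probs]

# Thresholding at 0.5 (an assumed cut-off) keeps the positive predictions.
thresholded = [int(p >= 0.5) for p in probs]

print(truncated)    # [0, 0, 0, 0]
print(thresholded)  # [0, 0, 1, 1]
```

If every prediction is 0, TP and FP are both zero and the precision computed in the epoch loop divides by zero, so this could affect the logged metrics.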

Additionally, can anyone please verify what the dimensions of the last linear layer should be for binary classification? Should it be torch.nn.Linear(64,1), or do I need to change it to torch.nn.Linear(64,2)?
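For what it is worth, both layouts can express the same binary classifier, so the choice mostly depends on the loss. A single output followed by a sigmoid pairs with BCELoss, as in the code above. Two outputs would pair with CrossEntropyLoss, which takes raw logits (no sigmoid) and integer class labels. The sketch below shows the equivalence in plain Python: a sigmoid over one logit gives the same probability as a softmax over two logits whose difference is that logit. The value of `z` is arbitrary.

```python
import math

def sigmoid(z):
    """Probability of the positive class from a single logit."""
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    """Class probabilities from a list of logits (numerically stabilised)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

z = 1.7  # hypothetical logit, chosen only for illustration

p_one_output = sigmoid(z)              # Linear(64, 1) + sigmoid
p_two_outputs = softmax([0.0, z])[1]   # Linear(64, 2) + softmax, class 1

print(abs(p_one_output - p_two_outputs) < 1e-12)  # True
```

Under that reading, keeping Linear(64,1) with sigmoid and BCELoss is a reasonable setup, and switching to Linear(64,2) would also mean changing the loss and the label format.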

Any help is deeply appreciated!