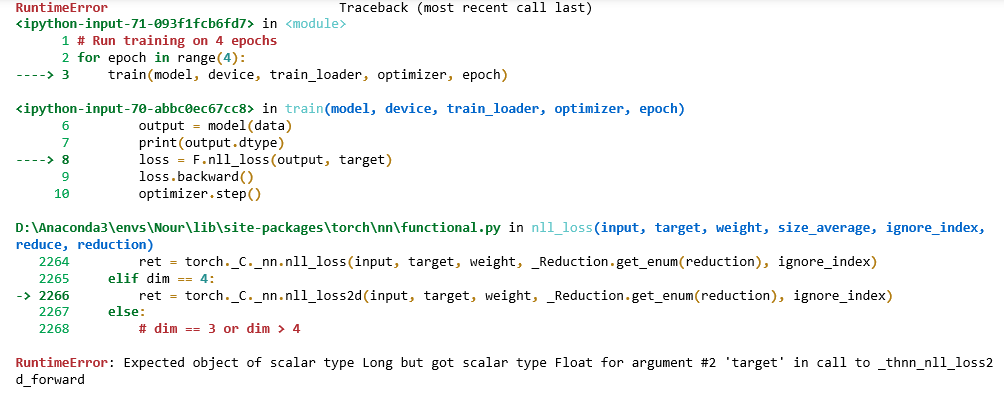

I am trying to implement super-resolution with a complex-valued neural network using the 91-image and Set5 datasets. I keep getting the following error at the loss function:

This is my code:

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torchvision import datasets, transforms
    from complexLayers import ComplexBatchNorm2d, ComplexConv2d, ComplexLinear
    from complexLayers import ComplexDropout2d, NaiveComplexBatchNorm2d
    from complexFunctions import complex_relu, complex_max_pool2d
    from datasets import TrainDataset, EvalDataset
    from torch.utils.data.dataloader import DataLoader

    batch_size = 64

    trans = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (1.0,))])

    # Raw strings keep the backslashes in the Windows paths from being read as escape sequences.
    train_set = TrainDataset(r'D:\complexPyTorch-master\SRCNN-pytorch-master\91-image_x2.h5')
    train_loader = DataLoader(dataset=train_set,
                              batch_size=batch_size,
                              shuffle=True,
                              num_workers=8,
                              pin_memory=True,
                              drop_last=True)

    test_set = EvalDataset(r'D:\complexPyTorch-master\SRCNN-pytorch-master\Set5_x2.h5')
    test_loader = DataLoader(dataset=test_set, batch_size=1)
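Before looking at the loss, it can help to check what one batch from the loader actually contains. The sketch below is only an illustration: it uses a hypothetical `TensorDataset` of random 33x33 patches as a stand-in, since the real shapes and dtypes depend on what `TrainDataset` returns.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in data: 16 low-res / high-res patch pairs (assumed shape).
lr_patches = torch.rand(16, 1, 33, 33)
hr_patches = torch.rand(16, 1, 33, 33)

loader = DataLoader(TensorDataset(lr_patches, hr_patches),
                    batch_size=4, shuffle=True, drop_last=True)

# Pull a single batch and inspect it before it reaches the model or the loss.
data, target = next(iter(loader))
print(data.shape, data.dtype)
print(target.shape, target.dtype)
```

Running the same two `print` calls on a batch from the real `train_loader` would show whether the targets are float images or integer class labels.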

    class ComplexNet(nn.Module):

        def __init__(self):
            super(ComplexNet, self).__init__()
            self.conv1 = ComplexConv2d(1, 64, kernel_size=9, padding=9 // 2)
            self.conv2 = ComplexConv2d(64, 32, kernel_size=5, padding=5 // 2)
            self.conv3 = ComplexConv2d(32, 1, kernel_size=5, padding=5 // 2)
            #self.relu = complex_relu()

        def forward(self, x):
            x = complex_relu(self.conv1(x))
            x = complex_relu(self.conv2(x))
            x = self.conv3(x)
            x = complex_relu(x)  # this should have been in init but it is placed here instead
            #x = self.fc2(x)
            x = x.abs()  # magnitude of the complex output
            #x = F.log_softmax(x)
            return x
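The forward pass ends with `x.abs()`, so the network returns a real tensor even though the layers work on complex values. A minimal sketch of that dtype round trip, using a random tensor as a stand-in for an input batch (the shape is an assumption):

```python
import torch

# Stand-in for a real-valued input batch (assumed shape).
x = torch.rand(2, 1, 8, 8)

# Casting to complex64 keeps the values as the real part; the imaginary part is zero.
z = x.type(torch.complex64)
print(z.dtype)

# abs() of a complex tensor is its magnitude, returned as a real tensor.
mag = z.abs()
print(mag.dtype)
```

This is why `print(output.dtype)` in the training loop reports a real dtype: the loss function receives an ordinary float tensor.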

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model = ComplexNet().to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=5e-3, momentum=0.9)

    def train(model, device, train_loader, optimizer, epoch):
        model.train()
        for batch_idx, (data, target) in enumerate(train_loader):
            data, target = data.to(device).type(torch.complex64), target.to(device)
            optimizer.zero_grad()
            output = model(data)
            print(output.dtype)
            loss = F.nll_loss(output, target)  # the error is raised here
            loss.backward()
            optimizer.step()
            if batch_idx % 100 == 0:
                print('Train Epoch: {:3} [{:6}/{:6} ({:3.0f}%)]\tLoss: {:.6f}'.format(
                    epoch,
                    batch_idx * len(data),
                    len(train_loader.dataset),
                    100. * batch_idx / len(train_loader),
                    loss.item())
                )

    for epoch in range(4):
        train(model, device, train_loader, optimizer, epoch)
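For reference, a minimal sketch of how the loss call behaves, assuming the dataset yields float image patches with the same shape as the network output. The tensors below are random stand-ins, not the real data. `F.nll_loss` is a classification loss that expects integer class indices as targets, so it rejects a float image, whereas a pixel-wise regression loss such as `F.mse_loss` accepts it. Whether this matches the error above depends on what the targets really look like.

```python
import torch
import torch.nn.functional as F

# Hypothetical stand-ins for one batch (shapes are assumptions).
output = torch.rand(4, 1, 33, 33, requires_grad=True)  # real magnitude from x.abs()
target = torch.rand(4, 1, 33, 33)                       # float high-resolution patch

# nll_loss wants integer class indices, so a float image target is rejected.
try:
    F.nll_loss(output, target)
    nll_failed = False
except (RuntimeError, ValueError, TypeError) as err:
    nll_failed = True
    print('nll_loss rejected the target:', err)

# A pixel-wise regression loss works on matching float tensors.
loss = F.mse_loss(output, target)
loss.backward()
print(loss.item())
```

In the training loop, this would correspond to replacing the `F.nll_loss(output, target)` call with `F.mse_loss(output, target)`, provided the output and target shapes match.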