Hi,

I am trying to set up an RNN capable of utilizing a GPU but packed_padded_sequence gives me a

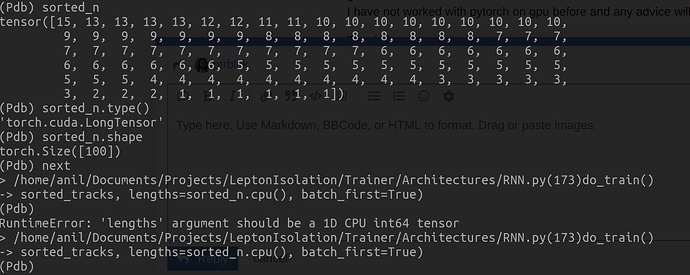

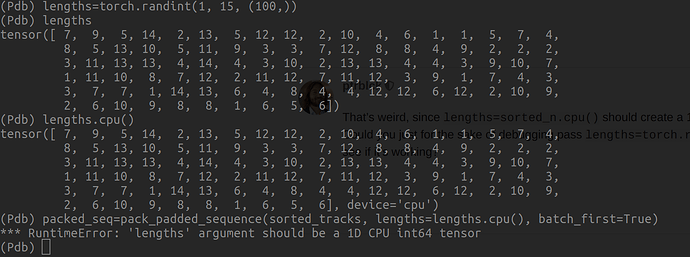

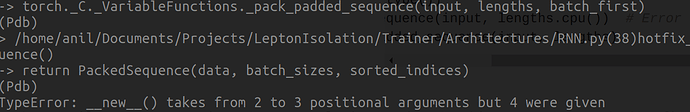

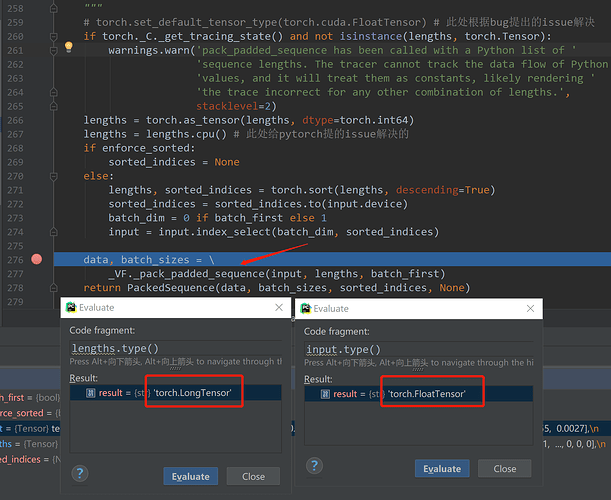

RuntimeError: 'lengths' argument should be a 1D CPU int64 tensor

Here is how I set up GPU computing:

```python
import argparse

import torch

parser = argparse.ArgumentParser(description='Trainer')
parser.add_argument('--disable-cuda', action='store_true',
                    help='Disable CUDA')
args = parser.parse_args()

args.device = None
if not args.disable_cuda and torch.cuda.is_available():
    args.device = torch.device('cuda')
    torch.set_default_tensor_type(torch.cuda.FloatTensor)
else:
    args.device = torch.device('cpu')
```

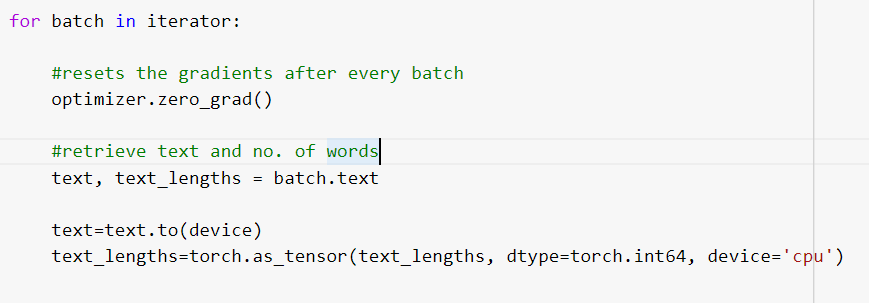
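For comparison, the same setup can be written without `torch.set_default_tensor_type`, so the global default stays on the CPU and only the model and the tensors explicitly moved with `.to(args.device)` end up on the GPU. This is a sketch: `select_device` and `parse_args` are hypothetical helper names, and the idea that the CUDA default tensor type is what pushes the lengths onto the GPU (for example when PyTorch converts a list or NumPy array internally) is an assumption, not something confirmed above.

```python
import argparse

import torch


def select_device(disable_cuda: bool = False) -> torch.device:
    # Use CUDA only when it is available and not disabled on the command line
    if not disable_cuda and torch.cuda.is_available():
        return torch.device('cuda')
    return torch.device('cpu')


def parse_args(argv=None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description='Trainer')
    parser.add_argument('--disable-cuda', action='store_true',
                        help='Disable CUDA')
    args = parser.parse_args(argv)
    # Only record the device; the default tensor type is left unchanged,
    # so the model and inputs are moved explicitly with .to(args.device)
    args.device = select_device(args.disable_cuda)
    return args
```

With this pattern, the model would be moved once with `model.to(args.device)`, and newly created tensors such as the list of lengths stay on the CPU unless they are moved on purpose.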

Here is the relevant part of the code:

```python
import torch


def Tensor_length(track):
    """Finds the length of the non-zero tensor"""
    return int(torch.nonzero(track).shape[0] / track.shape[1])

# ...
```
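For reference, the per-sample loop over `Tensor_length` can also be done for the whole batch at once. A minimal sketch, assuming `tracks` is a zero-padded tensor of shape `(batch, max_len, n_features)` and that a time step counts as real if any of its features is nonzero (slightly different from `Tensor_length`, which divides the number of nonzero entries by the number of features). `batch_lengths` is a hypothetical name. The result is an int64 tensor on the same device as `tracks`, so it would still need `.cpu()` before packing.

```python
import torch


def batch_lengths(tracks: torch.Tensor) -> torch.Tensor:
    # tracks: (batch, max_len, n_features), zero-padded along dim 1
    # A time step is treated as real if any of its features is nonzero
    real_steps = (tracks != 0).any(dim=2)  # bool, shape (batch, max_len)
    # Summing a bool tensor gives int64 counts, one per sequence
    return real_steps.sum(dim=1)
```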

```python
from torch.nn.utils.rnn import pack_padded_sequence


def forward(self, tracks, leptons):
    self.rnn.flatten_parameters()

    # list of event lengths
    n_tracks = torch.tensor([Tensor_length(tracks[i])
                             for i in range(len(tracks))])
    sorted_n, indices = torch.sort(n_tracks, descending=True)
    sorted_tracks = tracks[indices].to(args.device)
    sorted_leptons = leptons[indices].to(args.device)
    # import pdb; pdb.set_trace()

    output, hidden = self.rnn(pack_padded_sequence(sorted_tracks,
                                                   lengths=sorted_n.cpu().numpy(),
                                                   batch_first=True))  # this gives the error

    combined_out = torch.cat((sorted_leptons, hidden[-1]), dim=1)
    out = self.fc(combined_out)  # add lepton data to the matrix
    out = self.softmax(out)
    return out, indices  # passing indices for reorganizing truth
```

I have tried everything from casting `sorted_n` to a long tensor to passing it as a list, but I always get the same error.
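Going by the error message, `pack_padded_sequence` wants `lengths` as a 1D int64 tensor on the CPU even when the padded input lives on the GPU. A minimal sketch (runnable on the CPU) that sorts and packs with the lengths moved to the CPU explicitly as a tensor, rather than as a NumPy array or list that PyTorch has to convert itself. `pack_sorted` is a hypothetical helper, and whether this alone clears the error while the default tensor type is set to CUDA is an untested assumption.

```python
import torch
from torch.nn.utils.rnn import pack_padded_sequence


def pack_sorted(tracks: torch.Tensor, lengths: torch.Tensor):
    # Sort by length, longest first (required with the default enforce_sorted=True)
    sorted_n, indices = torch.sort(lengths, descending=True)
    sorted_tracks = tracks[indices]
    # Keep lengths as a 1D int64 tensor on the CPU, whatever device tracks is on
    cpu_lengths = sorted_n.to(device='cpu', dtype=torch.int64)
    packed = pack_padded_sequence(sorted_tracks, cpu_lengths, batch_first=True)
    # indices can be used afterwards to restore the original batch order
    return packed, indices
```

Passing `enforce_sorted=False` to `pack_padded_sequence` would let PyTorch handle the sorting internally instead of doing it by hand.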

I have not worked with PyTorch on a GPU before, and any advice will be greatly appreciated.

Thanks!