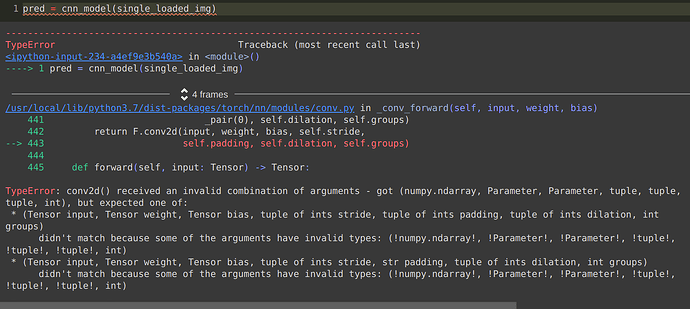

I'm trying to predict clothing categories from Fashion-MNIST images. I referred to code from here and built the whole model, with the architecture shown in the attached picture (also printed below). To get image pixels for prediction once the model is trained, I used the code below. The loaded `images` are of size (10000, 784), so I reshaped them into a new `numpy.ndarray` of shape (10000, 28, 28, 1). I took the first image from this array (of type `ndarray`). When I tried to convert it to a tensor with `transforms.ToTensor()` and use it for prediction, I ran into dimension issues. So I referred to this discussion, but now I'm facing a new error.

I'm stuck at the last step and can't use the model to predict the clothing category of a single image. I've tried every solution I could find, so please suggest a fix and point out where I might be going wrong.

```python
# For Fashion-MNIST images: loads the pixels as a (10000, 784) array (from here)
from mnist import MNIST

mndata = MNIST('./FashionMNIST/raw/')
images, labels = mndata.load_testing()

# Tried this code from the discussion, but it's throwing another error
single_loaded_img = test_pred_data_loader.dataset.data[0]
single_loaded_img = single_loaded_img[None, None]
pred = cnn_model(single_loaded_img)
```
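One thing worth checking in the snippet above: in torchvision's `FashionMNIST`, `dataset.data` holds the raw images as a `uint8` tensor of shape (N, 28, 28) with none of the dataset's transforms applied, so `[None, None]` gives the right shape but the wrong dtype and scale for a float model. A minimal sketch of the conversion, using a random stand-in tensor in place of the real `test_pred_data_loader.dataset.data`:

```python
import torch

# Stand-in for test_pred_data_loader.dataset.data: raw uint8 images of
# shape (N, 28, 28). Random values are used here for illustration only.
data = torch.randint(0, 256, (10000, 28, 28), dtype=torch.uint8)

raw = data[0][None, None]   # shape (1, 1, 28, 28), still uint8
print(raw.dtype)            # torch.uint8: Conv2d weights are float32

# Convert to float and rescale to [0, 1], as ToTensor() does for uint8 input.
x = raw.float().div(255)

# If training also used transforms.Normalize(mean, std), apply the same
# values here as well, e.g. x = (x - mean) / std.

print(x.shape, x.dtype)     # torch.Size([1, 1, 28, 28]) torch.float32
```

Alternatively, indexing the dataset itself (`img, label = test_pred_data_loader.dataset[0]`) applies the dataset's `transform`, so with `ToTensor()` as the transform, `img.unsqueeze(0)` already has the shape and dtype used during training.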

Architecture (printed model):

```
Network(
  (conv1): Conv2d(1, 6, kernel_size=(5, 5), stride=(1, 1))
  (batchN1): BatchNorm2d(6, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (conv2): Conv2d(6, 12, kernel_size=(5, 5), stride=(1, 1))
  (fc1): Linear(in_features=192, out_features=120, bias=True)
  (batchN2): BatchNorm1d(120, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (fc2): Linear(in_features=120, out_features=60, bias=True)
  (out): Linear(in_features=60, out_features=10, bias=True)
)
```
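A sketch of a module matching the printed layers, followed by single-image prediction. The layer definitions come from the printout; the `forward()` ordering (ReLU and 2×2 max-pooling placement) is an assumption, chosen so the shapes work out: 28 → 24 after `conv1`, 12 after pooling, 8 after `conv2`, 4 after pooling, and 12 × 4 × 4 = 192 matches `fc1`'s `in_features`. It also shows why `cnn_model.eval()` matters here: in training mode, `BatchNorm1d` cannot compute batch statistics from a batch of one sample and raises a `ValueError`.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Fashion-MNIST label order (indices 0-9).
CLASSES = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
           'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


class Network(nn.Module):
    # Layers mirror the printed architecture; forward() order is assumed.
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(1, 6, kernel_size=5)
        self.batchN1 = nn.BatchNorm2d(6)
        self.conv2 = nn.Conv2d(6, 12, kernel_size=5)
        self.fc1 = nn.Linear(12 * 4 * 4, 120)  # 192 input features
        self.batchN2 = nn.BatchNorm1d(120)
        self.fc2 = nn.Linear(120, 60)
        self.out = nn.Linear(60, 10)

    def forward(self, t):
        t = F.max_pool2d(F.relu(self.conv1(t)), 2)  # (N, 6, 12, 12)
        t = self.batchN1(t)
        t = F.max_pool2d(F.relu(self.conv2(t)), 2)  # (N, 12, 4, 4)
        t = t.flatten(start_dim=1)                   # (N, 192)
        t = self.batchN2(F.relu(self.fc1(t)))
        t = F.relu(self.fc2(t))
        return self.out(t)                           # (N, 10) logits


cnn_model = Network()          # in practice, the trained model
x = torch.rand(1, 1, 28, 28)   # one preprocessed float image in [0, 1]

# Training mode: BatchNorm1d rejects a batch containing a single sample.
cnn_model.train()
try:
    cnn_model(x)
    train_mode_failed = False
except ValueError as err:
    train_mode_failed = True
    print("train mode:", err)

# eval() makes BatchNorm use its running statistics; no_grad() skips autograd.
cnn_model.eval()
with torch.no_grad():
    logits = cnn_model(x)
pred_class = logits.argmax(dim=1).item()
print(logits.shape, pred_class, CLASSES[pred_class])
```

With an untrained stand-in model the predicted class is arbitrary; with the trained `cnn_model`, the same `eval()` / `no_grad()` / `argmax` steps apply unchanged.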