Hi all,

I’m fairly new to both ANNs and PyTorch, so please excuse any rookie mistakes.

I’ve built a simple NN for regression (predicting prices for a specific stock), with a single dropout layer after the first hidden layer. (Note that I managed to over-complicate my model with a high number of neurons without any sign of overfitting, which I suppose is due to my large number of samples.)

All my modules are vanilla (no custom forward or backward passes, since this was my first attempt anyway), and the model looks roughly like this:

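```python
import torch

# NUMBER_OF_FEATURES, h1n, h2n, h3n (layer widths) and device are defined earlier in my script
net = torch.nn.Sequential(
    torch.nn.Linear(NUMBER_OF_FEATURES, h1n),  # first hidden layer
    torch.nn.LeakyReLU(),
    torch.nn.Dropout(0.15),                    # the only dropout layer, p = 0.15
    torch.nn.Linear(h1n, h2n),                 # second hidden layer
    torch.nn.LeakyReLU(),
    torch.nn.Linear(h2n, h3n),                 # third hidden layer
    torch.nn.LeakyReLU(),
    torch.nn.Linear(h3n, 1),                   # single regression output (price)
).to(device)
```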

I use `MSELoss` as the loss function and Adam as the optimizer.
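A minimal sketch of one training epoch with this setup, assuming a standard `DataLoader` of `(features, target)` batches. The helper name `train_one_epoch`, the layer sizes, the learning rate and the random data are hypothetical placeholders, not the real values; the point is that `net.train()` keeps dropout active while the weights are being fitted.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

def train_one_epoch(net, loader, optimizer, loss_fn, device):
    """One pass over `loader` (hypothetical helper); returns the mean batch loss."""
    net.train()  # dropout active: random units are zeroed on every forward pass
    total = 0.0
    for xb, yb in loader:
        xb, yb = xb.to(device), yb.to(device)
        optimizer.zero_grad()          # clear gradients from the previous step
        loss = loss_fn(net(xb), yb)    # MSE between predictions and targets
        loss.backward()
        optimizer.step()
        total += loss.item()
    return total / len(loader)

# Hypothetical sizes and random data, only to make the sketch runnable.
device = torch.device("cpu")
NUMBER_OF_FEATURES, h1n, h2n, h3n = 8, 64, 32, 16
net = torch.nn.Sequential(
    torch.nn.Linear(NUMBER_OF_FEATURES, h1n), torch.nn.LeakyReLU(), torch.nn.Dropout(0.15),
    torch.nn.Linear(h1n, h2n), torch.nn.LeakyReLU(),
    torch.nn.Linear(h2n, h3n), torch.nn.LeakyReLU(),
    torch.nn.Linear(h3n, 1),
).to(device)
x = torch.randn(256, NUMBER_OF_FEATURES)
y = torch.randn(256, 1)  # shape (N, 1) to match the network output
loader = DataLoader(TensorDataset(x, y), batch_size=32, shuffle=True)
optimizer = torch.optim.Adam(net.parameters(), lr=1e-3)
epoch_loss = train_one_epoch(net, loader, optimizer, torch.nn.MSELoss(), device)
print(f"mean training loss: {epoch_loss:.4f}")
```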

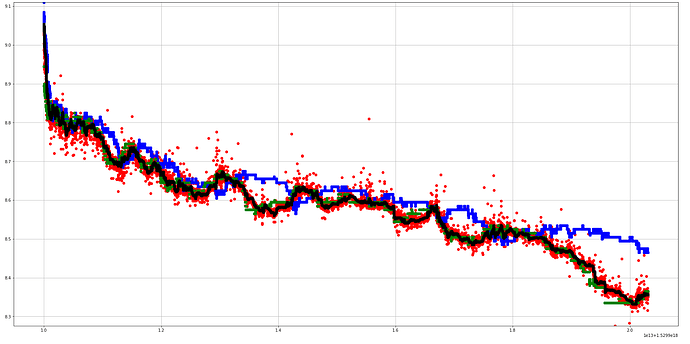

My issue is that when I mistakenly left my model in training mode, the predictions on my dedicated test set were extraordinarily good (MSE loss ~0.0001X, Pearson correlation coefficient ~0.99X). When I noticed the mistake and switched the model to evaluation mode, however, the predictions went way off, with a clear shift away from the targets.

Excuse the missing legend on the plot above; the colors are:

- Blue: actual current price
- Green: actual future price, i.e. my target
- Red: predicted future price
- Black: EMA-smoothed predicted future price

The plot shows the predictions made in training mode.

I don’t have a plot for eval mode, but picture almost exactly the same prediction curve shifted upward by about 0.3 (y → y + 0.3), which is a lot and unacceptable for my use case.
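One way to quantify the difference between the two modes is to score the same test set with dropout on and off and look at the per-sample gap between the predictions. The sketch below assumes PyTorch 1.10 or later (for `torch.corrcoef`); `compare_modes`, the small stand-in network and the synthetic test data are hypothetical. A large mean gap with a small standard deviation would indicate a near-constant vertical shift like the one described above.

```python
import torch

@torch.no_grad()
def compare_modes(net, x, y):
    """Score net on (x, y) with dropout active ("train") and inactive ("eval").

    Returns ({mode: (mse, pearson_r)}, mean gap, std of gap), where gap = eval - train."""
    was_training = net.training
    preds, scores = {}, {}
    target = y.squeeze(-1)
    for mode in ("train", "eval"):
        net.train(mode == "train")     # net.train(False) is equivalent to net.eval()
        pred = net(x).squeeze(-1)
        mse = torch.nn.functional.mse_loss(pred, target).item()
        r = torch.corrcoef(torch.stack([pred, target]))[0, 1].item()
        preds[mode], scores[mode] = pred, (mse, r)
    net.train(was_training)            # restore the caller's mode
    gap = preds["eval"] - preds["train"]
    return scores, gap.mean().item(), gap.std().item()

# Hypothetical stand-ins for the real model and test set.
torch.manual_seed(0)
net = torch.nn.Sequential(
    torch.nn.Linear(8, 64), torch.nn.LeakyReLU(), torch.nn.Dropout(0.15),
    torch.nn.Linear(64, 1),
)
net.eval()
x_test = torch.randn(500, 8)
y_test = x_test.sum(dim=1, keepdim=True)
scores, gap_mean, gap_std = compare_modes(net, x_test, y_test)
for mode, (mse, r) in scores.items():
    print(f"{mode}: MSE={mse:.4f}, Pearson r={r:.3f}")
print(f"eval - train gap: mean={gap_mean:.4f}, std={gap_std:.4f}")
```

The train-mode numbers come from a single stochastic forward pass, so they change from run to run unless the seed is fixed.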

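I’ve searched the forums and the documentation, and my understanding is that, since my model has no batch-norm layers, the only module affected by `net.eval()` is the dropout layer: in eval mode all neurons are used again. PyTorch implements "inverted" dropout, scaling the surviving activations by 1/(1 - p) during training, so in eval mode the layer simply passes every activation through unchanged and no weights are adjusted.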
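A quick check of what `torch.nn.Dropout` does in each mode, assuming a standalone dropout layer with p = 0.15 applied to a tensor of ones (the tensor size and the seed are arbitrary choices for illustration):

```python
import torch

torch.manual_seed(0)
p = 0.15
drop = torch.nn.Dropout(p)
x = torch.ones(100_000)

drop.train()
y_train = drop(x)
kept = y_train[y_train != 0]                                      # units that survived
print(f"fraction zeroed: {(y_train == 0).float().mean().item():.3f}")  # close to p
print(f"value of kept units: {kept[0].item():.4f}")               # 1 / (1 - p) = 1.1765
print(f"mean output: {y_train.mean().item():.3f}")                # close to 1.0

drop.eval()
y_eval = drop(x)
print(torch.equal(y_eval, x))  # True: dropout is the identity in eval mode
```

Because the scaling happens during training, the expected output of the layer is the same in both modes; only the per-sample noise disappears in eval mode.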

I’ve tried the suggestions I found for similar issues (e.g. not using dropout at all, in which case my model overfitted, and using weight decay instead of dropout, in which case my results were much worse), as well as many other setups, without success: every attempt ended in either overfitting or poor results. What options do I have? Are there any known issues with the dropout module? Is this behavior expected? Would using my model in training mode for actual predictions be an option, or would I be leaking information?
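For the weight-decay experiment mentioned above, a minimal sketch of the dropout-free variant might look like this. The helper `build_net`, the layer sizes and the hyperparameters (`lr=1e-3`, `weight_decay=1e-4`) are hypothetical. `torch.optim.Adam` applies `weight_decay` as an L2 penalty added to the gradient; `torch.optim.AdamW` is the decoupled alternative.

```python
import torch

def build_net(n_features, h1n, h2n, h3n, dropout_p=None):
    """Hypothetical helper: same layer stack as above, dropout after layer 1 is optional."""
    layers = [torch.nn.Linear(n_features, h1n), torch.nn.LeakyReLU()]
    if dropout_p is not None:
        layers.append(torch.nn.Dropout(dropout_p))
    layers += [
        torch.nn.Linear(h1n, h2n), torch.nn.LeakyReLU(),
        torch.nn.Linear(h2n, h3n), torch.nn.LeakyReLU(),
        torch.nn.Linear(h3n, 1),
    ]
    return torch.nn.Sequential(*layers)

# Weight decay instead of dropout (hypothetical sizes and hyperparameters).
net = build_net(8, 64, 32, 16, dropout_p=None)
# Adam adds weight_decay * w to each gradient; torch.optim.AdamW would decouple it instead.
optimizer = torch.optim.Adam(net.parameters(), lr=1e-3, weight_decay=1e-4)

# Without dropout, train and eval mode give identical outputs.
x = torch.randn(4, 8)
net.train()
out_train = net(x)
net.eval()
out_eval = net(x)
print(torch.equal(out_train, out_eval))
```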

I would highly appreciate any help.

Thanks in advance