I registered a torch::nn::Sequential module and stored the result in a member variable called toPatchEmbedding:

toPatchEmbedding = register_module("toPatchEmbedding", torch::nn::Sequential(torch::nn::LayerNorm(torch::nn::LayerNormOptions({256})), torch::nn::Linear(256, 512), torch::nn::LayerNorm(torch::nn::LayerNormOptions({512}))));

Later, in the forward function, I call torch::Tensor x = toPatchEmbedding->forward(input); where input is a tensor of shape (16, 14, 14, 256).

For some reason the forward call to LayerNorm throws an exception:

"Exception: Exception 0xe06d7363 encountered at address 0x7ffd8e384b2c"

I don't know how to debug it.
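One thing I could still try is catching the exception in C++ to read its message instead of the raw code. As far as I know, 0xe06d7363 is the generic code Windows reports for any C++ exception thrown by MSVC-compiled code, and libtorch errors (c10::Error) derive from std::exception, so what() should carry the actual message. A minimal sketch, where describe_failure is a hypothetical helper name of mine and the libtorch call appears only in a comment:

```cpp
#include <exception>
#include <stdexcept>
#include <string>
#include <utility>

// Hypothetical helper: runs a callable and returns the message of any
// std::exception it throws, or an empty string if it completes normally.
template <typename F>
std::string describe_failure(F&& call) {
    try {
        std::forward<F>(call)();
        return "";
    } catch (const std::exception& e) {
        // c10::Error derives from std::exception, so it lands here.
        return e.what();
    } catch (...) {
        return "unknown non-std exception";
    }
}

// Intended use inside forward() (assumes libtorch and <iostream>):
//   torch::Tensor x;
//   std::string msg = describe_failure([&] { x = toPatchEmbedding->forward(input); });
//   if (!msg.empty()) std::cerr << msg << std::endl;
```

If the message turns out to be empty or unhelpful, that would at least tell me the failure is not an ordinary libtorch shape or device check.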

According to the Python documentation for LayerNorm (PyTorch 2.1 documentation), normalized_shape makes the function operate over the last n dimensions of the tensor, where n is the length of the normalized_shape list. The last dimension of my input (256) matches the {256} I pass to the first LayerNorm, but it still does not work.
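To check that I am reading the documentation correctly, here is how I understand the computation when the normalized shape has a single entry, written as plain C++ rather than libtorch. It is only a sketch: layer_norm_last_dim is a name I made up, the learnable weight and bias are left out, and eps is assumed to be the documented default of 1e-5.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Plain C++ reference, not libtorch: normalizes each contiguous group of
// `last_dim` values (the last dimension of a row-major tensor) to zero mean
// and unit variance. Learnable weight and bias are omitted.
std::vector<float> layer_norm_last_dim(const std::vector<float>& data,
                                       std::size_t last_dim,
                                       float eps = 1e-5f) {
    if (last_dim == 0 || data.size() % last_dim != 0) {
        throw std::invalid_argument("data size must be a multiple of last_dim");
    }
    std::vector<float> out(data.size());
    for (std::size_t start = 0; start < data.size(); start += last_dim) {
        // Mean of this group.
        float mean = 0.0f;
        for (std::size_t i = 0; i < last_dim; ++i) mean += data[start + i];
        mean /= static_cast<float>(last_dim);

        // Biased variance (divide by N), as LayerNorm uses.
        float var = 0.0f;
        for (std::size_t i = 0; i < last_dim; ++i) {
            const float d = data[start + i] - mean;
            var += d * d;
        }
        var /= static_cast<float>(last_dim);

        const float inv_std = 1.0f / std::sqrt(var + eps);
        for (std::size_t i = 0; i < last_dim; ++i) {
            out[start + i] = (data[start + i] - mean) * inv_std;
        }
    }
    return out;
}
```

With my input of shape (16, 14, 14, 256) and a normalized shape of {256}, this would mean 16 * 14 * 14 = 3136 independent groups of 256 values each, so as far as I can tell the shapes are compatible.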

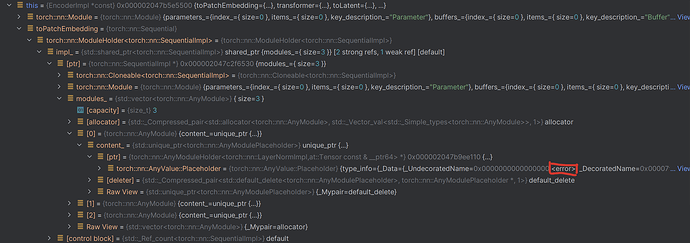

I also noticed something when running the program through the debugger: once the Sequential is initialized, some of the module's member variables have a value of so I think the problem is with how the Sequential is initialized.

In case it is relevant, this is running on the GPU.

Thank you for helping.