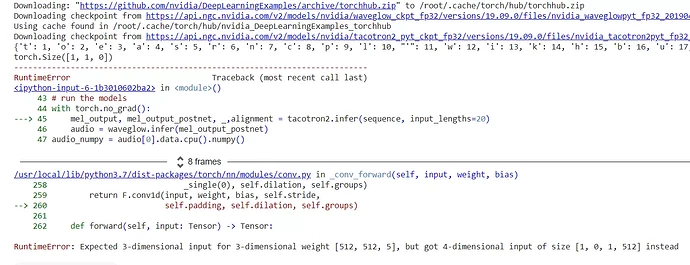

I have tried multiple approaches but unable to resolve this issue. I have trained my TTS model but after running the inference code I’m facing this issue. I have done implementing reshape and unsqueeze method but nothing worked. The model is a trained tacotron2 model for generating audio samples. However my input size is already of 3 dimension as shown in the ss attached. Please help me resolve

this!!!

(embedding): Embedding(148, 512)

(encoder): Encoder(

(convolutions): ModuleList(

(0): Sequential(

(0): ConvNorm(

(conv): Conv1d(512, 512, kernel_size=(5,), stride=(1,), padding=(2,))

)

(1): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(1): Sequential(

(0): ConvNorm(

(conv): Conv1d(512, 512, kernel_size=(5,), stride=(1,), padding=(2,))

)

(1): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

(2): Sequential(

(0): ConvNorm(

(conv): Conv1d(512, 512, kernel_size=(5,), stride=(1,), padding=(2,))

)

(1): BatchNorm1d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)

)

)

(lstm): LSTM(512, 256, batch_first=True, bidirectional=True)

Actual Code:

waveglow_path =

torch.hub.load('nvidia/DeepLearningExamples:torchhub',

'nvidia_waveglow')

#waveglow = torch.load(waveglow_path,

map_location=torch.device("cpu")) # waveglow_pt is the path to the

.pth file

#waveglow = torch.load(waveglow_path)

waveglow = waveglow_path.to('cuda')

waveglow.eval()

#waveglow.cpu().eval()

tacotron2 = torch.hub.load('nvidia/DeepLearningExamples:torchhub',

'nvidia_tacotron2')

model_path = '/content/TTS_Nigerian'

#tacotron2 = torch.load(model_path,

map_location=torch.device("cpu"))

#tacotron2 = torch.load(model_path)

tacotron2.load_state_dict(torch.load(model_path)['state_dict'])

print(tacotron2.state_dict)

tacotron2 = tacotron2.to('cuda')

tacotron2.eval()

tk = Tokenizer()

text = "Now please click on the start button or say 'start' to

proceed"

tk.fit_on_texts(text)

sequence = np.array(tk.texts_to_sequences([text]))[None, :]

#sequence = sequence.astype(int)

sequence = torch.from_numpy(sequence).to(device='cuda', dtype =

torch.int64)

print(sequence.shape)

torch.squeeze(sequence,[1,3])

with torch.no_grad():

mel_output, mel_output_postnet, _,alignment = tacotron2.infer(sequence, input_lengths=20)

audio = waveglow.infer(mel_output_postnet)

audio_numpy = audio[0].data.cpu().numpy()

sampling_rate = 22050

write("audio.wav", sampling_rate, audio_numpy)

from IPython.display import Audio

Audio(audio_numpy, rate=sampling_rate)