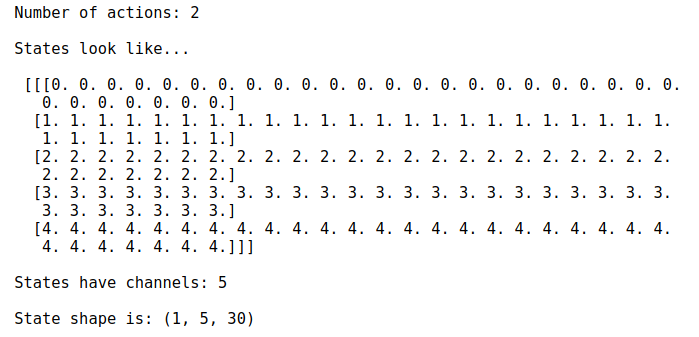

I’m trying to pass a state with 5 channels and 30 data points (time steps) through a 1D conv net followed by a dense network. I think my problem is in how I reshape my data, because I keep getting errors saying a layer received more or fewer inputs than expected. Does anyone have an idea what the problem could be? Here is a blog post on the kind of data I am working with, except that I am using it in a reinforcement learning environment.

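Here is dummy data with 5 channels:

```python
import numpy as np

# Five channels of 30 time steps each; channel k is filled with the value k
a = np.zeros(30) + 0
b = np.zeros(30) + 1
c = np.zeros(30) + 2
d = np.zeros(30) + 3
e = np.zeros(30) + 4

encoded_array = np.column_stack((a, b, c, d, e))  # shape (30, 5): time steps x channels
state = np.transpose(encoded_array)               # shape (5, 30): channels x time steps
state = state.reshape(1, 5, 30)                   # add a batch dimension -> (1, 5, 30)
```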

It has a shape of (1, 5, 30) and looks like this…
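For reference, `nn.Conv1d` expects a batched input of shape `(batch, channels, length)`, here `(1, 5, 30)`, as a `torch.Tensor` whose dtype matches the layer weights (float32 by default). The post does not show how the NumPy state reaches the network, so this is a minimal sketch assuming it is converted with `torch.from_numpy(...).float()`. It uses `np.stack`, which is equivalent to the `column_stack` + `transpose` above.

```python
import numpy as np
import torch

# Rebuild the dummy state: 5 channels, each holding 30 time steps.
channels = [np.zeros(30) + k for k in range(5)]
state = np.stack(channels)            # shape (5, 30): one row per channel
state = state.reshape(1, 5, 30)       # add the batch dimension -> (1, 5, 30)

# np.zeros creates float64 arrays, while Conv1d weights are float32 by default,
# so cast before the forward pass (assumed conversion step).
state_t = torch.from_numpy(state).float()

print(state_t.shape, state_t.dtype)   # torch.Size([1, 5, 30]) torch.float32
```

If agent code later adds another batch dimension with `.unsqueeze(0)` to a state that already has one, the input becomes 4-D, which `Conv1d` rejects.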

Here is my network:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
import numpy as np


class QNetwork(nn.Module):
    def __init__(self, state_size, action_size, seed, conv1=64, conv2=64, fc1_units=128, fc2_units=8):
        super(QNetwork, self).__init__()
        self.seed = torch.manual_seed(seed)
        # Input: (batch, 5 channels, 30 time steps)
        self.conv1 = nn.Conv1d(in_channels=5, out_channels=conv1, kernel_size=2)      # -> (batch, conv1, 29)
        self.conv2 = nn.Conv1d(in_channels=conv1, out_channels=conv2, kernel_size=2)  # -> (batch, conv2, 28)
        self.mp = nn.MaxPool1d(2)  # defined but not used in forward()
        # 1792 = 64 channels * 28 time steps after conv2 (assumes conv2=64 and a 30-step input)
        self.fc1 = nn.Linear(1792, fc1_units)
        self.fc2 = nn.Linear(fc1_units, fc2_units)
        self.fc3 = nn.Linear(fc2_units, action_size)

    def forward(self, x):
        # x = torch.randn(1, 5, 30)  # test input
        in_size = x.size(0)  # batch size
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        x = x.view(in_size, -1)  # flatten to (batch, 1792)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        return self.fc3(x)
```
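One way to check the hard-coded `1792` in `fc1` is to trace the sequence length through the conv layers, both with the output-length formula from the `torch.nn.Conv1d` documentation and with an actual forward pass. This sketch rebuilds standalone layers with the same configuration as `QNetwork` (default `conv1=64`, `conv2=64`); `conv1d_out_len` is a hypothetical helper written for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

def conv1d_out_len(length, kernel_size, stride=1, padding=0, dilation=1):
    # Output-length formula from the torch.nn.Conv1d documentation.
    return (length + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1

# Arithmetic for the layers above: 30 -> 29 -> 28 time steps, 64 channels.
l1 = conv1d_out_len(30, kernel_size=2)   # 29
l2 = conv1d_out_len(l1, kernel_size=2)   # 28
flat = 64 * l2                           # 1792, the in_features of fc1

# Cross-check empirically with layers of the same configuration.
conv1 = nn.Conv1d(in_channels=5, out_channels=64, kernel_size=2)
conv2 = nn.Conv1d(in_channels=64, out_channels=64, kernel_size=2)
with torch.no_grad():
    x = torch.zeros(1, 5, 30)
    x = F.relu(conv2(F.relu(conv1(x))))
print(x.shape)                           # torch.Size([1, 64, 28])
print(x.view(x.size(0), -1).shape[1])    # 1792
```

So `1792` matches a `(batch, 5, 30)` input as long as `self.mp` stays unused; applying `MaxPool1d(2)` after `conv2` would halve the length to 14 and `fc1` would then need `64 * 14 = 896` inputs. Any other window length or channel count also changes this number.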

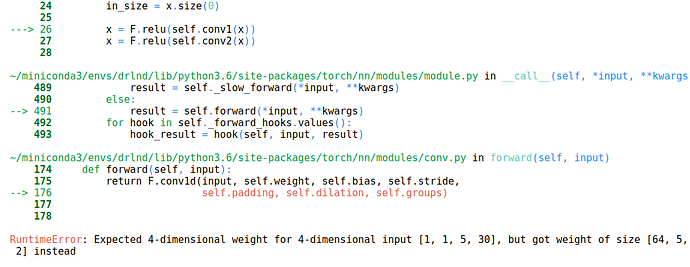

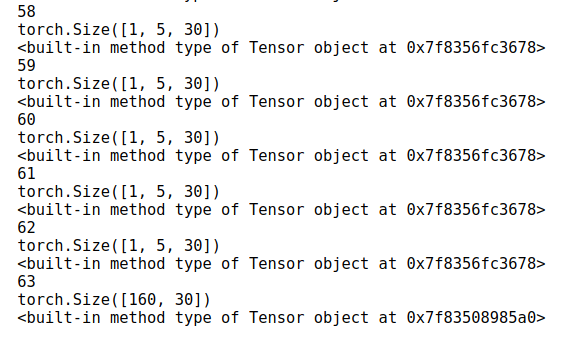

Errors I am getting