File "main1.py", line 221, in <module>

reid_trainer.train(epoch, train_loader)

File "/app/train.py", line 44, in train

self._forward()

File "/app/train.py", line 76, in _forward

score, feat = self.model(self.data)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

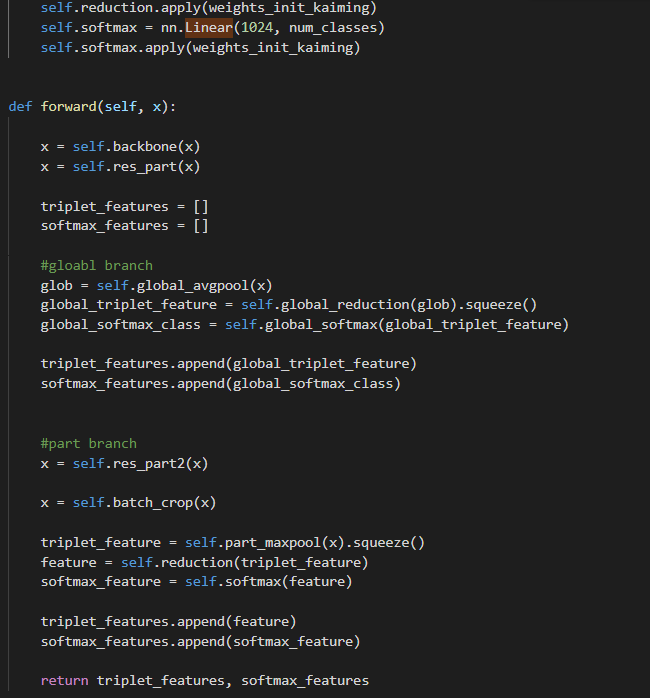

File "/app/model.py", line 125, in forward

feature = self.reduction(triplet_feature)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/container.py", line 92, in forward

input = module(input)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 489, in __call__

result = self.forward(*input, **kwargs)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/batchnorm.py", line 60, in forward

self._check_input_dim(input)

File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/batchnorm.py", line 243, in _check_input_dim

.format(input.dim()))

ValueError: expected 4D input (got 2D input)

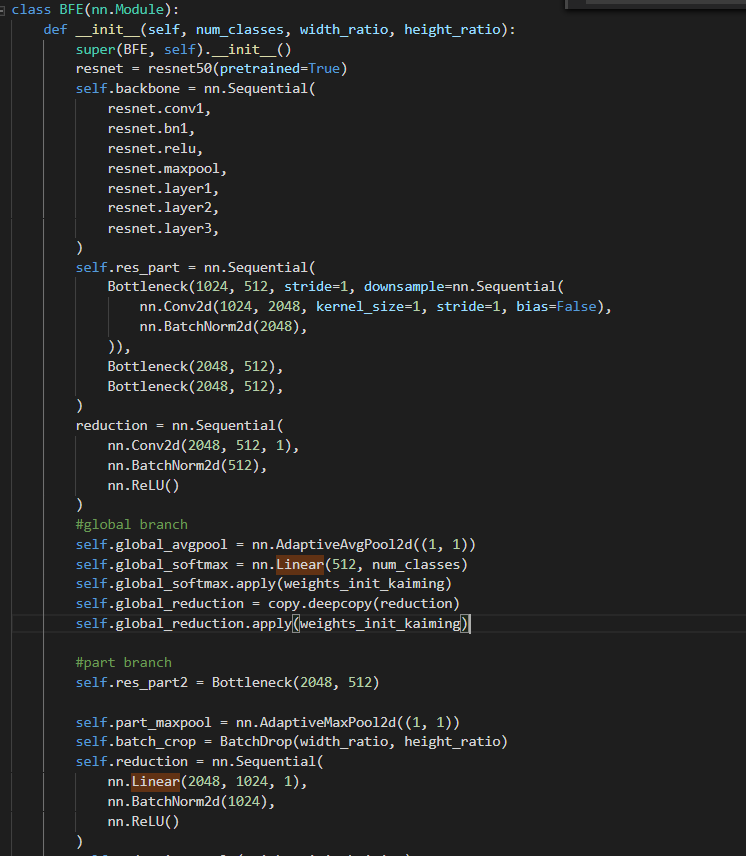

I built my model based on the GitHub repository daizuozhuo/batch-dropblock-network, the official source code of "Batch DropBlock Network for Person Re-identification and Beyond" (ICCV 2019).

The problem is that, even though I trained only with 224x224 images (the fixed ImageNet input size), the error log above comes up. Which part of the model should I fix?

Can anybody tell me where the problem is? Thank you.
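The traceback ends in `_check_input_dim` in `batchnorm.py` with "expected 4D input (got 2D input)", which is the check a 2D batch-norm layer applies to its input. Below is a minimal sketch of how that can arise and one possible way around it. It assumes `self.reduction` is an `nn.Sequential` that passes a 2D `(batch, channels)` tensor into `nn.BatchNorm2d`. The layer sizes and the names `reduction_2d` and `reduction_1d` are hypothetical and are not taken from the actual `model.py`.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for self.reduction; the real layers and sizes may differ.
reduction_2d = nn.Sequential(
    nn.Linear(2048, 512),
    nn.BatchNorm2d(512),  # requires a 4D input of shape (N, C, H, W)
    nn.ReLU(),
)

# A pooled and flattened feature is 2D: (batch, channels).
triplet_feature = torch.randn(8, 2048)

try:
    reduction_2d(triplet_feature)
    error_message = None
except ValueError as exc:
    # BatchNorm2d rejects the 2D output of the Linear layer.
    error_message = str(exc)
print(error_message)

# One possible fix under the same assumption: BatchNorm1d accepts (N, C) input.
reduction_1d = nn.Sequential(
    nn.Linear(2048, 512),
    nn.BatchNorm1d(512),
    nn.ReLU(),
)
feature = reduction_1d(triplet_feature)
print(feature.shape)
```

If the feature is meant to stay 4D, the alternative would be to keep the spatial dimensions (for example, by not flattening before the reduction) rather than swapping the layer. Which of the two applies depends on what `triplet_feature` looks like at line 125 of `model.py`.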