This is my model:

def make_residual_fc(input,R=8,k=2):
    in_channel=input.shape[1]
    out_channel=int(in_channel/R)
    fc1=nn.Linear(in_channel,out_channel)
    fc_list=[]
    for i in range(k):
        fc=nn.Linear(out_channel,2*in_channel)
        fc_list.append(fc)
    fc2=nn.ModuleList(fc_list)
    return fc1,fc2

class residual(nn.Module):
    def __init__(self,R=8,k=2):
        super(residual, self).__init__()
        self.avg=nn.AdaptiveAvgPool2d((1,1))
        self.relu=nn.ReLU(inplace=True)
        self.R=R
        self.k=k

    def forward(self,x):
        x=self.avg(x)
        fc1,fc2=make_residual_fc(x,self.R,self.k)
        x=torch.squeeze(x)
        x=fc1(x)
        x=self.relu(x)
        result_list=[]
        for i in range(self.k):
            result=fc2[i](x)
            result=2*torch.sigmoid(result)-1
            result_list.append(result)
        return result_list

class Dynamic_relu_b(nn.Module):
    def __init__(self,R=8,k=2):
        super(Dynamic_relu_b, self).__init__()
        self.lambda_alpha=1
        self.lambda_beta=0.5
        self.R=R
        self.k=k
        self.init_alpha=torch.zeros(self.k)
        self.init_beta=torch.zeros(self.k)
        self.init_alpha[0]=1
        self.init_beta[0]=1
        for i in range(1,k):
            self.init_alpha[i]=0
            self.init_beta[i]=0
        self.residual=residual(self.R,self.k)

    def forward(self,input):
        delta=self.residual(input)
        in_channel=input.shape[1]
        bs=input.shape[0]
        alpha=torch.zeros((self.k,bs,in_channel))
        beta=torch.zeros((self.k,bs,in_channel))
        for i in range(self.k):
            for j,c in enumerate(range(0,in_channel*2,2)):
                alpha[i,:,j]=delta[i][:,c]
                beta[i,:,j]=delta[i][:,c+1]
        alpha1=alpha[0]
        beta1=beta[0]
        max_result=self.dynamic_function(alpha1,beta1,input,0)
        for i in range(1,self.k):
            alphai=alpha[i]
            betai=beta[i]
            result=self.dynamic_function(alphai,betai,input,i)
            max_result=torch.max(max_result,result)
        return max_result

    def dynamic_function(self,alpha,beta,x,k):
        init_alpha=self.init_alpha[k]
        init_beta=self.init_beta[k]
        alpha=init_alpha+self.lambda_alpha*alpha
        beta=init_beta+self.lambda_beta*beta
        bs=x.shape[0]
        channel=x.shape[1]
        results=torch.zeros_like(x)
        for i in range(bs):
            for c in range(channel):
                results[i,c,:,:]=x[i,c]*alpha[i,c]+beta[i,c]
        return results

image=torch.rand((4,20,100,100))
image=image.cuda()
act=Dynamic_relu_b()
act(image)

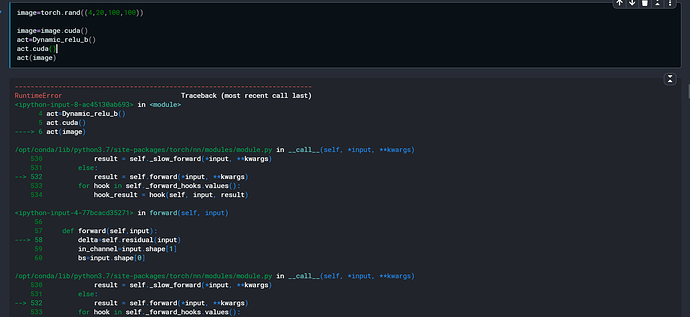

This is the error:

RuntimeError                              Traceback (most recent call last)
<ipython-input-7-436962e32e77> in <module>
      3 image=image.cuda()
      4 act=Dynamic_relu_b()
----> 5 act(image)

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
    530             result = self._slow_forward(*input, **kwargs)
    531         else:
--> 532             result = self.forward(*input, **kwargs)
    533         for hook in self._forward_hooks.values():
    534             hook_result = hook(self, input, result)

<ipython-input-6-77bcacd35271> in forward(self, input)
     56
     57     def forward(self,input):
---> 58         delta=self.residual(input)
     59         in_channel=input.shape[1]
     60         bs=input.shape[0]

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
    530             result = self._slow_forward(*input, **kwargs)
    531         else:
--> 532             result = self.forward(*input, **kwargs)
    533         for hook in self._forward_hooks.values():
    534             hook_result = hook(self, input, result)

<ipython-input-6-77bcacd35271> in forward(self, x)
     24         fc1,fc2=make_residual_fc(x,self.R,self.k)
     25         x=torch.squeeze(x)
---> 26         x=fc1(x)
     27         x=self.relu(x)
     28         result_list=[]

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in __call__(self, *input, **kwargs)
    530             result = self._slow_forward(*input, **kwargs)
    531         else:
--> 532             result = self.forward(*input, **kwargs)
    533         for hook in self._forward_hooks.values():
    534             hook_result = hook(self, input, result)

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/linear.py in forward(self, input)
     85
     86     def forward(self, input):
---> 87         return F.linear(input, self.weight, self.bias)
     88
     89     def extra_repr(self):

/opt/conda/lib/python3.7/site-packages/torch/nn/functional.py in linear(input, weight, bias)
   1368     if input.dim() == 2 and bias is not None:
   1369         # fused op is marginally faster
-> 1370         ret = torch.addmm(bias, input, weight.t())
   1371     else:
   1372         output = input.matmul(weight.t())

RuntimeError: Expected object of device type cuda but got device type cpu for argument #1 'self' in call to _th_addmm

I printed the device of `x` just before the `fc1(x)` call, and it was on cuda!

So why does this error happen?
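
A minimal sketch of that device check, extended to the layer's own weights as well as the input. This is only an illustration under assumptions: `report_devices` is a hypothetical helper (not part of PyTorch), the shapes are made up, and the standalone `nn.Linear` stands in for the `fc1` that `make_residual_fc` builds inside `forward`. It falls back to the CPU when CUDA is not available.

```python
import torch
import torch.nn as nn


def report_devices(layer: nn.Module, x: torch.Tensor) -> dict:
    """Collect the device of the input and of every parameter of `layer`."""
    devices = {"input": x.device}
    for name, param in layer.named_parameters():
        devices[name] = param.device
    return devices


# Use the GPU when present; otherwise the sketch still runs on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
x = torch.rand(4, 20, device=device)

# A layer constructed on the fly gets its weight and bias on the CPU by default,
# regardless of where the input tensor lives.
fc1 = nn.Linear(20, 2)
before = report_devices(fc1, x)

# Moving the layer explicitly places its parameters on the input's device.
fc1 = fc1.to(x.device)
after = report_devices(fc1, x)

out = fc1(x)
print("before:", before)
print("after: ", after)
print("output shape:", tuple(out.shape))
```

On a CUDA machine, `before` would be expected to show the input on `cuda:0` while `weight` and `bias` are on `cpu`, which matches the `addmm` argument named in the error above (`bias` is passed first). Printing only the device of `x` would not reveal this.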