Hi!

I am following this example, and it works as written: https://medium.com/coinmonks/an-introduction-to-pytorch-by-working-on-the-moons-dataset-using-neural-networks-369b1ac6ccad

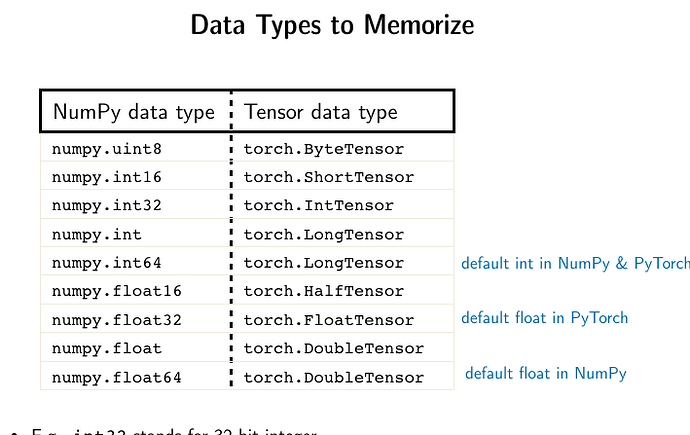

When I try to use `nn.CrossEntropyLoss()` instead of the hand-written formula, I get this error: `Expected object of type torch.LongTensor but found type torch.FloatTensor for argument #2 'target'`.
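
For context, here is a minimal sketch of the shapes and dtypes `nn.CrossEntropyLoss` works with when the targets are class indices. The tensor values are made up for illustration and are not the moons data; the names `logits`, `targets` and `float_labels` are hypothetical.

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()

# Hypothetical batch: 4 samples, 2 classes, one raw score (logit) per class.
logits = torch.tensor([[2.0, -1.0],
                       [0.5, 0.3],
                       [-1.2, 1.7],
                       [0.0, 0.0]])

# Class-index targets are integers (torch.long) with shape (N,).
targets = torch.tensor([0, 1, 1, 0], dtype=torch.long)

loss = criterion(logits, targets)  # scalar tensor, averaged over the batch

# Float 0/1 labels (e.g. built from a NumPy array) can be converted with .long().
float_labels = torch.tensor([0.0, 1.0, 1.0, 0.0])
same_loss = criterion(logits, float_labels.long())
```

Note that with `output_size = 1` the output has a single class column, so a label of 1 would be out of range for this loss; it needs one output column per class (two here).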

My changes to the original code are marked with `**` on both sides (** text **):

    import torch
    import torch.nn as nn
    import matplotlib.pyplot as plt

    input_size = 2
    hidden_size = 3  # randomly chosen
    output_size = 1  # we want it to return a number that can be used to calculate the difference from the actual number

    class NeuralNetwork():
        def __init__(self, input_size, hidden_size, output_size):
            # weights
            self.W1 = torch.randn(input_size, hidden_size, requires_grad=True)
            self.W2 = torch.randn(hidden_size, hidden_size, requires_grad=True)
            self.W3 = torch.randn(hidden_size, output_size, requires_grad=True)

            # Add bias
            self.b1 = torch.randn(hidden_size, requires_grad=True)
            self.b2 = torch.randn(hidden_size, requires_grad=True)
            self.b3 = torch.randn(output_size, requires_grad=True)

        def forward(self, inputs):
            z1 = inputs.mm(self.W1).add(self.b1)
            a1 = 1 / (1 + torch.exp(-z1))
            z2 = a1.mm(self.W2).add(self.b2)
            a2 = 1 / (1 + torch.exp(-z2))
            output = a2.mm(self.W3).add(self.b3)
            **# output = nn.LogSoftmax(z3)**
            return output
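
A side note on the commented-out `nn.LogSoftmax(z3)` line: `nn.LogSoftmax` is a module, so it is constructed first (with a `dim` argument) and then called on a tensor. The sketch below uses made-up scores, not the moons data, and the values in `z3` and `targets` are hypothetical. It also shows that `nn.CrossEntropyLoss` applies log-softmax to raw scores itself, so a separate log-softmax step should not be needed with that loss.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Hypothetical raw scores for 3 samples and 2 classes.
z3 = torch.tensor([[1.0, -1.0],
                   [0.2, 0.4],
                   [-2.0, 3.0]])
targets = torch.tensor([0, 1, 1])  # int64 class indices

# Construct the module with the class dimension, then call it on the tensor.
log_softmax = nn.LogSoftmax(dim=1)
log_probs = log_softmax(z3)

# Functional form of the same operation.
log_probs_fn = F.log_softmax(z3, dim=1)

# CrossEntropyLoss on raw scores matches NLLLoss on log-probabilities.
ce = nn.CrossEntropyLoss()(z3, targets)
nll = nn.NLLLoss()(log_probs, targets)
```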

    epochs = 10000
    learning_rate = 0.005

    model = NeuralNetwork(input_size, hidden_size, output_size)

    inputs = torch.tensor(X, dtype=torch.float)
    labels = torch.tensor(y, dtype=torch.float)

    # store all the loss values
    losses = []

    **criterion = nn.CrossEntropyLoss()**

    for epoch in range(epochs):
        # forward function
        output = model.forward(inputs)

        # BinaryCrossEntropy formula
        **loss = criterion(output, labels)**
        **#loss = -((labels * torch.log(output)) + (1 - labels) * torch.log(1 - output)).sum()**
        # print(loss.item)

        # log the loss so we can plot it later
        losses.append(loss.item())

        # calculate the gradients of the weights with respect to the loss
        loss.backward()

        # adjust the weights based on the previously calculated gradients
        model.W1.data -= learning_rate * model.W1.grad
        model.W2.data -= learning_rate * model.W2.grad
        model.W3.data -= learning_rate * model.W3.grad
        model.b1.data -= learning_rate * model.b1.grad
        model.b2.data -= learning_rate * model.b2.grad
        model.b3.data -= learning_rate * model.b3.grad

        # clear the gradients so they won't accumulate
        model.W1.grad.zero_()
        model.W2.grad.zero_()
        model.W3.grad.zero_()
        model.b1.grad.zero_()
        model.b2.grad.zero_()
        model.b3.grad.zero_()

    #print("Final loss: ", losses[-1])
    plt.plot(losses)
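
If the goal is to keep `output_size = 1` and float labels, one option might be `nn.BCEWithLogitsLoss` rather than `nn.CrossEntropyLoss`. This is only a sketch under that assumption, not something taken from the tutorial: `logits` is a hypothetical stand-in for the network's raw output, and the labels are made up. It compares the built-in loss with the hand-written binary cross-entropy formula applied to sigmoid probabilities.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in for the network's raw output (no sigmoid), shape (N, 1).
logits = torch.randn(8, 1, requires_grad=True)

# Float 0/1 labels, reshaped to (N, 1) so they match the output's shape.
labels = torch.tensor([0, 1, 1, 0, 1, 0, 0, 1], dtype=torch.float).view(-1, 1)

# Hand-written binary cross-entropy on sigmoid probabilities, summed over samples.
probs = torch.sigmoid(logits)
manual = -(labels * torch.log(probs) + (1 - labels) * torch.log(1 - probs)).sum()

# Built-in version: applies the sigmoid internally and accepts float targets.
criterion = nn.BCEWithLogitsLoss(reduction="sum")
builtin = criterion(logits, labels)

# Gradients reach `logits` here the same way they would reach the weights.
builtin.backward()
```

The reshape matters: `nn.BCEWithLogitsLoss` expects the target to have the same shape as the input, and in the hand-written formula a `(N,)` label tensor would broadcast against a `(N, 1)` output into an `(N, N)` result.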

Thank you!