I trained a detectron2 model on some data that I plan to use, but unfortunately the team I’m working with requires that I use other software that they have already implemented (it’s a long story). Anyway, I now have to convert this model for use in MVTec HALCON. The issue is that HALCON gives the following error when I try to load the ONNX export of the detectron2 model that I trained:

Unhandled program exception:

HALCON operator error

while calling ‘read_dl_model’ in procedure ‘main’ line: 4.

Error occurred while reading an ONNX model (HALCON error code: 7801)

Extended error code: 5:

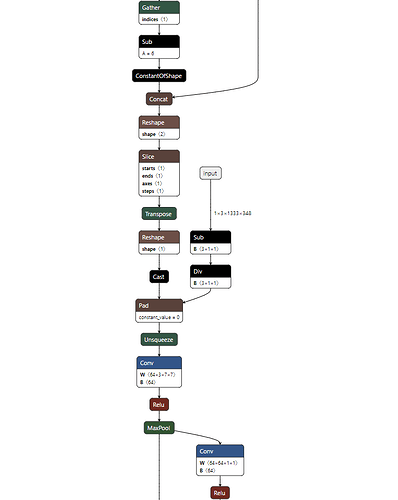

Shape dimension for graph input must be 4

My understanding of this error is that I need to convert the detectron2 model to ONNX before calling the model.eval() method, since that call removes the batch_size dimension from the input requirements of the detectron2 model.

I imagine this isn’t common, but I was curious whether anyone has had any experience with this and could provide any tips. I can’t figure it out, and I can’t find anything online relating to it.

ADDITIONAL INFO: The batch_size that I trained on is 1 (the images are massive), so maybe there is a way I can export the model in the typical way and then just unsqueeze the input? Open to any ideas.
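Roughly what I have in mind for the unsqueeze idea is a thin wrapper that takes a 4D (1, C, H, W) tensor and hands the 3D image to the model, so the exported graph input would have four dimensions. This is an untested sketch under assumptions: `BatchOfOneWrapper` and `StandInModel` are hypothetical names, the stand-in replaces whatever traceable form of the detectron2 model is actually exported, and I don’t know whether HALCON would accept the rest of the graph even if the input shape check passes.

```python
import torch
from torch import nn


class BatchOfOneWrapper(nn.Module):
    """Accept a 4D (1, C, H, W) tensor and pass a 3D (C, H, W) tensor to the wrapped model."""

    def __init__(self, inner: nn.Module):
        super().__init__()
        self.inner = inner

    def forward(self, batched_image: torch.Tensor) -> torch.Tensor:
        # Drop the leading batch dimension; only meaningful for batch size 1.
        return self.inner(batched_image.squeeze(0))


class StandInModel(nn.Module):
    """Hypothetical placeholder for the traceable detectron2 model; expects (C, H, W)."""

    def forward(self, image: torch.Tensor) -> torch.Tensor:
        return image.mean(dim=0)  # collapses channels, giving (H, W)


wrapped = BatchOfOneWrapper(StandInModel()).eval()
dummy = torch.zeros(1, 3, 64, 64)  # 4D input, batch size 1
with torch.no_grad():
    result = wrapped(dummy)
print(tuple(result.shape))  # (64, 64)

# The export would then be traced with the 4D dummy input, for example:
# torch.onnx.export(wrapped, (dummy,), "model_4d.onnx", input_names=["image"])
```

The export call is left commented out because I haven’t verified it against the real detectron2 model; the file name and input name there are placeholders.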