I misunderstood your question: "If you want to get the flattened output, wouldn't a view operation just work?"

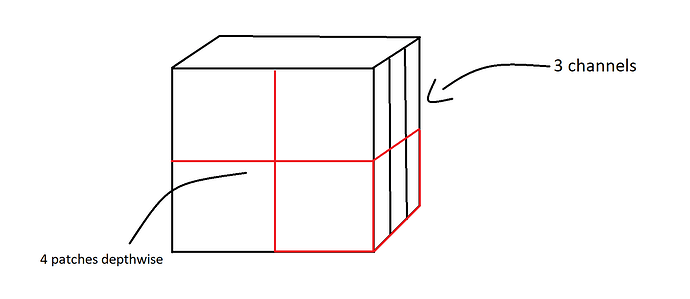

My intention is to first extract the patches and then flatten each patch. The first code you sent was almost exactly what I needed; the only problem was that it scrambled the order of the values within each patch.

Update:

I think I found the missing modification to your code:

>>> x = torch.arange(3 * 8 * 8).reshape((3, 8, 8))

>>> out = x.unfold(2, 4, 4).unfold(1, 4, 4)

>>> out = torch.transpose(out, 3, 4)  # swapping the last two dims gives me my desired output

>>> out = out.permute(1, 2, 0, 3, 4)

>>> out = out.contiguous().view(out.size(0)*out.size(1), -1)

>>> out[0]

tensor([ 0, 1, 2, 3, 8, 9, 10, 11, 16, 17, 18, 19, 24, 25,

26, 27, 64, 65, 66, 67, 72, 73, 74, 75, 80, 81, 82, 83,

88, 89, 90, 91, 128, 129, 130, 131, 136, 137, 138, 139, 144, 145,

146, 147, 152, 153, 154, 155])

which matches the output of the code I posted earlier:

>>> res[0]

tensor([ 0, 1, 2, 3, 8, 9, 10, 11, 16, 17, 18, 19, 24, 25,

26, 27, 64, 65, 66, 67, 72, 73, 74, 75, 80, 81, 82, 83,

88, 89, 90, 91, 128, 129, 130, 131, 136, 137, 138, 139, 144, 145,

146, 147, 152, 153, 154, 155])
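In case it helps anyone else, here is a minimal sketch that wraps the steps above in a function and checks every flattened patch against plain slicing. The function names `flat_patches` and `flat_patches_hw` are my own, not from the earlier posts, and I am assuming a `(C, H, W)` input whose height and width are covered exactly by the patch size and stride. The second variant unfolds the height dimension first; since `unfold` appends the window dimension at the end, that ordering should make the transpose unnecessary.

```python
import torch


def flat_patches(x, size, stride):
    """Same steps as above: unfold width, then height, then transpose.

    x is assumed to have shape (C, H, W). The function name is hypothetical.
    """
    out = x.unfold(2, size, stride).unfold(1, size, stride)
    # dims are now (C, nH, nW, col_in_patch, row_in_patch)
    out = torch.transpose(out, 3, 4)   # -> (C, nH, nW, row_in_patch, col_in_patch)
    out = out.permute(1, 2, 0, 3, 4)   # -> (nH, nW, C, row_in_patch, col_in_patch)
    return out.contiguous().view(out.size(0) * out.size(1), -1)


def flat_patches_hw(x, size, stride):
    """Variant: unfold height first, then width, so no transpose is needed."""
    out = x.unfold(1, size, stride).unfold(2, size, stride)
    # dims are already (C, nH, nW, row_in_patch, col_in_patch)
    out = out.permute(1, 2, 0, 3, 4)
    return out.reshape(out.size(0) * out.size(1), -1)


x = torch.arange(3 * 8 * 8).reshape(3, 8, 8)
flat = flat_patches(x, 4, 4)
flat_hw = flat_patches_hw(x, 4, 4)

# Compare each row with the patch obtained by slicing the input directly.
n_cols = 8 // 4
for idx in range(flat.size(0)):
    r, c = divmod(idx, n_cols)
    ref = x[:, r * 4:(r + 1) * 4, c * 4:(c + 1) * 4].reshape(-1)
    assert torch.equal(flat[idx], ref)
    assert torch.equal(flat_hw[idx], ref)
```

Each row of `flat` is one patch with all channels concatenated, and the patches themselves are ordered row by row across the image.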