Hi, I’m very new to this but foolishly tried to implement my own novel architecture. It’s very similar to a Transformer: the pipeline takes in a “batch of article headlines” tensor, passes it through the encoder half of the Transformer, then through several transformations and variants of the Transformer encoder. The output is then summed into a single vector and softmaxed. I also wrote a custom loss function.

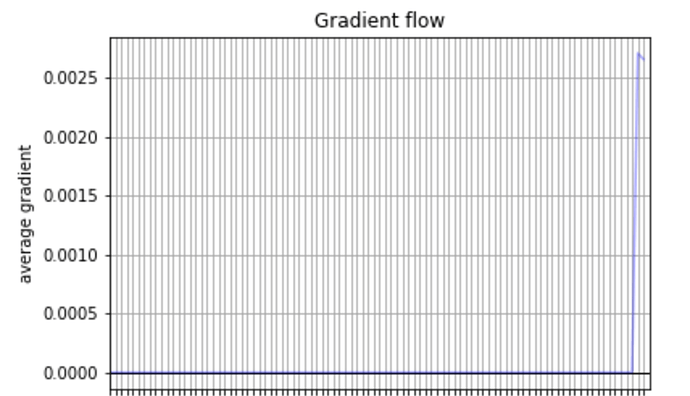

When I graph the average gradients per layer, it looks like:

Meaning the last layer’s gradients are huge and the rest are barely existent. (This is after clipping the gradients to 0.25; before that, they were around 1e7.)
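
For anyone who wants to reproduce this kind of measurement, here is a minimal sketch of one way to collect per-parameter average gradients and apply clipping. It is not the code used for the graph above: the small `nn.Sequential` model is a hypothetical stand-in, `mean_abs_grads` is a helper name made up for illustration, and it assumes "clipping to 0.25" means global-norm clipping with `torch.nn.utils.clip_grad_norm_` (value clipping with `clip_grad_value_` would be the other reading).

```python
import torch
import torch.nn as nn


def mean_abs_grads(model):
    """Return {parameter name: mean absolute gradient} for parameters that have a gradient."""
    return {
        name: p.grad.abs().mean().item()
        for name, p in model.named_parameters()
        if p.grad is not None
    }


torch.manual_seed(0)

# Hypothetical stand-in model, NOT the architecture from this post.
model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.LayerNorm(16), nn.Linear(16, 4))

x = torch.randn(4, 8)
loss = model(x).sum()
loss.backward()

# Assumption: clip the global gradient norm to 0.25.
# The call returns the total norm measured before clipping.
norm_before = torch.nn.utils.clip_grad_norm_(model.parameters(), max_norm=0.25)

grads = mean_abs_grads(model)
for name, g in grads.items():
    print(f"{name}: {g:.3e}")
```

Logging `norm_before` next to the per-parameter values makes it easier to tell whether one parameter group dominates the total norm before clipping rescales everything.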

Here’s my relevant code:

    def forward(self, example):
        art_mask = Variable((example.Art[:,:,0] != 0).unsqueeze(-2)).cuda()
        Art = Variable(example.Art).cuda()

        # To give context to article embeddings, pass through Transformer Encoder block
        Art = self.encoder_block(self.position_layer(Art), art_mask).cuda()
        Tsf = example.Tsf.cuda().repeat((1,Art.shape[1],1)).cuda()

        # Concatenate Tsf to Art
        Art = torch.cat((Art, Tsf), dim=2).cuda()

        # Convert Art to Ent and construct ent_mask
        Ent = Art[example.EntArt[:,:,0], example.EntArt[:,:,1],:].cuda()
        ent_mask = (example.EntArt[:,:,0] == -1).unsqueeze(-2).cuda()

        # Pass to graph block, alternating layers of Relational Attn and Entity Self Attn
        Ent = self.graph_block(Ent, ent_mask, example.RelEnt).cuda()

        # Slice and reorder Ent into assets tensor
        A = len(self.assets_list)
        Ass = torch.full((A,1), -1, dtype=torch.long).cuda()
        for i,uri in enumerate(self.assets_list):
            if uri in example.AssetIndex:
                Ass[i] = example.AssetIndex[uri]

        Assets = Ent[Ass, :, :].squeeze(1).cuda()
        mask = Ass.unsqueeze(2).repeat(1,Assets.shape[1],Assets.shape[2]).cuda()
        Assets = Assets.masked_fill(mask == -1, -1e9).cuda()
        Assets = Assets.sum(dim = 1).squeeze(1)
        Assets = torch.matmul(Assets, self.W).cuda()
        bias = torch.zeros((1)).cuda()
        Assets = torch.cat((Assets, bias)).cuda()
        return self.softmax(Assets)

    """
    prices[i] is normalized closing / opening
    :param prices <torch.Tensor(batch_size, len(assets))>
    """
    def loss_f(model, XY):
        examples, prices = XY
        portfolios = torch.stack([model.forward(ex) for ex in examples], dim=0)
        prices = Variable(prices)

        # safe asset (US Dollars) at prices[:,-1]
        prices = torch.cat((prices, torch.ones((prices.shape[0], 1), dtype=torch.float)), dim = 1).cuda()
        return -torch.sum(portfolios * prices) / 4 # batch size
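
To make the arithmetic of the loss concrete, here is a small CPU-only sketch with made-up numbers. `portfolio_loss` is a hypothetical helper that mirrors `loss_f` above, except that it takes the portfolio weights directly instead of calling the model and divides by the actual batch size rather than the hard-coded 4.

```python
import torch


def portfolio_loss(portfolios, prices):
    """Negative batch-mean portfolio return, following the arithmetic of loss_f."""
    # Append the safe asset (price ratio 1.0) as the last column.
    cash = torch.ones((prices.shape[0], 1), dtype=prices.dtype)
    prices = torch.cat((prices, cash), dim=1)
    # Assumption: divide by the real batch size instead of a constant.
    return -torch.sum(portfolios * prices) / prices.shape[0]


# Two examples, two risky assets plus cash; numbers are invented for illustration.
portfolios = torch.tensor([[0.5, 0.5, 0.0],
                           [0.0, 0.0, 1.0]])
prices = torch.tensor([[1.10, 0.90],
                       [1.20, 0.80]])

loss = portfolio_loss(portfolios, prices)
print(loss.item())
```

Both rows return about 1.0 (0.5 * 1.10 + 0.5 * 0.90 for the first, all cash for the second), so the loss is about -1.0: lower loss means a higher average return.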

The parameters with the large gradients are the coefficients and biases of a LayerNorm layer at the end of the transformer-esque architecture.