Hi there,

I’m fine-tuning Faster R-CNN on my custom dataset, following the official PyTorch tutorial on fine-tuning object detection models. For some reason, the model learns to detect the objects in my dataset, but the mean average precision (mAP) is always 0. I know the model is learning because I inspected its outputs during evaluation, and the predicted bounding boxes match the ground-truth bounding boxes quite well. My conclusion is that the computation of the evaluation metrics isn’t working, and I’d like to know why.

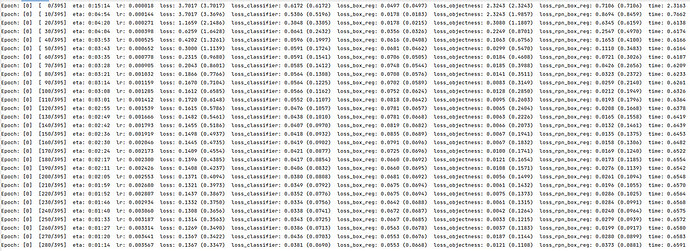

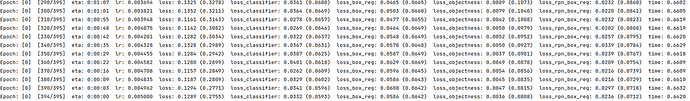

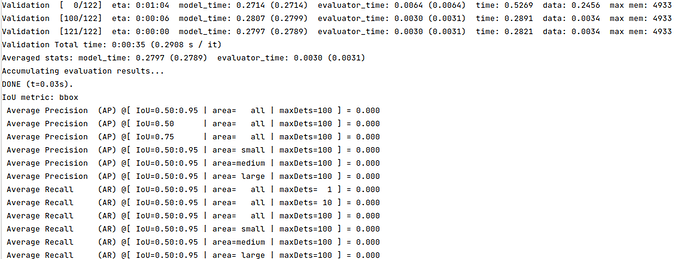

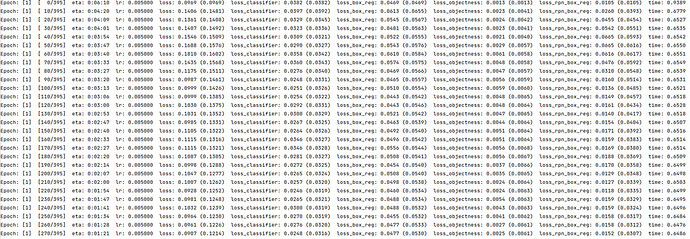

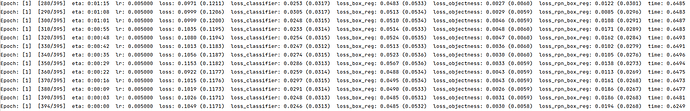
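Visual agreement can be confirmed numerically. The sketch below is a minimal, dependency-free IoU check. It assumes both predictions and ground truth are lists of `[x1, y1, x2, y2]` boxes in absolute pixel coordinates, the format torchvision’s Faster R-CNN uses. The helper names `box_iou` and `best_ious` are hypothetical. For tensors, `torchvision.ops.box_iou` computes the same pairwise matrix.

```python
# Minimal IoU sanity check between predicted and ground-truth boxes.
# Assumption: every box is [x1, y1, x2, y2] in absolute pixel coordinates.

def box_iou(a, b):
    """Intersection-over-union of two [x1, y1, x2, y2] boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def best_ious(pred_boxes, gt_boxes):
    """For each ground-truth box, the highest IoU with any predicted box."""
    return [max((box_iou(p, g) for p in pred_boxes), default=0.0) for g in gt_boxes]


if __name__ == "__main__":
    # Hypothetical boxes for illustration only.
    gt = [[10, 10, 50, 50]]
    pred = [[12, 11, 49, 52]]
    print(best_ious(pred, gt))
```

If these values are well above 0.5 but mAP is still 0, the mismatch is more likely in what the evaluator receives: box format, or class IDs. COCO-style AP is computed per category, so a well-placed box with the wrong label still counts as a miss.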

Firstly, here’s what I see in the console while training and evaluating for two epochs:

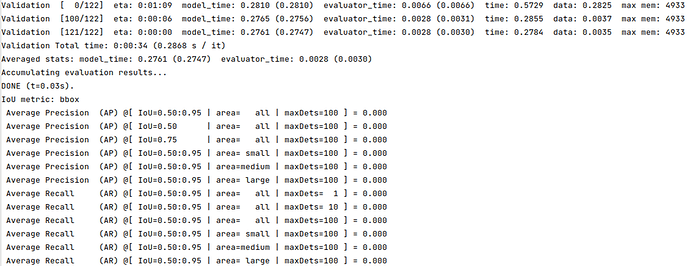

Secondly, here are some hyperparameters of my training:

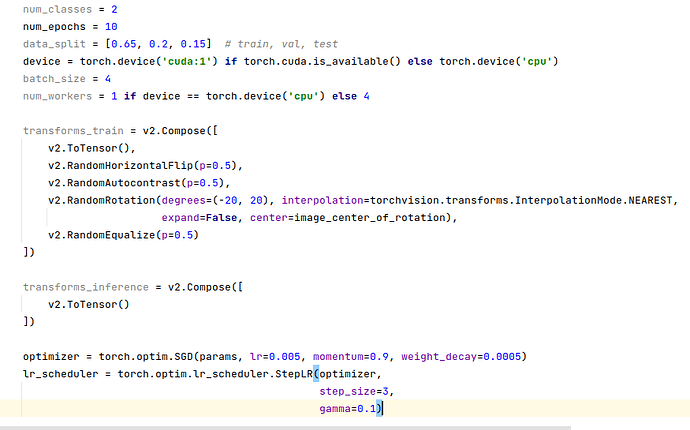

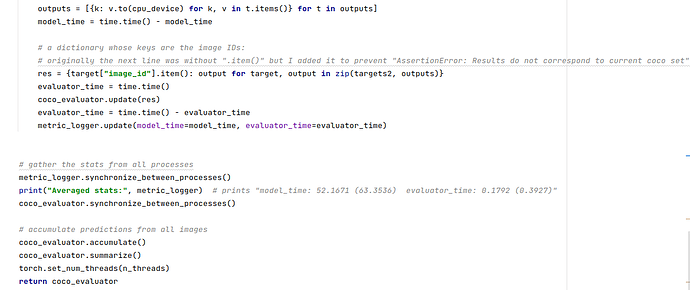

Here’s the evaluation function that I use:

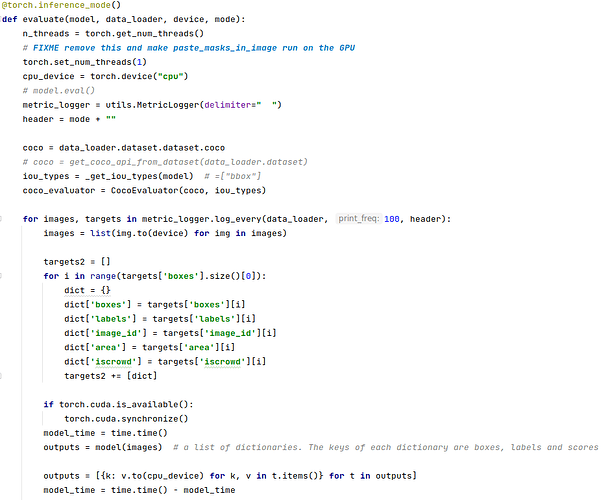

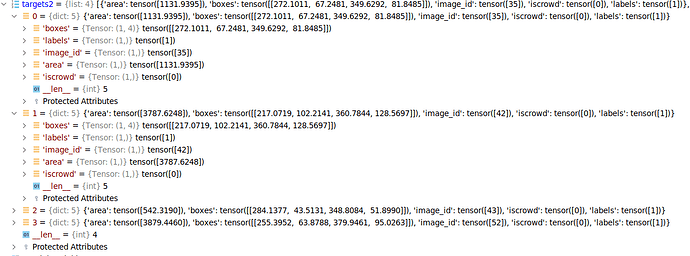

Here are the ground-truth labels used in the evaluation:

Could anyone help me find the reason why the evaluation metrics are always zero even though the model finds the bounding boxes?
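One hedged way to narrow this down is to validate the targets that reach the evaluator. The sketch below assumes the target layout described in the torchvision fine-tuning tutorial:

- `"boxes"` holds `[x1, y1, x2, y2]` boxes.
- `"labels"` holds integer class IDs, with 0 reserved for background.

The function name `check_target` is hypothetical. It expects plain lists, so call `.tolist()` on tensors first. The checks are heuristics, not an exhaustive validation.

```python
# Heuristic sanity checks for one detection target dict.
# Assumption: target["boxes"] is a list of [x1, y1, x2, y2] and
# target["labels"] is a list of ints where 0 means background.

def check_target(target, image_width, image_height):
    """Return a list of human-readable problems found in one target dict."""
    problems = []
    boxes = target.get("boxes", [])
    labels = target.get("labels", [])
    if len(boxes) != len(labels):
        problems.append(f"{len(boxes)} boxes but {len(labels)} labels")
    for i, (x1, y1, x2, y2) in enumerate(boxes):
        # A box with x2 <= x1 or y2 <= y1 may be in [x, y, w, h] format.
        if x2 <= x1 or y2 <= y1:
            problems.append(f"box {i} does not satisfy x2 > x1 and y2 > y1")
        if x1 < 0 or y1 < 0 or x2 > image_width or y2 > image_height:
            problems.append(f"box {i} lies outside the {image_width}x{image_height} image")
    for i, label in enumerate(labels):
        if label == 0:
            problems.append(f"label {i} is 0, which is reserved for background")
    return problems
```

An empty list does not prove the targets are correct. It only rules out these common mismatches.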

In addition, does anyone know whether it makes sense that the box regression loss barely improves during training?
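To judge whether `loss_box_reg` is really flat, track each loss component separately per epoch. In training mode, torchvision’s Faster R-CNN returns a dict of losses with keys such as `loss_classifier`, `loss_box_reg`, `loss_objectness` and `loss_rpn_box_reg`. The sketch below assumes those values have been converted to floats, e.g. with `{k: v.item() for k, v in loss_dict.items()}`, and appended to a per-epoch list. The helper names are hypothetical.

```python
from collections import defaultdict

# Assumption: each element of loss_dicts is {loss_name: float} for one iteration.

def mean_losses(loss_dicts):
    """Average each loss component over a list of {name: float} dicts."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for d in loss_dicts:
        for name, value in d.items():
            totals[name] += float(value)
            counts[name] += 1
    return {name: totals[name] / counts[name] for name in totals}


def relative_change(first, last):
    """Per-component relative change between two epochs' mean losses."""
    return {
        k: (last[k] - first[k]) / first[k]
        for k in first
        if k in last and first[k] != 0
    }
```

Comparing `relative_change` across components shows whether the box regression term is stalling while the others keep decreasing. A small relative drop in `loss_box_reg` is not necessarily a problem by itself.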

David.