Hi all,

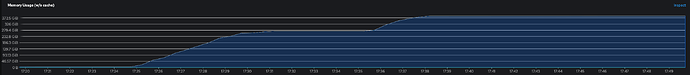

I want to extract flow and RGB features from video frames, using resnet50 for the RGB features and raft_large for the flow features. Unfortunately, extracting features for even a single frame (two frames for flow) makes my memory usage spike to ~400 GB (please see the screenshot above).

Both models are loaded from PyTorch's torchvision library with their default weights. To ensure the RGB and flow features have the same shape, the output of the last iteration of raft_large is passed through torchvision's flow_to_image function, and the resulting image is then also fed into resnet50. Features from resnet50 are extracted at the ‘flatten’ layer of the network.

I’ve used the following code to disable gradient computation, hoping to reduce the memory overhead of the models, but the improvement is minor at best.

    for param in model.parameters():
        param.requires_grad = False
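
The same intent is often expressed by wrapping the forward pass in `torch.inference_mode()` instead of freezing each parameter. A minimal sketch, using a small hypothetical stand-in model rather than resnet50 or raft_large:

```python
import torch
from torch import nn

# Hypothetical stand-in model; in the real setup this would be
# resnet50 or raft_large.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Flatten(),
).eval()

frame = torch.rand(1, 3, 32, 32)

# Inside this context autograd records nothing, so no graph is built
# for the forward pass.
with torch.inference_mode():
    features = model(frame)
```

Since autograd already records no graph when neither the parameters nor the inputs require gradients, I would not expect this to change memory usage much compared to the loop above.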

What else could I do to decrease memory consumption?

Best, Jona