I have images that are not natural images and I am planning to use Inception V3 for fine-tuning.

I adapted this code (with some slight modifications/additions) from the PyTorch training code and Piotr’s code here. However, I get an error:

```python
import os

import torch
from torchvision import datasets

print("Initializing Datasets and Dataloaders...")

# Create training, validation and test datasets
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x), data_transforms[x]) for x in ['train', 'val', 'test']}

# Create training, validation and test dataloaders
print('batch size: ', batch_size)
dataloaders_dict = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=batch_size, shuffle=True, num_workers=4) for x in ['train', 'val', 'test']}

mean = 0.
std = 0.
nb_samples = 0.
for data in dataloaders_dict['train']:
    # data is an [images, labels] pair
    print(print(len(data)))
    print("data[0] shape: ", data[0].shape)
    batch_samples = data[0].size(0)
    data = data[0].view(batch_samples, data[0].size(1), -1)
    mean += data[0].mean(2).sum(0)
    std += data[0].std(2).sum(0)
    nb_samples += batch_samples

mean /= nb_samples
std /= nb_samples
```

I assume I need to run similar code for `dataloaders_dict['val']` and `dataloaders_dict['test']` as well. Please correct me if I am wrong. Anyway, the error is:

```
Waiting for W&B process to finish... (success).
Synced 4 W&B file(s), 0 media file(s), 0 artifact file(s) and 0 other file(s)
Successfully finished last run (ID:bwpak3jh). Initializing new run:
Tracking run with wandb version 0.12.11
Initializing Datasets and Dataloaders...
batch size: 512
2
None
data[0] shape: torch.Size([512, 3, 299, 299])
```

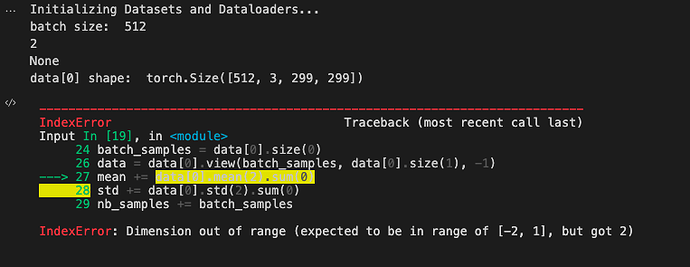

```
---------------------------------------------------------------------------
IndexError                                Traceback (most recent call last)
Input In [19], in <module>
     24 batch_samples = data[0].size(0)
     26 data = data[0].view(batch_samples, data[0].size(1), -1)
---> 27 mean += data[0].mean(2).sum(0)
     28 std += data[0].std(2).sum(0)
     29 nb_samples += batch_samples

IndexError: Dimension out of range (expected to be in range of [-2, 1], but got 2)
```
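The error seems to come from reassigning `data` inside the loop: after `data = data[0].view(batch_samples, data[0].size(1), -1)`, `data` is already a `[B, C, H*W]` tensor, so `data[0]` picks out the first image and yields a 2-D `[C, H*W]` tensor, which has no dimension 2 (hence "expected to be in range of [-2, 1]"). A minimal sketch of the same loop with a separate variable for the flattened batch follows. The helper name `channel_mean_std` is hypothetical, and random tensors in a `TensorDataset` stand in for the `ImageFolder` data so the snippet runs on its own.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def channel_mean_std(loader):
    """Average of per-image channel mean/std, as in the original loop (hypothetical helper)."""
    # Assumes 3-channel (RGB) images; ImageFolder's default loader converts to RGB
    mean = torch.zeros(3)
    std = torch.zeros(3)
    nb_samples = 0
    for images, _labels in loader:
        batch_samples = images.size(0)
        # [B, C, H, W] -> [B, C, H*W], stored in a new variable instead of overwriting data
        flat = images.reshape(batch_samples, images.size(1), -1)
        # Reduce over the pixels (dim 2), then sum over the images in the batch (dim 0)
        mean += flat.mean(2).sum(0)
        std += flat.std(2).sum(0)
        nb_samples += batch_samples
    return mean / nb_samples, std / nb_samples


# Stand-in data: 10 random 3x8x8 "images" with dummy labels
fake_images = torch.rand(10, 3, 8, 8)
fake_labels = torch.zeros(10, dtype=torch.long)
loader = DataLoader(TensorDataset(fake_images, fake_labels), batch_size=4)
mean, std = channel_mean_std(loader)
print(mean.shape, std.shape)  # torch.Size([3]) torch.Size([3])
```

With the real data this would be called as `channel_mean_std(dataloaders_dict['train'])`. Note that averaging per-image std values gives an approximation of the dataset-wide std, not the exact value.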

For now, I have commented out the `Normalize` step of the data transforms and am using them only for resizing. I am not sure exactly what the data transforms should look like when the first goal is to compute the mean and std tensors. Please let me know if this is wrong.

```python
from torchvision import transforms

input_size = 299  # for Inception V3

data_transforms = {
    'train': transforms.Compose([
        transforms.RandomResizedCrop(input_size),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        #transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'val': transforms.Compose([
        transforms.Resize(input_size),
        transforms.CenterCrop(input_size),
        transforms.ToTensor(),
        #transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'test': transforms.Compose([
        transforms.Resize(input_size),
        transforms.CenterCrop(input_size),
        transforms.ToTensor(),
        #transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}
```