to_tensor = transforms.ToTensor()

img = to_tensor(train_dataset[0]['image'])

img

This converts my image values to the range 0 to 1, which is expected. It also converts img from an ndarray to a torch.Tensor.
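To make the conversion concrete, here is a minimal NumPy-only sketch of what ToTensor does to a uint8 ndarray image: it moves the channel axis to the front (H x W x C becomes C x H x W) and scales the values to [0, 1]. The array `hwc_uint8` is made-up stand-in data, and the code only emulates the transform; it is not torchvision's implementation.

```python
import numpy as np

# Hypothetical 4 x 6 RGB image laid out as H x W x C with uint8 values.
hwc_uint8 = np.random.randint(0, 256, size=(4, 6, 3), dtype=np.uint8)

# Emulate the effect of ToTensor: channels first, then scale to [0, 1].
chw_float = hwc_uint8.transpose(2, 0, 1).astype(np.float32) / 255.0

print(hwc_uint8.shape)  # (4, 6, 3)
print(chw_float.shape)  # (3, 4, 6)
```

The layout change matters for any later reduction: the channel axis is no longer the last one, so axes that were correct for the ndarray version may not be correct for the tensor version.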

Previously, without to_tensor (which I now need), the following code snippet worked. I am not sure it is the best way to find the means and stds of the training set, but in any case it no longer works. How can I make it work?

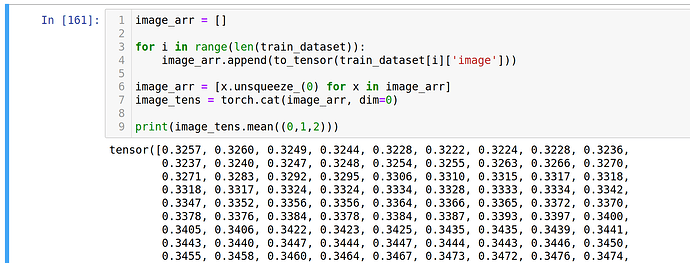

image_arr = []

for i in range(len(train_dataset)):
    image_arr.append(to_tensor(train_dataset[i]['image']))

print(np.mean(image_arr, axis=(0, 1, 2)))
print(np.std(image_arr, axis=(0, 1, 2)))

The error is:

---------------------------------------------------------------------------
ValueError                                Traceback (most recent call last)
<ipython-input-147-0e007c030629> in <module>
      4     image_arr.append(to_tensor(train_dataset[i]['image']))
      5
----> 6 print(np.mean(image_arr, axis=(0, 1, 2)))
      7 print(np.std(image_arr, axis=(0, 1, 2)))

<__array_function__ internals> in mean(*args, **kwargs)

~/anaconda3/lib/python3.7/site-packages/numpy/core/fromnumeric.py in mean(a, axis, dtype, out, keepdims)
   3333
   3334     return _methods._mean(a, axis=axis, dtype=dtype,
-> 3335                           out=out, **kwargs)
   3336
   3337

~/anaconda3/lib/python3.7/site-packages/numpy/core/_methods.py in _mean(a, axis, dtype, out, keepdims)
    133
    134 def _mean(a, axis=None, dtype=None, out=None, keepdims=False):
--> 135     arr = asanyarray(a)
    136
    137     is_float16_result = False

~/anaconda3/lib/python3.7/site-packages/numpy/core/_asarray.py in asanyarray(a, dtype, order)
    136
    137     """
--> 138     return array(a, dtype, copy=False, order=order, subok=True)
    139
    140

ValueError: only one element tensors can be converted to Python scalars
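The traceback shows NumPy trying to turn a Python list of tensors into an array, which fails. One possible direction, sketched below under some assumptions, is to stack the tensors with torch.stack and reduce in PyTorch instead. The sketch assumes every image has the same shape and that to_tensor returns C x H x W tensors, so the per-channel reduction runs over dims (0, 2, 3) rather than (0, 1, 2). The helper name `channel_stats` and the random `fake_images` list are hypothetical stand-ins for the real dataset.

```python
import torch

def channel_stats(tensors):
    """Per-channel mean and std for a list of equally sized C x H x W tensors."""
    batch = torch.stack(tensors)       # shape: N x C x H x W
    mean = batch.mean(dim=(0, 2, 3))   # reduce over images, height, width
    # unbiased=False gives the population std, matching np.std's default.
    std = batch.std(dim=(0, 2, 3), unbiased=False)
    return mean, std

# Stand-in data (hypothetical): five random 3 x 8 x 8 "images" in [0, 1).
fake_images = [torch.rand(3, 8, 8) for _ in range(5)]
mean, std = channel_stats(fake_images)
print(mean.shape, std.shape)
```

With the real data, the list would be built as in the loop above, i.e. from to_tensor(train_dataset[i]['image']). Stacking holds the whole training set in memory at once, so for a large dataset accumulating running sums per channel may be a better fit.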