Hi, I am curious about how to calculate the model size (in MB) of a neural network in PyTorch.

Is it equivalent to the size of the file created by `torch.save(model.state_dict(), 'example.pth')`?

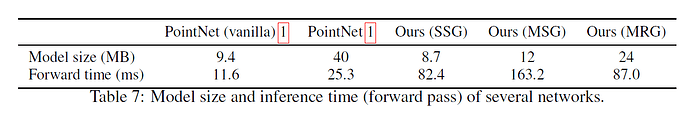

Please refer to the image below from PointNet++

I wouldn't rely on the size of the stored file, as the file might be compressed.

Instead, you could count the elements of all parameters and buffers, multiply each count by its element size in bytes, and sum the results as seen here:

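```python
import torchvision.models as models

model = models.resnet18()

# Bytes occupied by all parameters (weights, biases, ...)
param_size = 0
for param in model.parameters():
    param_size += param.nelement() * param.element_size()

# Bytes occupied by all buffers (e.g. BatchNorm running stats)
buffer_size = 0
for buffer in model.buffers():
    buffer_size += buffer.nelement() * buffer.element_size()

size_all_mb = (param_size + buffer_size) / 1024**2
print('model size: {:.3f}MB'.format(size_all_mb))
```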

> model size: 44.629MB
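To compare this with the file-based approach from the question, here is a minimal sketch that serializes a `state_dict` into an in-memory buffer with `torch.save` and reports both numbers. The small conv/BatchNorm model is a hypothetical stand-in for resnet18 so the numbers stay small. Besides the tensor data, the saved file also contains serialization metadata (pickle data and archive headers), so its size is not expected to match the tensor payload exactly.

```python
import io

import torch
import torch.nn as nn

# Hypothetical toy model: conv + BatchNorm, so it has both parameters and buffers
model = nn.Sequential(nn.Conv2d(3, 16, kernel_size=3), nn.BatchNorm2d(16), nn.ReLU())

# Same calculation as above: element count times element size, summed
tensor_bytes = sum(p.nelement() * p.element_size() for p in model.parameters())
tensor_bytes += sum(b.nelement() * b.element_size() for b in model.buffers())

# Serialize into memory instead of writing 'example.pth' to disk
buf = io.BytesIO()
torch.save(model.state_dict(), buf)
file_bytes = len(buf.getvalue())

print('tensor bytes: {}, serialized bytes: {}'.format(tensor_bytes, file_bytes))
```

For a model this small the serialization overhead dominates; for large models the two numbers get relatively closer, but only the element-wise calculation measures the tensors themselves.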

Thank you so much!!! @ptrblck

Hello @ptrblck, I have a question about activations: do they affect the model size? I am working on model quantization, and when I represent activations in lower precision (e.g. 4 bits), my accuracy drops a lot and the model doesn't learn. So instead I quantized the weights to 4 bits and kept the activations in 8 bits, which works now. My question is how this affects the model size.

Thank you in advance

I think it depends on what you consider to count as the "model size". I would probably not count the activations toward the model size, as they usually depend on the input shape as well as the model architecture. Thus, I would only count the internal model states (parameters, buffers, etc.) toward the model size.
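As a rough illustration of why weight-only quantization already shrinks the stored model, the following back-of-the-envelope sketch converts a parameter count into storage at different bit widths. It assumes every parameter is stored at exactly the given width and ignores per-tensor scales/zero-points, buffers, and any packing overhead of a real quantized format. `param_storage_mb` is a hypothetical helper; 11,689,512 is the parameter count of torchvision's resnet18.

```python
def param_storage_mb(num_params: int, bits: int) -> float:
    """Storage in MB (1024**2 bytes) for num_params values at `bits` bits each.

    Assumption: no scales, zero-points, or packing overhead are included.
    """
    return num_params * bits / 8 / 1024**2


RESNET18_PARAMS = 11_689_512  # parameters only, buffers excluded

for bits in (32, 8, 4):
    print('{:>2}-bit weights: {:.3f}MB'.format(bits, param_storage_mb(RESNET18_PARAMS, bits)))
```

Going from 32-bit floats to 4-bit weights divides the parameter storage by 8, independent of how the activations are represented.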

Thank you for your reply. So, if I understand correctly, quantizing the activations would reduce the model size during training but not during inference?

Activations are used in both cases. During training, they are stored for the gradient calculation in the backward pass; during inference, they do not need to be kept, as there won't be any gradient calculation. Quantizing them could therefore still decrease the peak memory usage during inference, but the expected savings wouldn't be as large as during training.

I understand. Is there still a way to calculate the storage needed for the activations, to see how it affects my model size? Even if the effect during inference might be small, I want to deploy my model on an embedded system, so a thorough analysis is critical for me. I am also a PhD student and new to this topic.

Again, thank you for your reply. I tried posting in a more relevant section, but no one replied, so I posted here since I see you a lot in these threads and you are very helpful.

Yes, you could e.g. use forward hooks to record the output shapes of each module. However, depending on the model architecture, registering forward hooks on every module might be a bit tricky, as you could easily track the same output multiple times if your modules are nested.
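A minimal sketch of the forward-hook approach, assuming that only leaf modules (modules without children) are hooked, so container modules such as `nn.Sequential` do not report their children's outputs a second time. `activation_bytes` is a hypothetical helper name and the nested model is a toy example. A module that is called more than once (e.g. a shared ReLU) fires its hook on every call, so the sizes are accumulated per module name.

```python
import torch
import torch.nn as nn


def activation_bytes(model: nn.Module, example_input: torch.Tensor) -> dict:
    """Sum the output bytes of every leaf module over one forward pass."""
    sizes = {}
    handles = []

    def make_hook(name):
        def hook(module, inputs, output):
            # This sketch only handles plain tensor outputs
            if isinstance(output, torch.Tensor):
                sizes[name] = sizes.get(name, 0) + output.nelement() * output.element_size()
        return hook

    for name, module in model.named_modules():
        # Skip containers: their outputs were already produced by their children
        if len(list(module.children())) == 0:
            handles.append(module.register_forward_hook(make_hook(name)))

    with torch.no_grad():
        model(example_input)

    # Remove the hooks so later forward passes are unaffected
    for handle in handles:
        handle.remove()
    return sizes


# Toy model with a nested container
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Sequential(nn.Conv2d(8, 16, kernel_size=3, stride=2, padding=1), nn.ReLU()),
    nn.Flatten(),
    nn.Linear(16 * 16 * 16, 10),
).eval()

sizes = activation_bytes(model, torch.randn(1, 3, 32, 32))
print('summed activations: {:.3f}MB'.format(sum(sizes.values()) / 1024**2))
```

The sum describes the total activation volume produced in one forward pass, not the peak memory: in-place ops and views (such as the output of `nn.Flatten`) share storage with their input, and tensors freed during the pass are still counted.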

Maybe torch.fx would be helpful here, as it might allow you to analyze the actual computation graph (and thus the output activation shapes).
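A minimal torch.fx sketch, assuming the model can be traced with `symbolic_trace` (models with data-dependent control flow may not be). `ShapeProp` runs the traced graph on an example input and stores each node's output shape and dtype in `node.meta['tensor_meta']`. Because every call of a module becomes its own graph node, nested containers are not double-counted. `fx_activation_bytes` is a hypothetical helper name, and nodes with tuple outputs are skipped in this sketch.

```python
import torch
import torch.nn as nn
from torch.fx import symbolic_trace
from torch.fx.passes.shape_prop import ShapeProp


def fx_activation_bytes(model: nn.Module, example_input: torch.Tensor) -> dict:
    """Output bytes of each call_module/call_function/call_method node."""
    gm = symbolic_trace(model)
    # Annotates every node with the shape/dtype of its output
    ShapeProp(gm).propagate(example_input)

    sizes = {}
    for node in gm.graph.nodes:
        if node.op in ('placeholder', 'output'):
            continue
        meta = node.meta.get('tensor_meta')
        # Skip nodes without a single tensor output (e.g. tuples)
        if meta is None or not hasattr(meta, 'shape'):
            continue
        element_size = torch.empty((), dtype=meta.dtype).element_size()
        sizes[node.name] = meta.shape.numel() * element_size
    return sizes


# Toy model with a nested container
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Sequential(nn.Conv2d(8, 16, kernel_size=3, stride=2, padding=1), nn.ReLU()),
    nn.Flatten(),
    nn.Linear(16 * 16 * 16, 10),
).eval()

sizes = fx_activation_bytes(model, torch.randn(1, 3, 32, 32))
for name, nbytes in sizes.items():
    print(name, nbytes)
```

The same caveat as for hooks applies: this lists the size of each intermediate output, and turning it into a peak-memory estimate requires knowing which tensors are alive at the same time.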

How can I make this work on the torchvision quantized ResNet models? The above code works for the standard ResNet18, but for the quantized version it gives a size of 0.000MB.

Thank you, ptrblck.

I'm a newbie in PyTorch, so I google every problem I run into, and every time your answers save me.