I would like to fine-tune a pre-trained GAN available online using my own images. For example, BigGAN, which was trained on ImageNet, can generate realistic images. However, I want to generate medical images. How can I fine-tune the pre-trained model?

https://github.com/ajbrock/BigGAN-PyTorch

The linked repository has a fine-tuning section that explains how to use the code to fine-tune a model on a custom dataset. In general, I would also recommend reading the paper to check how the model was originally trained. Since your images come from a different domain (medical images), you would have to experiment to see whether the fine-tuning recipe works or whether you need to train a model from scratch.

To use the model on my own dataset, do I need the same GPU resources?

Not necessarily, but you would have to check e.g. the memory requirements and see whether you can fit a similar batch size in your setup, as the model convergence (or the training hyperparameters) might be sensitive to the batch size.
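One way to check what fits is to probe the batch size empirically. The snippet below is only a minimal sketch: `try_step` is a hypothetical callable (not part of the linked repository) that you would write yourself to run one full training iteration at a given batch size. It assumes that an out-of-memory failure surfaces as a `RuntimeError` whose message contains "out of memory", which is how PyTorch usually reports CUDA OOM errors.

```python
def find_max_batch_size(try_step, start=8, limit=4096):
    """Double the batch size until try_step reports an out-of-memory error.

    try_step(batch_size) is a hypothetical user-supplied callable that runs
    one complete training iteration (forward, backward, optimizer step).
    Returns the largest size that succeeded, or 0 if even `start` failed.
    """
    best = 0
    batch_size = start
    while batch_size <= limit:
        try:
            try_step(batch_size)
        except RuntimeError as err:
            # Assumption: OOM is signalled by "out of memory" in the message.
            # Any other RuntimeError is a real bug and is re-raised.
            if "out of memory" not in str(err).lower():
                raise
            break
        best = batch_size
        batch_size *= 2
    return best


def fake_step(batch_size):
    """Stand-in for a real training step: pretends memory runs out above 96."""
    if batch_size > 96:
        raise RuntimeError("CUDA out of memory (simulated)")


print(find_max_batch_size(fake_step))
```

With the simulated step the probe succeeds at 8, 16, 32 and 64 and fails at 128, so it reports 64. On a real GPU you would replace `fake_step` with a function that builds a batch and runs the actual generator and discriminator updates.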

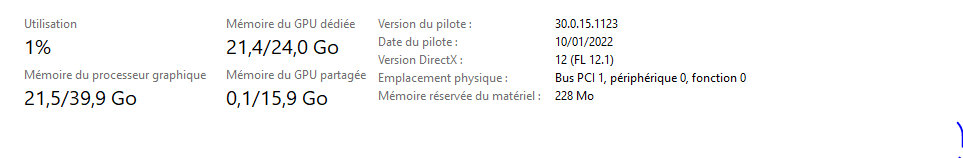

Can I train the BigGAN model from scratch with the configuration below?

- Training dataset: 7000 medical image samples

- GPU: NVIDIA GeForce RTX 3090

I guess you could, based on the description in the repository:

> You will first need to figure out the maximum batch size your setup can support. The pre-trained models provided here were trained on 8xV100 (16GB VRAM each) which can support slightly more than the BS256 used by default. Once you’ve determined this, you should modify the script so that the batch size times the number of gradient accumulations is equal to your desired total batch size (BigGAN defaults to 2048).
>
> Note also that this script uses the --load_in_mem arg, which loads the entire (~64GB) I128.hdf5 file into RAM for faster data loading. If you don’t have enough RAM to support this (probably 96GB+), remove this argument.

so you should try running it and check whether you run into any unexpected issues.
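The rule in the quoted description (batch size times number of gradient accumulations equals the desired total batch size) is simple arithmetic, sketched below. The function name is made up for illustration, and the per-step batch size of 64 is only an example value, not a measured figure for an RTX 3090. In the linked repository these numbers appear to map to the `--batch_size`, `--num_G_accumulations` and `--num_D_accumulations` arguments, but please verify that against the launch scripts.

```python
def accumulation_steps(total_batch_size, per_step_batch_size):
    """Return how many gradient accumulations reach the desired total batch size.

    Implements: per_step_batch_size * accumulations == total_batch_size.
    """
    if per_step_batch_size <= 0:
        raise ValueError("per_step_batch_size must be positive")
    if total_batch_size % per_step_batch_size != 0:
        raise ValueError("total batch size must be a multiple of the per-step batch size")
    return total_batch_size // per_step_batch_size


# Default setup from the quoted description: BS256 per step, total 2048.
print(accumulation_steps(2048, 256))

# Illustrative single-GPU case: if only 64 samples fit per step.
print(accumulation_steps(2048, 64))
```

The first call gives 8 accumulations and the second gives 32. A smaller per-step batch keeps the total batch size unchanged but makes each optimizer update take proportionally more forward and backward passes.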

I would like to use only the basic BigGAN architecture, i.e. the generator and discriminator built from basic residual blocks, without any other details and without pretrained models, just to support high-resolution images. Is that possible?

Hi Najeh_Nafti,

Were you able to fine-tune BigGAN on the medical dataset? If so, could you share how you approached it?